Introduction

We develop deep-learning-based methods for the automatic detection and analysis of anomalies in images when only a limited amount of training data is available. For example, we analyze and define the different types of damage that can occur on large structures, and we use deep learning to detect and localize them in images captured by unmanned vehicles. This task raises several problems. What constitutes damage, and how far it extends, is not clearly defined. Quality data are difficult to obtain and label, so abundant training data are lacking. Finally, the targets vary greatly in appearance, and some types are underrepresented.

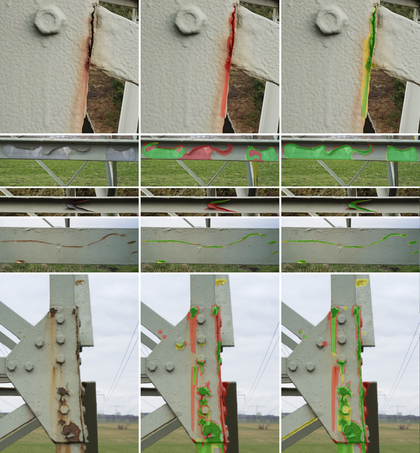

Automated Damage Inspection of Power Transmission Towers from UAV Images

Infrastructure inspection is very costly, as it requires technicians to access remote or hard-to-reach places. This is the case for power transmission towers, which are sparsely located and must be climbed by trained workers searching for damage. Recently, the industry has increasingly used drones or helicopters to record the structures remotely, sparing technicians this perilous task. This, however, leaves the problem of analyzing large numbers of images, a task with great potential for automation. Automating it is challenging for several reasons. First, freely available training data are lacking, and such data are difficult to collect. Second, the boundaries of what constitutes damage are fuzzy, which introduces a degree of subjectivity into the labelling. Third, the imbalanced class distribution in the images further increases the difficulty of the task. This paper tackles structural damage detection in transmission towers and addresses these issues. Our main contributions are a system for damage detection in remotely acquired drone images, which applies techniques to overcome data scarcity and ambiguity, and an evaluation of the viability of this approach for this particular problem.
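One common way to counter an imbalanced class distribution is to weight the training loss by inverse class frequency. The NumPy sketch below uses the `n_samples / (n_classes * count)` heuristic (the one scikit-learn calls `"balanced"`) inside a weighted cross-entropy. It is an illustration only, not necessarily the technique used in the paper; the function names and the label counts are hypothetical.

```python
import numpy as np


def inverse_frequency_weights(labels, num_classes):
    """Per-class weights n_samples / (n_classes * count); rare classes get larger weights."""
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    counts = np.maximum(counts, 1.0)  # guard against classes that never occur
    return len(labels) / (num_classes * counts)


def weighted_cross_entropy(logits, labels, class_weights):
    """Mean cross-entropy where each sample is weighted by the weight of its class."""
    # Numerically stable log-softmax over the class axis.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    nll = -log_probs[np.arange(len(labels)), labels]
    w = class_weights[labels]
    return (w * nll).sum() / w.sum()


# Hypothetical label counts: one dominant class and two rare ones.
labels = np.array([0] * 90 + [1] * 8 + [2] * 2)
class_weights = inverse_frequency_weights(labels, num_classes=3)
print(class_weights)

rng = np.random.default_rng(0)
print(weighted_cross_entropy(rng.normal(size=(len(labels), 3)), labels, class_weights))
```

With these counts, a sample of the rarest class contributes 45 times as much to the loss as a sample of the dominant class, so errors on rare classes are no longer drowned out.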

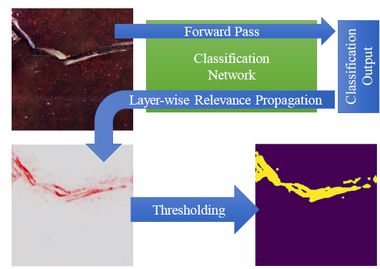

From Explanations to Segmentation: Using Explainable AI for Image Segmentation

The new era of image segmentation, which leverages the power of Deep Neural Networks (DNNs), comes with a price tag: to train a neural network for pixel-wise segmentation, a large number of training samples must be manually labeled with pixel precision. In this work, we address this with an indirect solution. Building on advances from the Explainable AI (XAI) community, we extract a pixel-wise binary segmentation from the output of Layer-wise Relevance Propagation (LRP), which explains the decision of a classification network. We show that our results are similar to those of an established U-Net segmentation architecture, while generating the training data is significantly simpler. The proposed method can be trained in a weakly supervised fashion, as training samples only need image-level labels, yet it still outputs a segmentation mask. This makes it applicable to a wider range of real applications, where tedious pixel-level labelling is often not possible.
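The core idea, turning a classifier's relevance map into a binary mask, can be sketched in NumPy. This minimal sketch assumes a small fully connected ReLU classifier with random stand-in weights and applies the LRP-ε rule; the paper's network, its LRP rules and the way it thresholds relevance may differ. The helper names `lrp_epsilon` and `relevance_to_mask` and the threshold of 0 are hypothetical.

```python
import numpy as np


def lrp_epsilon(weights, biases, x, target, eps=1e-6):
    """LRP-epsilon for a fully connected ReLU network with a linear output layer.

    weights[i] has shape (n_in, n_out); returns one relevance score per input.
    """
    # Forward pass: keep the input to every layer.
    activations = [x]
    a = x
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = a @ W + b
        a = np.maximum(z, 0.0) if i < len(weights) - 1 else z
        activations.append(a)

    # Start from the logit of the explained class only.
    R = np.zeros_like(activations[-1])
    R[target] = activations[-1][target]

    # Backward pass: redistribute relevance in proportion to the contributions a_j * w_jk.
    for i in reversed(range(len(weights))):
        W, b, a = weights[i], biases[i], activations[i]
        z = a @ W + b
        z = z + eps * np.where(z >= 0, 1.0, -1.0)  # stabilizer avoids division by ~0
        R = a * (W @ (R / z))
    return R


def relevance_to_mask(relevance, shape, threshold=0.0):
    """Binary segmentation: pixels whose relevance exceeds a (hypothetical) threshold."""
    return relevance.reshape(shape) > threshold


# Toy usage: random weights stand in for a classifier trained on image-level labels.
rng = np.random.default_rng(0)
height, width = 8, 8
weights = [rng.normal(size=(height * width, 16)), rng.normal(size=(16, 2))]
biases = [np.zeros(16), np.zeros(2)]
image = rng.random(height * width)  # flattened grayscale image in [0, 1)

relevance = lrp_epsilon(weights, biases, image, target=1)
mask = relevance_to_mask(relevance, (height, width))
print(mask.astype(int))
```

Only the image-level class index is needed to produce the mask, which is what makes weak supervision possible. With zero biases and a small ε, the input relevances sum approximately to the explained logit (LRP's conservation property).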

Publications

Clemens Peter Seibold, Johannes Wolf Künzel, Anna Hilsmann, Peter Eisert

From Explanations to Segmentation: Using Explainable AI for Image Segmentation,

International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, virtual, February 2022 (arXiv)

Aleixo Cambeiro Barreiro, Clemens Peter Seibold, Anna Hilsmann, Peter Eisert

Automated Damage Inspection of Power Transmission Towers from UAV Images,

International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, virtual, February 2022 (arXiv)