The Fraunhofer HHI is a leading research institute for mobile and fixed communications networks and for key future applications. It has consistently oriented its areas of expertise toward current and future market and development needs.

Photonic Networks and Systems

The department's research focuses on high-performance optical transmission systems for in-house, access, metropolitan, wide-area and satellite communication networks. The aim is to increase capacity while improving security and energy efficiency.

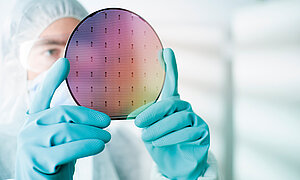

Photonic Components

The department develops optoelectronic semiconductor components and integrated optical circuits for data transmission. Further focus areas are infrared sensor systems, terahertz spectroscopy and high-performance semiconductor lasers for industrial and medical applications.

Fiber Optical Sensor Systems

The department focuses on novel photonic sensors for measurement and control systems used in early hazard detection, energy management, robotics and medical technology. These sensors are characterized by extreme miniaturization, excellent communication and networking capabilities, and high energy efficiency.

Wireless Communications and Networks

The research focus is on radio-based information transmission. The department contributes to the theory and technical feasibility of radio systems and develops hardware prototypes. This work is complemented by scientific studies, simulations and evaluations at the link and system levels.

Video Communication and Applications

The department's main focus is the research and development of efficient algorithms for the coding, transport, storage and processing of image and video signals. A further focus is the development and deployment of implementations that demonstrate the benefits and potential of new coding and transmission technologies for a range of applications.

Vision and Imaging Technologies

The focus here is on complex 2D/3D analysis and synthesis methods and on computer vision, as well as on innovative camera, sensor, display and projection systems. The department conducts research across the entire video processing chain, from content creation to playback.

Artificial Intelligence

The department develops high-performance, interpretable and efficient deep learning algorithms for various applications in multimedia and medical signal processing. The department’s main research topics are explainable and reliable AI, federated learning, and neural network compression.