Traditionally, 3D reconstruction from images has been approached by matching pixels between images, often based on photo-consistency constraints or learned features. Most approaches pass through intermediate geometry representations, such as depth maps or point clouds, before extracting a mesh. Recently, analysis-by-synthesis, a technique built around the rendering operation, has re-emerged as a promising direction for directly reconstructing objects with challenging materials under complex illumination.

We are investigating how analysis-by-synthesis, built on neural geometry representations and neural rendering, can be used in real-world multi-view reconstruction scenarios, in particular for human body reconstruction and dynamic scenes.

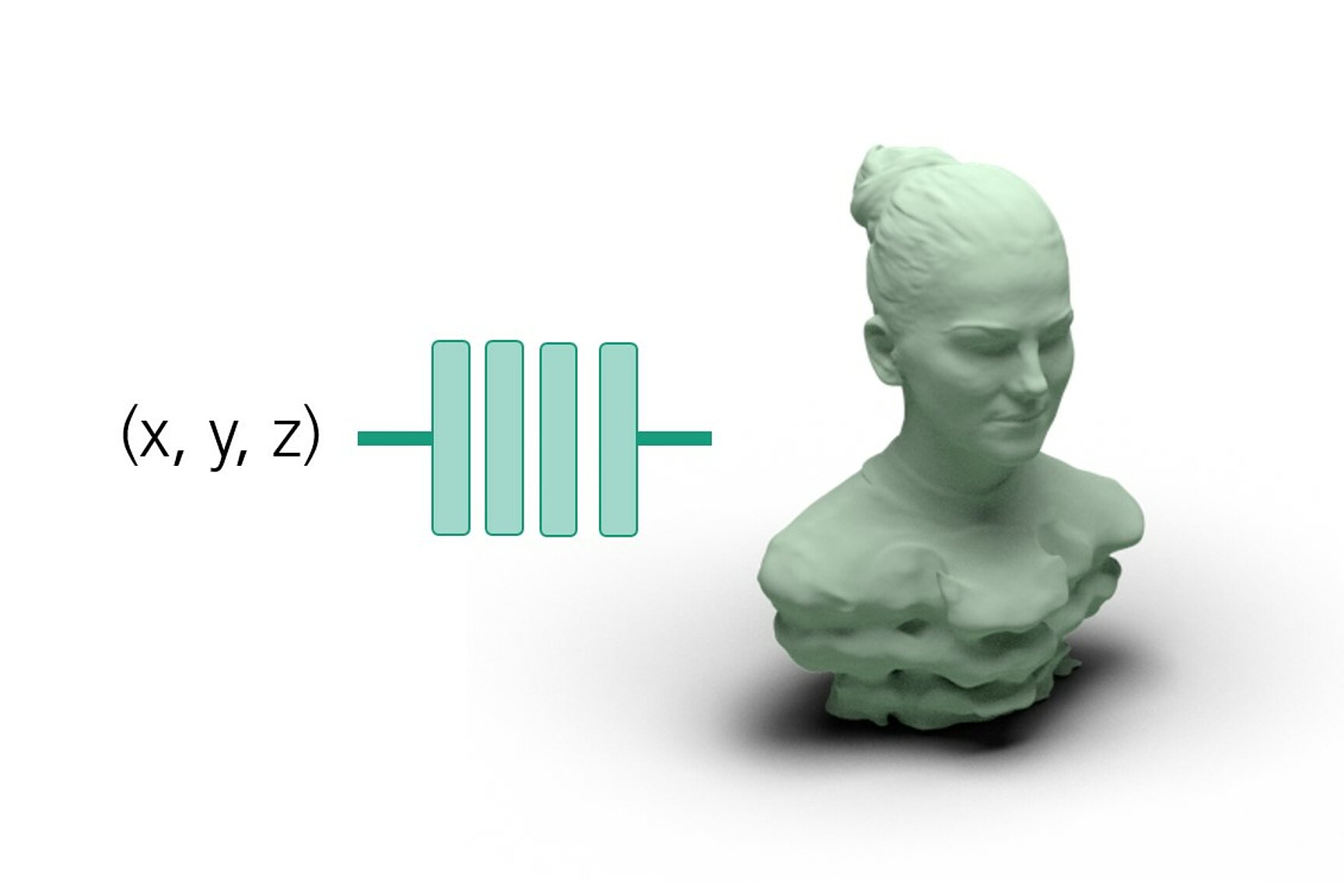

We recently presented a fast analysis-by-synthesis pipeline that combines triangle mesh surface evolution with neural rendering. At its core is a differentiable renderer based on deferred shading, an approach borrowed from real-time graphics: it first rasterizes the triangle mesh and then shades each covered pixel with a neural shader that models the interaction of geometry, material, and light. The rendered images are compared to the input images, and because the rendering pipeline is differentiable, both the neural shader and the mesh can be optimized with gradient descent.
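To make the shading-and-optimization step more concrete, here is a minimal sketch in Python with NumPy. It is not our implementation: it covers only the second, deferred stage, and it assumes that rasterization has already produced a per-pixel G-buffer of positions and normals. The mesh update is left out because it would need a differentiable rasterizer. All names (`shade`, `fit_shader`, `make_toy_gbuffer`, `toy_target`), the tiny MLP, and the synthetic Lambertian target are hypothetical choices made for illustration.

```python
import numpy as np

def init_shader(rng, n_in=6, n_hidden=16, n_out=3):
    # Hypothetical tiny MLP standing in for the neural shader.
    return {
        "W1": rng.normal(0.0, 0.5, (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.5, (n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def shade(params, gbuffer):
    """Map per-pixel G-buffer features (N, 6) to RGB colors (N, 3)."""
    h = np.tanh(gbuffer @ params["W1"] + params["b1"])
    rgb = h @ params["W2"] + params["b2"]
    return rgb, h

def loss_and_grads(params, gbuffer, target):
    """Mean squared image error and its gradients (manual backpropagation)."""
    rgb, h = shade(params, gbuffer)
    diff = rgb - target
    loss = np.mean(diff ** 2)
    d_rgb = 2.0 * diff / diff.size          # d loss / d rgb
    d_pre = (d_rgb @ params["W2"].T) * (1.0 - h ** 2)  # through tanh
    grads = {
        "W1": gbuffer.T @ d_pre,
        "b1": d_pre.sum(axis=0),
        "W2": h.T @ d_rgb,
        "b2": d_rgb.sum(axis=0),
    }
    return loss, grads

def fit_shader(gbuffer, target, steps=500, lr=0.1, seed=0):
    """Plain gradient descent on the shader weights only (mesh is fixed)."""
    rng = np.random.default_rng(seed)
    params = init_shader(rng, n_in=gbuffer.shape[1])
    losses = []
    for _ in range(steps):
        loss, grads = loss_and_grads(params, gbuffer, target)
        losses.append(loss)
        for name in params:
            params[name] -= lr * grads[name]
    return params, losses

def make_toy_gbuffer(n_pixels=256, seed=1):
    # Assumption: stands in for rasterizer output (position + unit normal).
    rng = np.random.default_rng(seed)
    pos = rng.uniform(-1.0, 1.0, (n_pixels, 3))
    nrm = rng.normal(size=(n_pixels, 3))
    nrm /= np.linalg.norm(nrm, axis=1, keepdims=True)
    return np.concatenate([pos, nrm], axis=1)

def toy_target(gbuffer):
    # Assumption: synthetic "input image" from simple Lambertian shading.
    light = np.array([0.0, 0.0, 1.0])
    lambert = np.clip(gbuffer[:, 3:] @ light, 0.0, 1.0)
    albedo = np.array([0.8, 0.5, 0.3])
    return lambert[:, None] * albedo

if __name__ == "__main__":
    g = make_toy_gbuffer()
    params, losses = fit_shader(g, toy_target(g))
    print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

In a full pipeline, the G-buffer would be recomputed from the current mesh in every iteration, and the image loss would be backpropagated through the rasterizer to the vertex positions as well, typically with an automatic differentiation framework rather than hand-written gradients.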