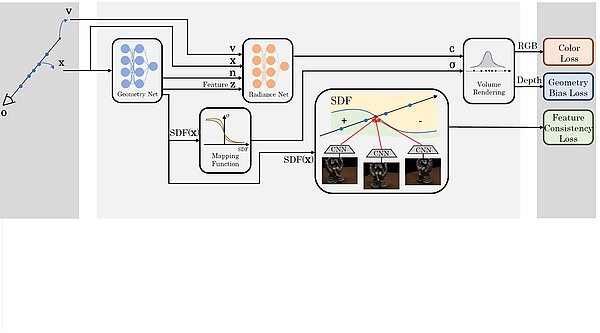

Recovering Fine Details for Neural Implicit Surface Reconstruction

In this paper, we present D-NeuS, a volume rendering-based neural implicit surface reconstruction method capable of recovering fine geometric details. It extends NeuS with two additional loss functions aimed at improving reconstruction quality.
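
The abstract does not specify D-NeuS's two additional losses, so the sketch below illustrates only the NeuS baseline that it extends. It shows how NeuS turns signed-distance (SDF) samples along a camera ray into volume-rendering weights, using the discrete opacity from the NeuS formulation with Φ_s taken as the logistic sigmoid of sharpness s. The function names, the sharpness value, and the plane-crossing test ray are illustrative assumptions, not code from either paper.

```python
import math


def logistic_cdf(x: float, s: float) -> float:
    """Phi_s(x) = 1 / (1 + exp(-s * x)), the logistic CDF used by NeuS."""
    return 1.0 / (1.0 + math.exp(-s * x))


def neus_weights(sdf: list[float], s: float) -> list[float]:
    """Rendering weights for one ray from SDF values at consecutive samples.

    alpha_i = max((Phi_s(f_i) - Phi_s(f_{i+1})) / Phi_s(f_i), 0)
    w_i     = T_i * alpha_i, with T_i = prod_{j < i} (1 - alpha_j)
    One weight is produced per section between neighbouring samples.
    """
    weights = []
    transmittance = 1.0
    for f_i, f_next in zip(sdf, sdf[1:]):
        phi_i = logistic_cdf(f_i, s)
        phi_next = logistic_cdf(f_next, s)
        alpha = max((phi_i - phi_next) / phi_i, 0.0)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights


if __name__ == "__main__":
    # Hypothetical ray hitting a plane at depth 1.05: SDF = 1.05 - t for t in [0, 2].
    depths = [0.1 * k for k in range(21)]
    sdf = [1.05 - t for t in depths]
    w = neus_weights(sdf, s=64.0)
    peak = max(range(len(w)), key=w.__getitem__)
    # prints: peak weight between t=1.0 and t=1.1
    print(f"peak weight between t={depths[peak]:.1f} and t={depths[peak + 1]:.1f}")
```

The weights concentrate on the ray section that straddles the zero level set, which is what lets NeuS render surfaces from an SDF; larger s gives a sharper peak.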

Continuous intraoperative perfusion monitoring of free microvascular anastomosed fasciocutaneous flaps using remote photoplethysmography

Intraoperative monitoring of free flaps using the remote photoplethysmography (rPPG) signal provides objective and reproducible data on tissue perfusion. We developed a monitoring algorithm for flap perfusion, which was evaluated in fifteen patients...

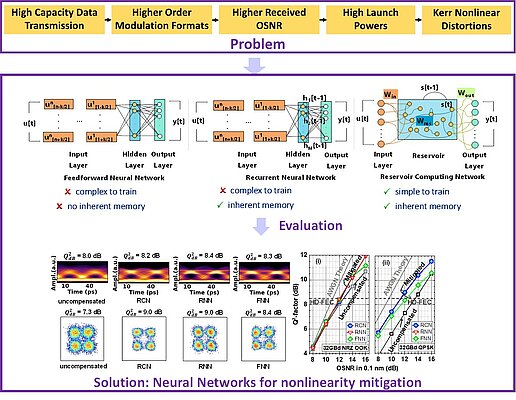

Investigating the Performance and Suitability of Neural Network Architectures for Nonlinearity Mitigation of Optical Signals

We compare three neural network architectures for nonlinearity mitigation of 32-GBd OOK and QPSK signals after transmission over a dispersion-compensated link of 10-km SSMF and 10-km DCF. OSNR gains of up to 2.2 dB were achieved using...

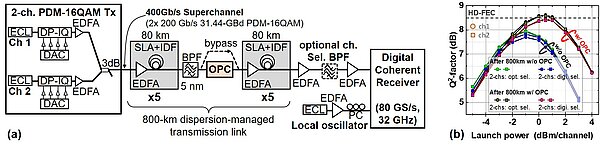

Experimental Study of In-line Nonlinearity Mitigation for a 400 Gb/s Dual-Carrier Superchannel with Joint Reception Using a Waveband-Shift-Free OPC

We experimentally study waveband-shift-free optical phase conjugation (OPC) of a 400-Gb/s dual-carrier superchannel and discuss the effectiveness of OPC for multi-channel nonlinearity mitigation. After joint reception of the 31.44-GBd PDM-16QAM...
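
The principle behind mid-link OPC can be shown with an idealized model; this is not the authors' waveband-shift-free implementation, which the abstract does not detail. The minimal sketch below places an ideal phase conjugator between two identical spans, each reduced to a quadratic spectral phase (chromatic dispersion only). Fiber nonlinearity, loss, and the conjugator's physics are deliberately left out, and all names and parameter values are hypothetical. Because conjugation mirrors the spectrum, the second span undoes the dispersion of the first, so the output equals the complex conjugate of the input.

```python
import cmath
import math


def dft(x: list[complex], inverse: bool = False) -> list[complex]:
    """Plain O(N^2) discrete Fourier transform (inverse includes the 1/N factor)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [
        sum(x[m] * cmath.exp(sign * 2j * math.pi * k * m / n) for m in range(n))
        for k in range(n)
    ]
    return [v / n for v in out] if inverse else out


def span(field: list[complex], phase_coeff: float) -> list[complex]:
    """Linear span model: multiply the spectrum by exp(j * phase_coeff * w^2),
    where w is the signed discrete frequency index (a stand-in for dispersion)."""
    n = len(field)
    spectrum = dft(field)
    for k in range(n):
        w = k if k < n // 2 else k - n
        spectrum[k] *= cmath.exp(1j * phase_coeff * w * w)
    return dft(spectrum, inverse=True)


def ideal_opc(field: list[complex]) -> list[complex]:
    """Ideal optical phase conjugation: E(t) -> E*(t)."""
    return [v.conjugate() for v in field]


if __name__ == "__main__":
    # Hypothetical QPSK-like test sequence (16 samples).
    tx = [complex(1 - 2 * (i % 2), 1 - 2 * ((i // 2) % 2)) for i in range(16)]
    one_span = span(tx, phase_coeff=0.3)
    rx = span(ideal_opc(one_span), phase_coeff=0.3)
    residual_no_opc = max(abs(a - b) for a, b in zip(one_span, tx))
    residual_opc = max(abs(a - b.conjugate()) for a, b in zip(rx, tx))
    print(f"max error after one span: {residual_no_opc:.3f}")
    print(f"max error after span-OPC-span (vs conj(tx)): {residual_opc:.1e}")
```

The hand-written DFT keeps the example dependency-free; a practical simulation would use an FFT library and a split-step model that also includes the Kerr nonlinearity that OPC is meant to mitigate.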

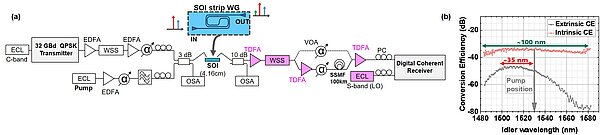

Ultra-Wideband All-optical Interband Wavelength Conversion Using a Low-complexity Dispersion-engineered SOI Waveguide

We experimentally demonstrate a low-complexity, dispersion-engineered all-optical wavelength converter using a photonic integrated circuit based on an SOI waveguide. We achieve a single-sided conversion bandwidth of ~35 nm from the C- to the S-band, and...

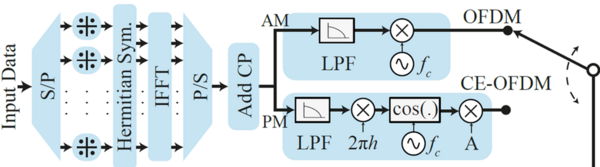

Increasing the reach of visible light communication links through constant-envelope OFDM signals

We demonstrate the transmission of constant-envelope orthogonal frequency division multiplexing (CE-OFDM) signals, based on electrical phase modulation, in a visible light communication (VLC) system. An increased tolerance to nonlinearity...
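
The constant-envelope property can be checked numerically. The minimal sketch below assumes a textbook complex-baseband CE-OFDM model rather than the authors' VLC setup. It builds a real-valued OFDM message (Hermitian-symmetric QPSK subcarriers), phase-modulates it as s[n] = exp(j·2πh·m[n]), and compares peak-to-average power ratios (PAPR). The modulation index h, the FFT size, and the helper names are hypothetical. In an intensity-modulated VLC link, the phase-modulated waveform would additionally have to be placed on an electrical carrier and DC-biased, which is not modeled here.

```python
import cmath
import math
import random


def real_ofdm_symbol(n_fft: int, rng: random.Random) -> list[float]:
    """One real OFDM symbol, normalized to unit RMS.

    QPSK on subcarriers 1..n_fft/2-1, mirrored as complex conjugates onto
    n_fft-1..n_fft/2+1 (Hermitian symmetry) so the IDFT is real-valued.
    """
    spectrum = [0j] * n_fft
    for k in range(1, n_fft // 2):
        sym = complex(rng.choice((-1, 1)), rng.choice((-1, 1)))
        spectrum[k] = sym
        spectrum[n_fft - k] = sym.conjugate()
    samples = [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n_fft)
            for k in range(n_fft)).real / n_fft
        for t in range(n_fft)
    ]
    rms = math.sqrt(sum(v * v for v in samples) / n_fft)
    return [v / rms for v in samples]


def ce_ofdm(message: list[float], h: float) -> list[complex]:
    """Phase modulation of the real OFDM message: s[n] = exp(j * 2*pi*h * m[n])."""
    return [cmath.exp(1j * 2 * math.pi * h * m) for m in message]


def ce_demod(signal: list[complex], h: float) -> list[float]:
    """Phase demodulation; valid only while |2*pi*h*m[n]| < pi (no phase wrapping)."""
    return [cmath.phase(s) / (2 * math.pi * h) for s in signal]


def papr_db(x) -> float:
    """Peak-to-average power ratio in dB."""
    powers = [abs(v) ** 2 for v in x]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))


if __name__ == "__main__":
    message = real_ofdm_symbol(64, random.Random(0))
    tx = ce_ofdm(message, h=0.05)
    print(f"PAPR of OFDM message: {papr_db(message):.1f} dB")
    print(f"PAPR of CE-OFDM signal: {papr_db(tx):.1f} dB")
    recovered = ce_demod(tx, h=0.05)
    print(f"max demodulation error: {max(abs(a - b) for a, b in zip(recovered, message)):.1e}")
```

With a unit-RMS message of 64 samples, |m[n]| can never exceed 8, so h = 0.05 keeps the phase below π and plain phase demodulation suffices; larger h would improve noise performance but require phase unwrapping.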

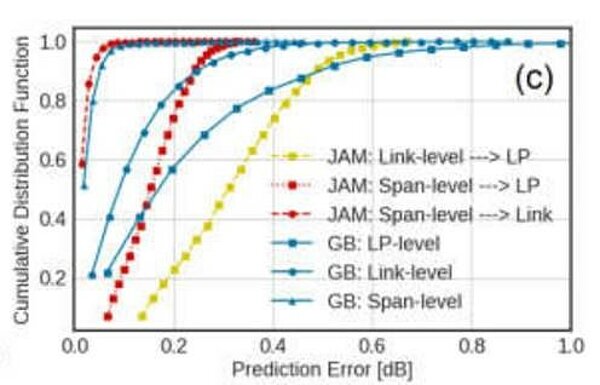

A Novel Approach for Joint Analytical and ML-assisted GSNR Estimation in Flexible Optical Network

We propose a novel approach to QoT estimation that jointly exploits machine learning and an analytical formula, offering accurate estimation when applied to scenarios with heterogeneous span profiles and sparsely occupied links...
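
On the analytical side of QoT estimation, the generalized SNR combines amplified spontaneous emission (ASE) noise and nonlinear interference (NLI) by adding noise powers in linear units: 1/GSNR = 1/SNR_ASE + 1/SNR_NLI per span, with inverse GSNRs adding again along a lightpath. The minimal sketch below assumes this textbook incoherent-accumulation model. How the proposed approach couples the formula with machine learning is not described in the abstract and is not modeled, and the per-span dB values are made up for illustration.

```python
import math


def db_to_lin(db: float) -> float:
    return 10 ** (db / 10)


def lin_to_db(x: float) -> float:
    return 10 * math.log10(x)


def span_gsnr_db(snr_ase_db: float, snr_nli_db: float) -> float:
    """Combine ASE and NLI contributions of one span: 1/GSNR = 1/SNR_ASE + 1/SNR_NLI."""
    return lin_to_db(1 / (1 / db_to_lin(snr_ase_db) + 1 / db_to_lin(snr_nli_db)))


def path_gsnr_db(span_gsnrs_db: list[float]) -> float:
    """Accumulate spans along a lightpath by summing inverse linear GSNRs."""
    return lin_to_db(1 / sum(1 / db_to_lin(g) for g in span_gsnrs_db))


if __name__ == "__main__":
    # Hypothetical heterogeneous spans: (SNR_ASE, SNR_NLI) in dB.
    spans = [(30.0, 32.0), (28.0, 31.0), (31.0, 33.0)]
    per_span = [span_gsnr_db(a, n) for a, n in spans]
    print("per-span GSNR [dB]:", [round(g, 2) for g in per_span])
    print(f"end-to-end GSNR: {path_gsnr_db(per_span):.2f} dB")
```

Working in linear units matters: two identical 20 dB spans give about 17 dB end to end, not 20 dB, because the noise powers add.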

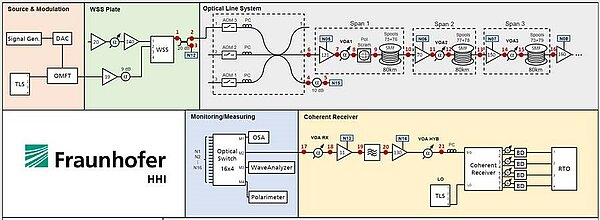

Automated Dataset Generation for QoT Estimation in Coherent Optical Communication Systems

We demonstrate sophisticated laboratory automation and a data pipeline capable of generating large, diverse, and high-quality public datasets. The demo covers the full workflow from setup reconfiguration to data monitoring and storage, represented...

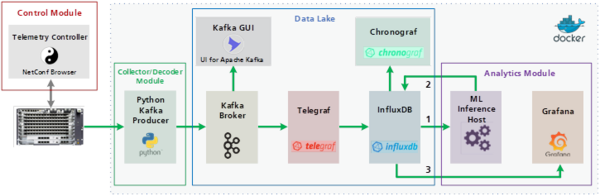

Demonstration of a Real-Time ML Pipeline for Traffic Forecasting in AI-Assisted F5G Optical Access Networks

We showcase a proof-of-concept demonstration of an ML pipeline for real-time traffic forecasting deployed on a passive optical access network using an XGS-PON-compatible telemetry framework. The demonstration reveals the benefits of fine-granular...

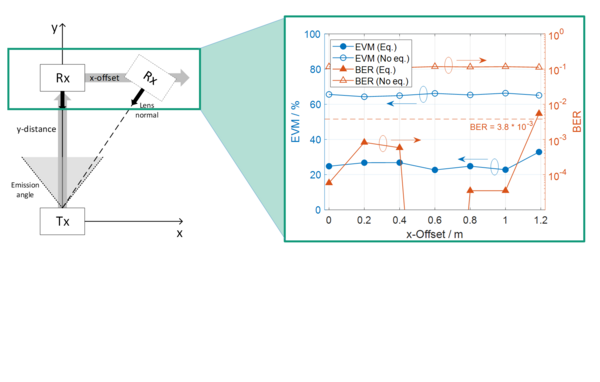

Demonstration of 1.75 Gbit/s VCSEL-Based Non-Directed Optical Wireless Communications With OOK and FDE

We evaluate a high-power on-off keying (OOK) transmitter for non-directed optical wireless communications based on VCSEL arrays. Error-free transmission after FEC with a net data rate of 1.75 Gbit/s is achieved across a distance of 2.5 m with a...