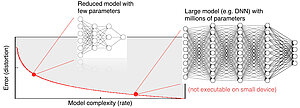

Deep neural networks are an invaluable prediction tool. However, their dependence on graphics processing units and their need to process large volumes of data preclude their use on mobile devices and embedded systems. In ReducedML, the reduction of machine learning models (especially deep neural networks) is explored theoretically, algorithmically, and practically. Specifically, superfluous model parameters are removed while preserving the generalization performance and robustness of the learning machines. The resulting model has higher computational efficiency and, of equal importance, its output is easier to analyze and interpret. For more information, consult Innovisions.
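To illustrate what removing superfluous parameters can look like in practice, the following is a minimal sketch of magnitude-based pruning, one common reduction technique. It is not necessarily the method developed in ReducedML. The function names `prune_by_magnitude` and `count_nonzero`, the `sparsity` parameter, and the toy weight matrix are all assumptions made for this example.

```python
def prune_by_magnitude(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude entries set to zero.

    `weights` is a list of rows (a toy stand-in for one layer's weight matrix);
    `sparsity` is the fraction of entries to remove, between 0 and 1.
    Both names are hypothetical and chosen for illustration only.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must lie in [0, 1]")

    # Work on a copy so the original layer is left untouched.
    pruned = [list(row) for row in weights]

    # Rank every entry by absolute value, remembering its position.
    ranked = sorted(
        (abs(w), i, j)
        for i, row in enumerate(pruned)
        for j, w in enumerate(row)
    )

    # Zero out the requested fraction, starting with the smallest magnitudes.
    n_prune = int(len(ranked) * sparsity)
    for _, i, j in ranked[:n_prune]:
        pruned[i][j] = 0.0
    return pruned


def count_nonzero(weights):
    """Count the parameters that survive pruning."""
    return sum(1 for row in weights for w in row if w != 0.0)


# Toy layer: three large weights and three near-zero ones (assumed values).
layer = [[0.80, -0.02, 0.01],
         [-0.65, 0.03, 0.90]]

pruned = prune_by_magnitude(layer, sparsity=0.5)
print(pruned)
print(count_nonzero(layer), "->", count_nonzero(pruned))
```

In a real setting the pruned model would be re-evaluated, and usually fine-tuned, to check that generalization performance and robustness are actually preserved. This sketch only shows the parameter-removal step.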

Project partner:

Max Planck Institute for Intelligent Systems

Publications:

Ehmann, C., Samek, W. (2018). Transferring information between neural networks. IEEE ICASSP, 2361–2365.

Sattler, F., Wiedemann, S., Müller, K.-R., Samek, W. (2018). Sparse binary compression: Towards distributed deep learning with minimal communication. Preprint at arXiv:1805.08768.

Wiedemann, S., Marban, A., Müller, K.-R., Samek, W. (2018). Entropy-constrained training of deep neural networks. Preprint at arXiv:1812.07520.

Wiedemann, S., Müller, K.-R., Samek, W. (2018). Compact and computationally efficient representation of deep neural networks. Preprint at arXiv:1805.10692.