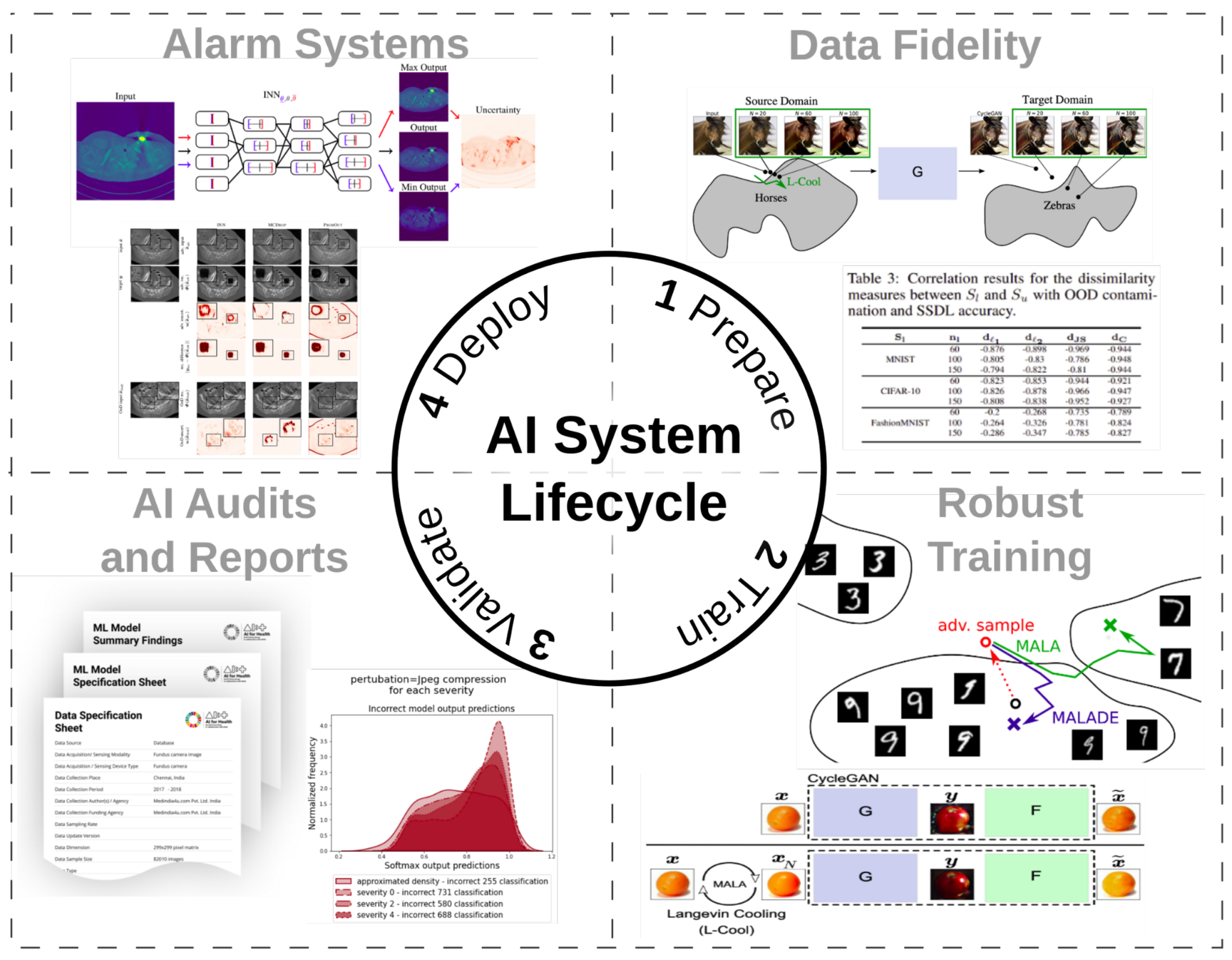

Modern AI systems based on deep learning, reinforcement learning or hybrids thereof constitute a flexible, complex and often opaque technology. Their development typically follows a common lifecycle: preparing the data and formulating the optimization problem, training the AI system, validating it and, potentially, deploying it under suitable monitoring.

Limits in our understanding of an AI system’s behavior at any of these lifecycle steps can create risks of system failure: How well does the AI system generalize to environments that differ from the training setting? Which environments should be selected for robustness tests? How confident are the AI system’s predictions? How can common failure modes be defined, detected and reported?

Along the entire lifecycle, we design methods, processes and standards for machine learning technology that can be trusted in real-world applications. We develop algorithms that improve the fidelity of input data [4] and the robustness of deep neural networks [5, 6, 7]. We lead initiatives in intergovernmental bodies such as the World Health Organization and the International Telecommunication Union to develop standards for AI validation and auditing [2, a, b, c]. Our group is also developing new methods, such as Interval Neural Networks [1, 3], that help identify failure modes during AI deployment.

The Fraunhofer Heinrich Hertz Institute (HHI), together with the TÜV Association and the Federal Office for Information Security (BSI), has published two jointly developed whitepapers. The first, published in May 2021 and entitled “Towards Auditable AI Systems: Current status and future directions”, outlines a roadmap for examining artificial intelligence (AI) models throughout their entire lifecycle. The second, published in May 2022 and entitled “Towards Auditable AI Systems: From Principles to Practice”, proposes employing a newly developed “Certification Readiness Matrix” (CRM) and presents its initial concept.

You can find a detailed list of our work in the references below. If you have questions or would like to learn about collaboration opportunities, such as research projects or student theses, please get in touch.

Publications

Conferences and Journals

- Jan Macdonald, Maximilian März, Luis Oala, and Wojciech Samek:

Interval Neural Networks as Instability Detectors for Image Reconstructions,

Bildverarbeitung für die Medizin - Algorithmen - System - Anwendungen, 2021

- Luis Oala, Jana Fehr, Luca Gilli, Pradeep Balachandran, Alixandro Werneck Leite, Saul Calderon-Ramirez, Danny Xie Li, Gabriel Nobis, Erick Alejandro Munoz Alvarado, Giovanna Jaramillo-Gutierrez, Christian Matek, Ferath Kherif, Bruno Sanguinetti, Thomas Wiegand:

ML4H Auditing: From Paper to Practice,

Proceedings of the Machine Learning for Health NeurIPS Workshop, virtual event, pp. 280-317, PMLR, 2020

- Luis Oala, Cosmas Heiß, Jan Macdonald, Maximilian März, Wojciech Samek, and Gitta Kutyniok:

Detecting Failure Modes in Image Reconstructions with Interval Neural Network Uncertainty,

Proceedings of the ICML'20 Workshop on Uncertainty & Robustness in Deep Learning, 2020

- Vignesh Srinivasan, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima:

Benign Examples: Imperceptible changes can enhance image translation performance,

Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI), 2020

- Vignesh Srinivasan, Ercan E. Kuruoglu, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima:

Black-Box Decision based Adversarial Attack with Symmetric α-stable Distribution,

Proceedings of the European Signal Processing Conference (EUSIPCO), 2019

- Vignesh Srinivasan, Arturo Marban, Klaus-Robert Müller, Wojciech Samek, Shinichi Nakajima:

Defense Against Adversarial Attacks by Langevin Dynamics,

ICML Workshop on Uncertainty & Robustness in Deep Learning, 2019

- Vignesh Srinivasan, Serhan Gül, Sebastian Bosse, Jan Timo Meyer, Thomas Schierl, Cornelius Hellge and Wojciech Samek:

On the robustness of action recognition methods in compressed and pixel domain,

Proceedings of the European Workshop on Visual Information Processing (EUVIP), Marseille, France, pp. 1-6, October 2016

- Saul Calderon-Ramirez and Luis Oala:

More Than Meets The Eye: Semi-supervised Learning Under Non-IID Data,

ICLR 2021 Workshop on Robust and Reliable Machine Learning in the Real World (RobustML), 2021

- Kurt Willis and Luis Oala:

Post-Hoc Domain Adaptation via Guided Data Homogenization,

ICLR 2021 Workshop on Robust and Reliable Machine Learning in the Real World (RobustML), 2021

Standardization

- Pradeep Balachandran, Federico Cabitza, Saul Calderon Ramirez, Alexandre Chiavegatto Filho, Fabian Eitel, Jérôme Extermann, Jana Fehr, Stephane Ghozzi, Luca Gilli, Giovanna Jaramillo-Gutierrez, Quist-Aphetsi Kester, Shalini Kurapati, Stefan Konigorski, Joachim Krois, Christoph Lippert, Jörg Martin, Alberto Merola, Andrew Murchison, Sebastian Niehaus, Luis Oala, Kerstin Ritter, Wojciech Samek, Bruno Sanguinetti, Anne Schwerk, Vignesh Srinivasan:

Data and artificial intelligence assessment methods (DAISAM) reference,

ITU/WHO FG-AI4H-I-035, ed. Luis Oala, Geneva, Switzerland, May 2020

- Christian Johner, Pradeep Balachandran, Peter G. Goldschmidt, Sven Piechottka, Andrew Murchison, Pat Baird, Zack Hornberger, Juliet Rumball-Smith, Christoph Molnar, Alixandro Werneck Leite, Aaron Y. Lee, Shan Xu, Anle Lin, Luis Oala:

Good practices for health applications of machine learning: Considerations for manufacturers and regulators,

ITU/WHO FG-AI4H-I-036, eds. Luis Oala, Christian Johner, Pradeep Balachandran, Geneva, Switzerland, May 2020

- Wojciech Samek, Vignesh Srinivasan, Luis Oala, Thomas Wiegand:

Robustness - Safety and reliability in AI4H,

ITU/WHO FG-AI4H-E-025, Geneva, Switzerland, May 2019