XAI methods aim to make the predictions of ML models transparent, e.g., so that expert users can validate predictions or so that failure modes can be identified. Local XAI provides interpretable feedback on individual predictions of the model and assesses the importance of input features w.r.t. specific samples. Global XAI, on the other hand, aims at obtaining a general understanding of a model’s sensitivities, learned features and concept encodings.

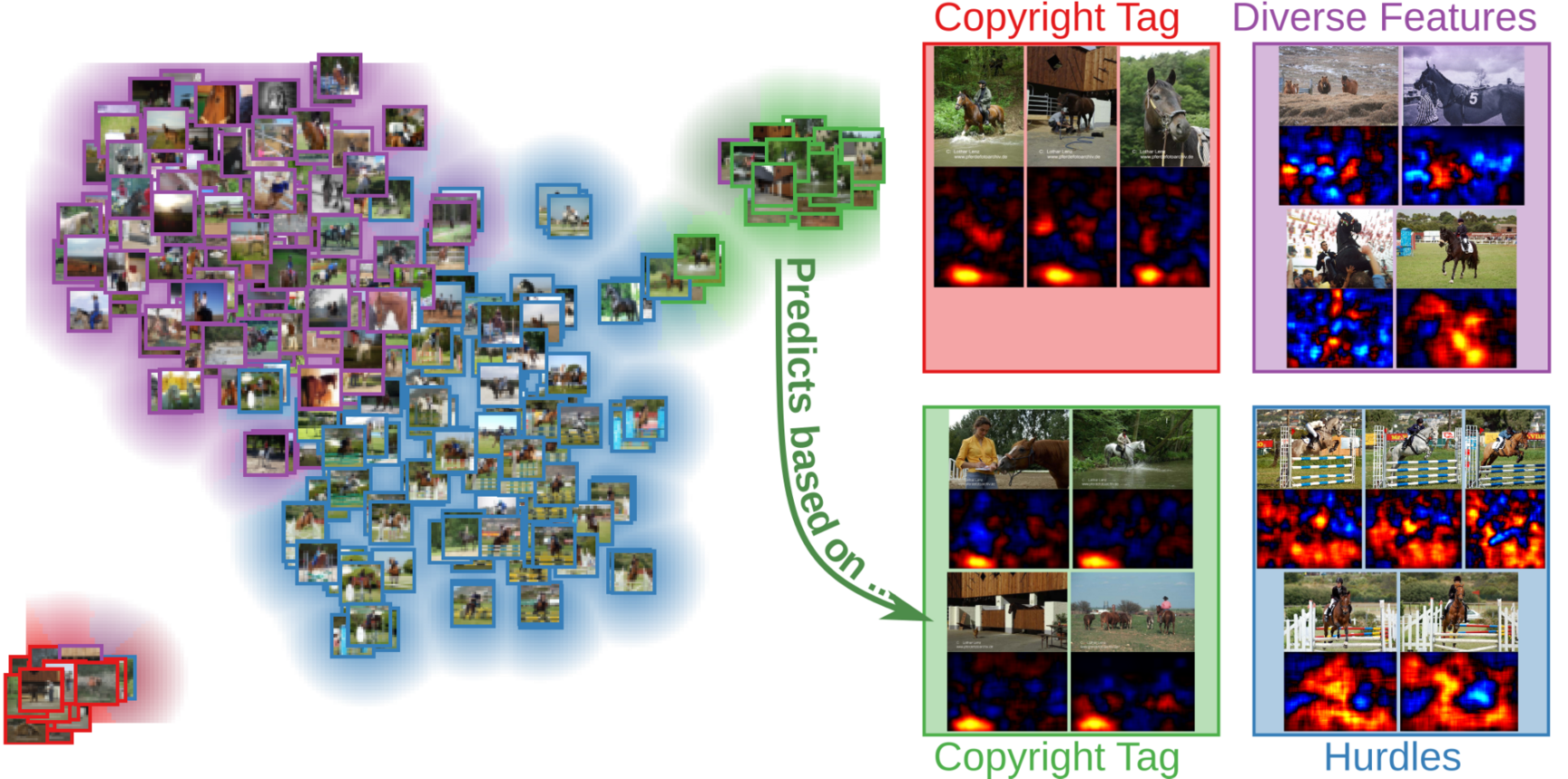

Both ends of the XAI spectrum suffer from a (human) investigator bias during analysis and are thus, on their own, of only limited use for finding and exploring biases, spurious correlations and errors learned by the model from the training data. Global XAI can only measure the impact of pre-determined or expected features or effects, which limits its capability of exploring unknown behavioral facets of a model. Local XAI, on the other hand, has the potential to provide much more detailed information per sample, but compiling information about model behavior over thousands (or even millions) of samples and explanations is a laborious process that presumes a deep understanding of the data domain. Our recent technique, Spectral Relevance Analysis (SpRAy), aims at bridging the gap between local and global XAI by introducing automation into the analysis of large sets of local explanations.
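To make the idea of automating the analysis of many local explanations more concrete, here is a minimal sketch. It assumes that the analysis follows a standard spectral clustering recipe: build a k-nearest-neighbour graph over flattened explanation heatmaps, inspect the eigenvalues of the normalized graph Laplacian, use the largest eigengap to guess the number of distinct prediction strategies, and group the samples in the spectral embedding. All function names (`knn_affinity`, `spray_sketch`, `make_toy_heatmaps`, ...) and parameter values are hypothetical, the heatmaps are synthetic stand-ins for real attributions, and the code is not the published implementation.

```python
import numpy as np


def knn_affinity(features: np.ndarray, k: int) -> np.ndarray:
    """Symmetric binary k-nearest-neighbour affinity matrix."""
    sq_dists = ((features[:, None, :] - features[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(sq_dists, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(sq_dists, axis=1)[:, :k]
    affinity = np.zeros_like(sq_dists)
    rows = np.repeat(np.arange(len(features)), k)
    affinity[rows, neighbours.ravel()] = 1.0
    # Connect i and j if either one is among the other's k nearest neighbours.
    return np.maximum(affinity, affinity.T)


def normalized_laplacian_spectrum(affinity: np.ndarray):
    """Eigenvalues (ascending) and eigenvectors of I - D^{-1/2} W D^{-1/2}."""
    inv_sqrt_degree = 1.0 / np.sqrt(affinity.sum(axis=1))
    laplacian = np.eye(len(affinity)) - (
        inv_sqrt_degree[:, None] * affinity * inv_sqrt_degree[None, :]
    )
    return np.linalg.eigh(laplacian)


def estimate_num_clusters(eigvals: np.ndarray, max_clusters: int = 5) -> int:
    """Eigengap heuristic: the largest jump among the smallest eigenvalues."""
    gaps = np.diff(eigvals[: max_clusters + 1])
    return int(np.argmax(gaps)) + 1


def kmeans(points: np.ndarray, n_clusters: int, n_iter: int = 20) -> np.ndarray:
    """Plain Lloyd iterations with deterministic farthest-point initialisation."""
    centres = [points[0]]
    for _ in range(1, n_clusters):
        dists = np.min([((points - c) ** 2).sum(axis=1) for c in centres], axis=0)
        centres.append(points[np.argmax(dists)])
    centres = np.array(centres)
    for _ in range(n_iter):
        sq_dists = ((points[:, None, :] - centres[None, :, :]) ** 2).sum(axis=-1)
        labels = sq_dists.argmin(axis=1)
        for j in range(n_clusters):
            if np.any(labels == j):
                centres[j] = points[labels == j].mean(axis=0)
    return labels


def spray_sketch(heatmaps: np.ndarray, k: int, max_clusters: int = 5):
    """Cluster explanation heatmaps; returns (labels, estimated cluster count)."""
    features = heatmaps.reshape(len(heatmaps), -1)
    eigvals, eigvecs = normalized_laplacian_spectrum(knn_affinity(features, k))
    n_clusters = estimate_num_clusters(eigvals, max_clusters)
    embedding = eigvecs[:, :n_clusters]
    norms = np.linalg.norm(embedding, axis=1, keepdims=True)
    embedding = embedding / np.maximum(norms, 1e-12)  # row-normalise
    return kmeans(embedding, n_clusters), n_clusters


def make_toy_heatmaps(n_per_group: int, rng: np.random.Generator, size: int = 8):
    """Synthetic heatmaps: relevance on a central 'object' or a corner 'watermark'."""
    object_map = np.zeros((size, size))
    object_map[3:5, 3:5] = 1.0
    watermark_map = np.zeros((size, size))
    watermark_map[6:8, 0:2] = 1.0
    templates = np.repeat(np.stack([object_map, watermark_map]), n_per_group, axis=0)
    return templates + rng.normal(0.0, 0.05, size=templates.shape)


# Toy usage: the first half of the samples attends to the object,
# the second half to the watermark.
rng = np.random.default_rng(0)
heatmaps = make_toy_heatmaps(n_per_group=10, rng=rng)
labels, n_clusters = spray_sketch(heatmaps, k=5)
print(n_clusters, labels)
```

In this toy setting a cluster whose heatmaps concentrate away from the object is the kind of group an investigator would inspect first. With real data the heatmaps would come from a local XAI method and would typically be brought to a common, smaller resolution before clustering.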

Using SpRAy, we found, for example, that a state-of-the-art machine learning model trained for image classification on the widely used PASCAL VOC benchmark dataset did not rely on the "horse" object present in the image to classify data points from this category, but instead on a spurious copyright watermark present in the images [1, 2] representing class "horse".

Similar insights have also been obtained on far larger, contemporary datasets, such as ImageNet [3], ISIC 2019 and others [4]. Being able to detect such undesirable model behavior has great practical implications: it enables stakeholders, for example, to rectify the model behavior efficiently and with high precision, and thus to minimize the model's vulnerability to malicious attacks [5].

Publications

- Christopher J. Anders, David Neumann, Talmaj Marinc, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin (2020):
  XAI for Analyzing and Unlearning Spurious Correlations in ImageNet,
  Vienna, Austria, ICML'20 Workshop on Extending Explainable AI Beyond Deep Models and Classifiers (XXAI), July 2020
- Sebastian Lapuschkin, Stephan Wäldchen, Alexander Binder, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller (2019):
  Unmasking Clever Hans Predictors and Assessing What Machines Really Learn,
  Nature Communications, vol. 10, London, UK, Nature Research, p. 1096, DOI: 10.1038/s41467-019-08987-4, March 2019
- Christopher J. Anders, Leander Weber, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin (2022):
  Finding and removing Clever Hans: Using explanation methods to debug and improve deep models,
  Information Fusion, Volume 77, Pages 261-295, ISSN 1566-2535, 2022