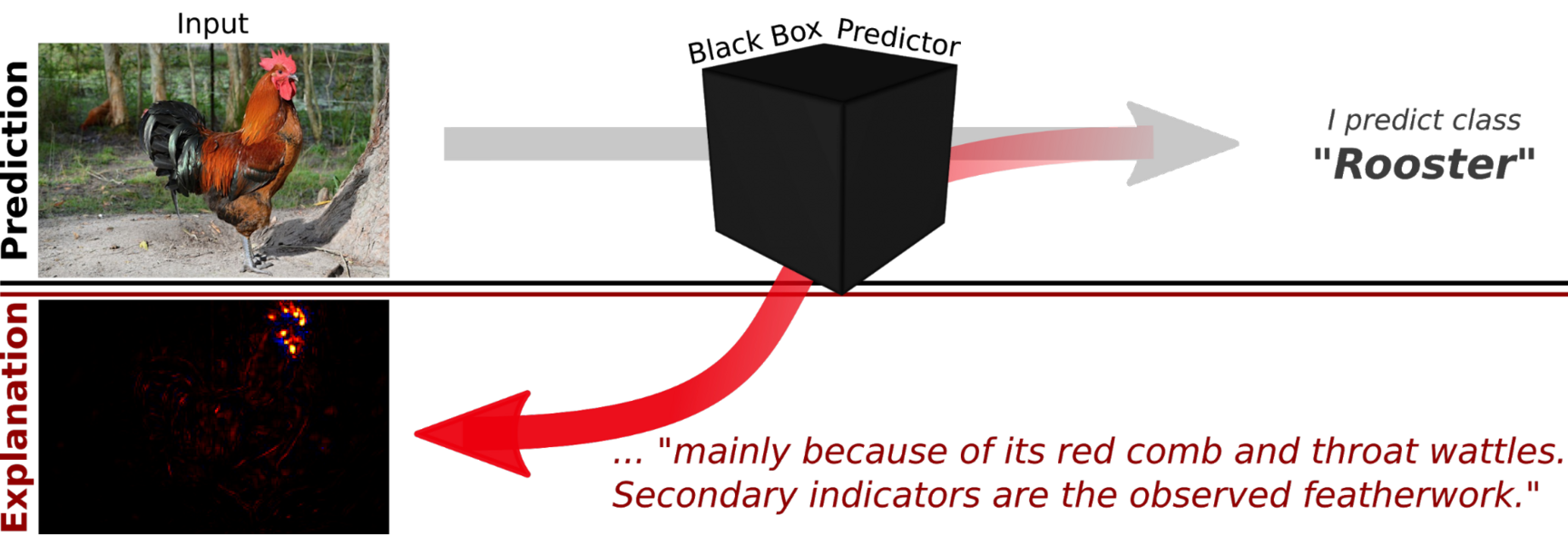

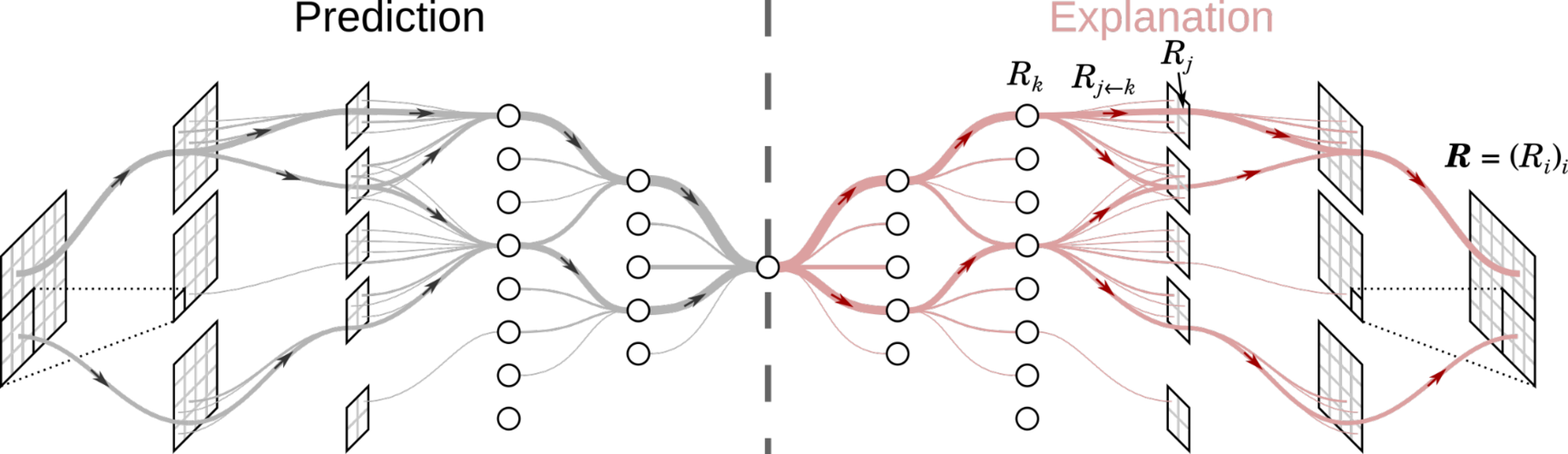

The research of the eXplainable AI group focuses on the algorithmic development of methods to understand and visualize the predictions of state-of-the-art AI models. In particular, our group, together with collaborators from TU Berlin and the University of Oslo, introduced in 2015 a new method called Layer-wise Relevance Propagation (LRP) [1] to explain the predictions of deep convolutional neural networks (CNNs) and kernel machines such as support vector machines (SVMs). This method has since been extended to other types of AI models, such as recurrent neural networks (RNNs) [2], one-class support vector machines [3], and k-means clustering [4]. LRP is based on a relevance conservation principle: it leverages the structure of the model to decompose the prediction into contributions of the input features, so that the total relevance is preserved as it is passed from layer to layer.
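As a hedged illustration of the conservation principle, consider the basic LRP-0 rule from the LRP literature. It redistributes the relevance $R_k$ of a neuron $k$ onto the neurons $j$ of the layer below in proportion to their contributions $a_j w_{jk}$ (activation times weight). The symbols $a_j$, $w_{jk}$ and $R$ are notation introduced here, and the sketch assumes the layer has no bias terms and nonzero denominators:

$$
R_j = \sum_k \frac{a_j w_{jk}}{\sum_{j'} a_{j'} w_{j'k}} \, R_k,
\qquad
\sum_j R_j = \sum_k \frac{\sum_j a_j w_{jk}}{\sum_{j'} a_{j'} w_{j'k}} \, R_k = \sum_k R_k .
$$

Applying this rule at every layer, starting from the output score $f(x)$, yields input-level relevances $R_i$ with $\sum_i R_i = f(x)$. Variants such as LRP-ε add a small stabilizer to the denominator, in which case conservation holds only approximately.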

First, a standard forward pass through the model is performed to obtain its prediction. Then, the model's output is propagated backward layer by layer, following the neural pathways involved in the final prediction, by applying specific LRP decomposition rules. The result is a heatmap indicating the contribution of individual input features (e.g., pixels) to the prediction. Such a heatmap can be computed for any of the model's possible outputs (e.g., any class), not only for the one actually predicted. LRP has a theoretical justification that can be grounded in Taylor decomposition [5]. Besides developing new explanation methods, our group also investigates quantitative methods to evaluate and validate explanations, e.g., via pixel-perturbation analysis [6] or controlled tasks with ground-truth annotations [7].
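The two-step procedure described above (a forward pass, then a layer-wise backward redistribution of the output) can be sketched in plain Python for a toy two-layer ReLU network. This is a minimal sketch under stated assumptions, not the group's implementation: the network, its made-up weights, the function names (`dense`, `lrp_epsilon`, `explain`, `perturbation_curve`) and the use of the LRP-ε rule at every layer are all hypothetical choices for illustration. Passing a different `target` explains another output, not only the predicted class. The last function is a simplified pixel-perturbation check that zeroes inputs in order of decreasing relevance and records how the target score drops; replacing removed features with zero is an assumption, and published perturbation protocols differ in how features are removed.

```python
"""Minimal LRP-epsilon sketch for a tiny two-layer ReLU network (pure Python).

Illustrative assumptions: dense layers store weights as weights[k][j]
(output neuron k, input neuron j), hidden units use ReLU, the output layer
is linear, and the LRP-epsilon rule is applied at every layer.
"""


def dense(a, weights, bias):
    """Pre-activations z_k = sum_j a_j * w_kj + b_k."""
    return [sum(a_j * w_kj for a_j, w_kj in zip(a, row)) + b_k
            for row, b_k in zip(weights, bias)]


def relu(z):
    return [max(0.0, v) for v in z]


def lrp_epsilon(a, weights, bias, relevance_out, eps=1e-6):
    """Redistribute relevance_out onto the inputs a of one dense layer.

    R_j = sum_k a_j * w_kj / (z_k + eps * sign(z_k)) * R_k, with sign(0)
    taken as +1 so the denominator is never exactly zero when eps > 0.
    """
    z = dense(a, weights, bias)
    relevance_in = [0.0] * len(a)
    for row, z_k, r_k in zip(weights, z, relevance_out):
        denom = z_k + eps if z_k >= 0 else z_k - eps
        for j, (a_j, w_kj) in enumerate(zip(a, row)):
            relevance_in[j] += a_j * w_kj / denom * r_k
    return relevance_in


def forward(x, params):
    w1, b1, w2, b2 = params
    hidden = relu(dense(x, w1, b1))
    return hidden, dense(hidden, w2, b2)


def explain(x, params, target):
    """Forward pass, then propagate the score of output `target` back to x."""
    w1, b1, w2, b2 = params
    hidden, out = forward(x, params)
    # Start from the chosen output only; all other outputs get zero relevance.
    r_out = [out[k] if k == target else 0.0 for k in range(len(out))]
    r_hidden = lrp_epsilon(hidden, w2, b2, r_out)
    return lrp_epsilon(x, w1, b1, r_hidden), out


def perturbation_curve(x, params, relevance, target):
    """Zero out inputs in order of decreasing relevance, tracking the score."""
    x = list(x)
    scores = [forward(x, params)[1][target]]
    for j in sorted(range(len(x)), key=lambda i: relevance[i], reverse=True):
        x[j] = 0.0
        scores.append(forward(x, params)[1][target])
    return scores


# Toy network with zero biases, so relevance conservation holds up to eps.
W1 = [[1.0, -1.0, 0.5], [0.5, 1.0, -1.0], [-1.0, 0.2, 1.0]]
B1 = [0.0, 0.0, 0.0]
W2 = [[1.0, -0.5, 2.0], [0.3, 1.0, -1.0]]
B2 = [0.0, 0.0]
PARAMS = (W1, B1, W2, B2)
x = [1.0, 2.0, -1.0]

relevance, out = explain(x, PARAMS, target=1)
print("output scores:", out)
print("input relevance:", relevance, "sum:", sum(relevance))
print("perturbation curve:", perturbation_curve(x, PARAMS, relevance, target=1))
```

Because the toy biases are zero, the input relevances sum (up to ε) to the explained output score, which is the conservation property at work. A steep early drop in the perturbation curve suggests that the heatmap ranks the most influential features first.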

To see LRP in action across various data domains and problem scales, visit our interactive demos.

Further, LRP provides a reliable basis for semi-automated techniques that inspect explanations at large scale and identify undesirable behaviors of machine learning models (so-called Clever-Hans behaviors), such as Spectral Relevance Analysis [8]. The ultimate goal is to unlearn such behaviors [9] and thereby functionally clean the model.
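Spectral Relevance Analysis clusters many relevance heatmaps to expose groups of distinct prediction strategies, among which Clever-Hans behaviors may appear. The dependency-free sketch below covers only one ingredient of such a pipeline: estimating how many groups exist from the eigengap of a normalized graph Laplacian built from the heatmaps. The Gaussian affinity, the bandwidth `sigma`, the toy 4-pixel heatmaps and all function names are assumptions of this sketch, not the published method. The Jacobi eigenvalue solver only serves to avoid third-party packages; with NumPy, `numpy.linalg.eigvalsh` would do the same job.

```python
"""Eigengap step of a Spectral-Relevance-Analysis-style pipeline (sketch).

Assumptions: heatmaps are already downsized and flattened to equal-length
lists, affinities use a Gaussian kernel with a hand-picked bandwidth sigma,
every heatmap has non-negligible affinity to at least one other heatmap,
and the number of groups is read off the largest gap in the spectrum of the
symmetric normalized graph Laplacian.
"""
import math


def affinity(heatmaps, sigma):
    """Gaussian affinity matrix with a zero diagonal."""
    n = len(heatmaps)
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(heatmaps[i], heatmaps[j]))
                w[i][j] = math.exp(-d2 / (2 * sigma ** 2))
    return w


def normalized_laplacian(w):
    """L_sym = I - D^(-1/2) W D^(-1/2)."""
    n = len(w)
    deg = [sum(row) for row in w]
    return [[(1.0 if i == j else 0.0) - w[i][j] / math.sqrt(deg[i] * deg[j])
             for j in range(n)] for i in range(n)]


def jacobi_eigenvalues(a, tol=1e-12, max_sweeps=100):
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations."""
    n = len(a)
    a = [row[:] for row in a]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta ** 2 + 1))
                c = 1 / math.sqrt(t ** 2 + 1)
                s = t * c
                for k in range(n):  # A <- A J (update columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A (update rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


def estimate_num_clusters(heatmaps, sigma=0.5, max_k=None):
    """Return (k, eigenvalues), where k maximizes the gap eig[k] - eig[k-1]."""
    eig = jacobi_eigenvalues(normalized_laplacian(affinity(heatmaps, sigma)))
    limit = len(eig) - 1
    max_k = limit if max_k is None else min(max_k, limit)
    gaps = [eig[i + 1] - eig[i] for i in range(max_k)]
    return gaps.index(max(gaps)) + 1, eig


HEATMAPS = [
    [1.0, 1.0, 0.0, 0.0], [0.9, 1.1, 0.0, 0.0], [1.1, 0.9, 0.1, 0.0],  # left
    [0.0, 0.0, 1.0, 1.0], [0.0, 0.1, 0.9, 1.1], [0.0, 0.0, 1.1, 0.9],  # right
]
k, eigenvalues = estimate_num_clusters(HEATMAPS)
print("estimated number of strategies:", k)
print("smallest eigenvalues:", [round(v, 4) for v in eigenvalues[:3]])
```

A large gap after the k-th smallest eigenvalue suggests k well-separated groups of heatmaps. In a real analysis, one would then inspect the members of each group, for example a small, unexpected cluster whose relevance concentrates on spurious image regions.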

Publications

- Wojciech Samek, Leila Arras, Ahmed Osman, Grégoire Montavon, Klaus-Robert Müller (2021):

  Explaining the Decisions of Convolutional and Recurrent Neural Networks,

  In: Mathematical Aspects of Deep Learning, pp. 1-33, Cambridge University Press, Cambridge, UK

- Grégoire Montavon, Sebastian Lapuschkin, Alexander Binder, Wojciech Samek, Klaus-Robert Müller (2017):

  Explaining Nonlinear Classification Decisions with Deep Taylor Decomposition,

  Pattern Recognition, Elsevier Inc., vol. 65, pp. 211-222, DOI: 10.1016/j.patcog.2016.11.008, May 2017

- Leila Arras, José Arjona-Medina, Michael Widrich, Grégoire Montavon, Michael Gillhofer, Klaus-Robert Müller, Sepp Hochreiter, Wojciech Samek (2019):

  Explaining and Interpreting LSTMs,

  In: Lars Kai Hansen, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek, Andrea Vedaldi (eds.), Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, Lecture Notes in Computer Science, vol. 11700, pp. 211-238, Springer International Publishing, DOI: 10.1007/978-3-030-28954-6_11, August 2019

- Wojciech Samek, Thomas Wiegand, Klaus-Robert Müller (2018):

  Explainable Artificial Intelligence: Understanding, Visualizing and Interpreting Deep Learning Models,

  ITU Journal: ICT Discoveries, Special Issue 1: The Impact of Artificial Intelligence (AI) on Communication Networks and Services, vol. 1, no. 1, pp. 39-48, March 2018

- Leila Arras, Franziska Horn, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek (2017):

  "What is Relevant in a Text Document?": An Interpretable Machine Learning Approach,

  PLOS ONE, vol. 12, no. 8, p. e0181142, DOI: 10.1371/journal.pone.0181142, August 2017

- Wojciech Samek, Alexander Binder, Grégoire Montavon, Sebastian Lapuschkin, Klaus-Robert Müller (2017):

  Evaluating the visualization of what a Deep Neural Network has learned,

  IEEE Transactions on Neural Networks and Learning Systems, vol. 28, no. 11, pp. 2660-2673, DOI: 10.1109/tnnls.2016.2599820, November 2017

- Sebastian Bach, Alexander Binder, Grégoire Montavon, Frederick Klauschen, Klaus-Robert Müller, Wojciech Samek (2015):

  On Pixel-wise Explanations for Non-Linear Classifier Decisions by Layer-wise Relevance Propagation,

  PLOS ONE, vol. 10, no. 7, p. e0130140, DOI: 10.1371/journal.pone.0130140, July 2015

- Sebastian Lapuschkin, Alexander Binder, Grégoire Montavon, Klaus-Robert Müller, Wojciech Samek (2016):

  Analyzing Classifiers: Fisher Vectors and Deep Neural Networks,

  Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, Nevada, USA, pp. 2912-2920, DOI: 10.1109/cvpr.2016.318, June 2016

- Christopher J. Anders, Leander Weber, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin (2022):

  Finding and removing Clever Hans: Using explanation methods to debug and improve deep models,

  Information Fusion