In recent years, deep neural networks have become increasingly popular, as they achieve impressive performance across a growing variety of applications. These networks are usually trained on extremely large datasets, which often contain unnoticed artifacts and spurious correlations. Such correlations offer a seemingly easy shortcut in place of the far more complex relations the model is meant to learn. Consequently, the predictor may not learn a valid and fair strategy for the task at hand and may instead make biased decisions. Training and test data are usually drawn from the same data corpus and are therefore identically distributed, so the same biases are present in both sets. This leads to a severe overestimation of the model’s reported generalization ability.

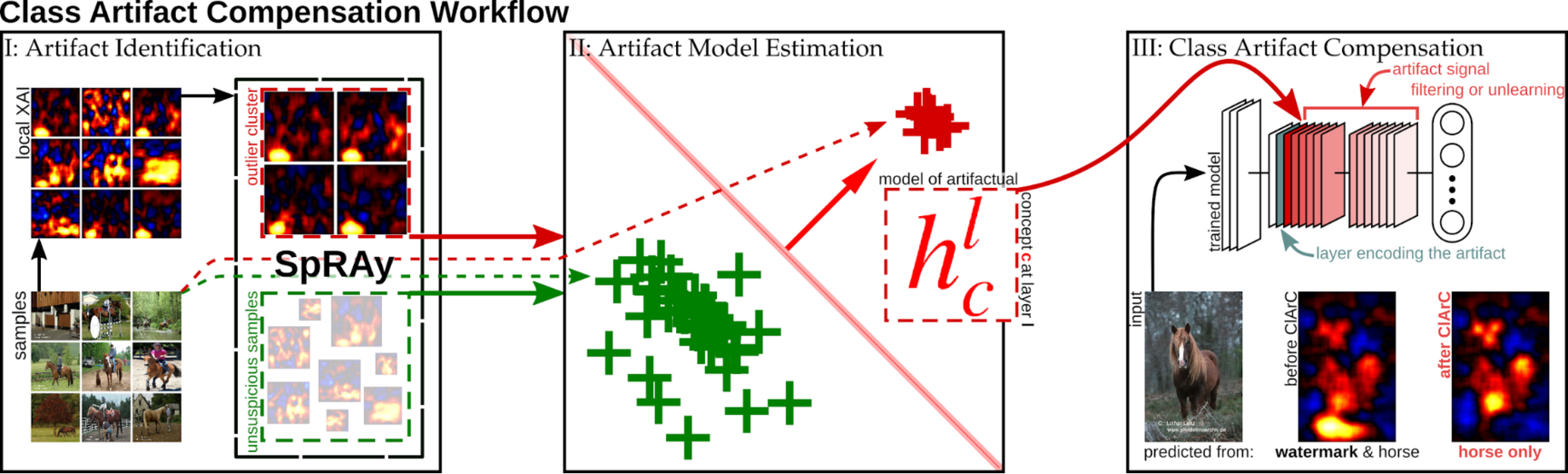

In our recent collaborative work with the Machine Learning Group of TU Berlin, we propose Class Artifact Compensation (ClArC), a tool for mitigating the influence of specific artifacts on a predictor’s decision-making. By doing so, ClArC enables a more accurate estimation of the predictor's generalization ability. ClArC is a fairly general three-step framework for artifact removal: (1) identifying artifacts, (2) estimating a model of a specific artifact, and (3) augmenting the predictor to compensate for that artifact.

In practice, we identify artifacts with our SpRAy (Spectral Relevance Analysis) method, which is based on Layer-wise Relevance Propagation (LRP) and can automatically analyze large sets of explanations. With different user objectives in mind, we developed several techniques for the artifact compensation step:
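SpRAy groups the explanation heatmaps of many samples by spectral clustering. A separate cluster of heatmaps that all highlight the same unexpected region (say, a watermark) is a candidate artifact. The NumPy sketch below is a simplified illustration under stated assumptions, not the published implementation. It assumes the heatmaps are precomputed (e.g. with LRP) and downsampled, and it uses invented synthetic data. The k-nearest-neighbour graph, the eigengap rule for picking the number of clusters, and all function names are illustrative choices.

```python
import numpy as np

def knn_affinity(X, k):
    """Symmetric binary k-nearest-neighbour graph over the rows of X."""
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)  # pairwise squared distances
    np.fill_diagonal(d2, np.inf)                          # no self-edges
    nn = np.argsort(d2, axis=1)[:, :k]                    # k nearest neighbours per row
    A = np.zeros_like(d2)
    A[np.repeat(np.arange(len(X)), k), nn.ravel()] = 1.0
    return np.maximum(A, A.T)                             # symmetrise

def laplacian_spectrum(X, k):
    """Eigen-decomposition of the symmetric normalised graph Laplacian."""
    A = knn_affinity(X, k)
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(1))
    L = np.eye(len(X)) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]
    return np.linalg.eigh(L)  # eigenvalues in ascending order

def kmeans(Z, n, iters=50):
    """Plain k-means with deterministic farthest-point initialisation."""
    C = [Z[0]]
    for _ in range(1, n):
        dist = np.min([((Z - c) ** 2).sum(1) for c in C], axis=0)
        C.append(Z[np.argmax(dist)])
    C = np.array(C)
    for _ in range(iters):
        labels = np.argmin(((Z[:, None, :] - C[None]) ** 2).sum(-1), axis=1)
        C = np.array([Z[labels == j].mean(0) if np.any(labels == j) else C[j]
                      for j in range(n)])
    return labels

def spray(heatmaps, k=8, max_clusters=8):
    """Cluster flattened heatmaps; the number of clusters is taken from the
    largest gap among the smallest Laplacian eigenvalues (eigengap heuristic)."""
    X = heatmaps.reshape(len(heatmaps), -1)
    eigvals, eigvecs = laplacian_spectrum(X, k)
    n = int(np.argmax(np.diff(eigvals[: max_clusters + 1]))) + 1
    Z = eigvecs[:, :n]
    Z = Z / np.linalg.norm(Z, axis=1, keepdims=True)      # row-normalised embedding
    return n, kmeans(Z, n), eigvals

# Synthetic "heatmaps" (assumption): half focus on the object in the centre,
# half on a watermark-like artifact in the top-left corner.
rng = np.random.default_rng(0)
clean = rng.normal(0.0, 0.1, (30, 8, 8))
clean[:, 3:5, 3:5] += 1.0
watermark = rng.normal(0.0, 0.1, (30, 8, 8))
watermark[:, :2, :2] += 1.0
n_clusters, labels, eigvals = spray(np.concatenate([clean, watermark]))
```

On this toy data the two groups form disconnected parts of the neighbour graph. The Laplacian therefore has two near-zero eigenvalues, and the eigengap rule should report two clusters, with the watermark heatmaps in a cluster of their own. On real data, the eigenvalue spectrum and the clusters are typically inspected by a human before a cluster is declared an artifact.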

- Augmentative ClArC [2], which fine-tunes a model on augmentations in feature space to shift its focus away from a specific confounder,

- Right Reason ClArC [3], which unlearns model biases by directly penalizing, via gradients, the model’s reliance on the artifact's direction in latent space, and which also allows for class-specific unlearning,

- Projective ClArC [2], which is extremely resource-efficient: it requires no retraining and instead mitigates the confounder’s influence by projecting activations in feature space during inference,

- Reactive ClArC [4], which applies Projective ClArC only when certain conditions are met, reducing the negative impact of feature-space projections on model performance.
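In the Augmentative and Projective variants, the artifact is modelled as a direction v in the latent space of some layer (a concept activation vector, CAV). Activations are then modified along that direction, roughly as x ↦ x − v vᵀx + v vᵀz. Here z is the mean activation of artifact samples for Augmentative ClArC, which uses this as a feature-space augmentation during fine-tuning. For Projective ClArC, applied at inference, z is the mean activation of artifact-free samples. The NumPy sketch below illustrates these operations on synthetic latent vectors and omits the fine-tuning itself. Estimating v as the normalised difference of the two class means is an assumption made for brevity; a linear classifier is another common choice. The reactive gate projects only when an activation's component along v exceeds a threshold. This gate is one hypothetical example of a condition, not necessarily the one used for Reactive ClArC, and all function names are illustrative.

```python
import numpy as np

def estimate_cav(acts_artifact, acts_clean):
    """Unit-norm artifact direction: normalised difference of means (assumption)."""
    v = acts_artifact.mean(0) - acts_clean.mean(0)
    return v / np.linalg.norm(v)

def clarc_project(acts, v, z):
    """Replace each row's component along v with that of reference z:
    h(x) = x - v v^T x + v v^T z."""
    return acts - np.outer(acts @ v, v) + (z @ v) * v

def a_clarc(acts, v, acts_artifact):
    """Augmentative: inject the artifact along v; the model would then be
    fine-tuned on these augmented activations (fine-tuning not shown)."""
    return clarc_project(acts, v, acts_artifact.mean(0))

def p_clarc(acts, v, acts_clean):
    """Projective: move activations to the artifact-free mean along v."""
    return clarc_project(acts, v, acts_clean.mean(0))

def r_clarc(acts, v, acts_clean, threshold):
    """Reactive (hypothetical condition): project only samples whose component
    along v, measured from the clean mean, exceeds `threshold`."""
    score = (acts - acts_clean.mean(0)) @ v
    gated = score > threshold
    out = acts.copy()
    out[gated] = p_clarc(acts[gated], v, acts_clean)
    return out, gated

# Synthetic latent activations: the artifact shifts dimension 0 (assumption).
rng = np.random.default_rng(0)
d = 16
shift = np.zeros(d)
shift[0] = 10.0
clean = rng.normal(0.0, 1.0, (200, d))
artifact = rng.normal(0.0, 1.0, (200, d)) + shift

v = estimate_cav(artifact, clean)
projected = p_clarc(artifact, v, clean)     # artifact suppressed along v
augmented = a_clarc(clean, v, artifact)     # artifact injected along v
mixed = np.concatenate([clean[:5], artifact[:5]])
reactive, gated = r_clarc(mixed, v, clean, threshold=5.0)
```

Only the component along v changes; everything orthogonal to it is left untouched. This explains both why the projection is cheap and why it can still hurt performance when task-relevant information shares that direction. That concern is what the conditional application of Reactive ClArC addresses: in this example, the clean rows of `mixed` pass through unchanged.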

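Right Reason ClArC instead adds a penalty to the training loss that discourages the model's output from being sensitive to the artifact direction v in latent space. The penalty is roughly λ·(∇ₐ f_y(a) · v)², where a is the latent activation and y a class whose bias should be unlearned. For a deep network this requires automatic differentiation. The NumPy sketch below sidesteps that by assuming a linear classification head on fixed latent features. For such a head, ∇ₐ f_y(a) is simply the weight row W_y, so the penalty and its gradient have closed forms. The `target_classes` argument hints at the class-specific variant. The data, λ, learning rate and function names are all illustrative assumptions. This is not the published implementation, which fine-tunes the full model.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_head(A, y, v, lam=0.0, target_classes=None, lr=0.05, steps=2000):
    """Gradient descent on mean cross-entropy + lam * sum_c (W_c . v)^2.

    For a linear head f_c(a) = W_c . a + b_c, the latent gradient of the
    logit is W_c, so the penalty (grad_a f_c . v)^2 equals (W_c . v)^2.
    `target_classes` restricts the penalty to selected classes."""
    n, d = A.shape
    C = int(y.max()) + 1
    W, b = np.zeros((C, d)), np.zeros(C)
    Y = np.eye(C)[y]                                # one-hot labels
    classes = range(C) if target_classes is None else target_classes
    for _ in range(steps):
        R = softmax(A @ W.T + b) - Y                # d(cross-entropy)/d(logits)
        gW, gb = R.T @ A / n, R.mean(axis=0)
        for c in classes:
            gW[c] += 2.0 * lam * (W[c] @ v) * v     # gradient of the penalty
        W -= lr * gW
        b -= lr * gb
    return W, b

def accuracy(W, b, A, y):
    return float(((A @ W.T + b).argmax(axis=1) == y).mean())

def make_data(rng, n, spurious):
    """Latent features [core, artifact]; the artifact tracks the label only
    if `spurious` is True (synthetic assumption)."""
    y = rng.integers(0, 2, n)
    s = 2 * y - 1
    core = s + rng.normal(0.0, 1.0, n)              # predictive but noisy
    art_signal = s if spurious else rng.choice([-1, 1], n)
    art = art_signal + rng.normal(0.0, 0.1, n)      # near-noiseless shortcut
    return np.stack([core, art], axis=1), y

rng = np.random.default_rng(0)
A_train, y_train = make_data(rng, 500, spurious=True)
A_test, y_test = make_data(rng, 2000, spurious=False)
v = np.array([0.0, 1.0])   # artifact direction, known here by construction

W_plain, b_plain = train_head(A_train, y_train, v, lam=0.0)
W_rr, b_rr = train_head(A_train, y_train, v, lam=10.0)
```

In this toy setup, the unpenalized head leans on the near-noiseless shortcut. With the penalty, the head's weight along v stays close to zero. On test data where the artifact no longer tracks the label, the penalized head therefore falls back on the noisy but genuine core feature.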
Publications

[1] Christopher J. Anders, David Neumann, Talmaj Marinč, Wojciech Samek, Klaus-Robert Müller, and Sebastian Lapuschkin. “XAI for Analyzing and Unlearning Spurious Correlations in ImageNet”. In: ICML 2020 Workshop XXAI: Extending Explainable AI Beyond Deep Models and Classifiers (July 17, 2020). Fraunhofer Institute for Telecommunications, Heinrich-Hertz-Institut, HHI et al. July 7, 2020. URL: http://interpretable-ml.org/icml2020workshop/pdf/11.pdf (visited on 09/18/2024). Workshop paper, not published in official proceedings.

[2] Christopher J. Anders, Leander Weber, David Neumann, Wojciech Samek, Klaus-Robert Müller, and Sebastian Lapuschkin. “Finding and removing Clever Hans. Using explanation methods to debug and improve deep models”. In: Information Fusion 77 (Aug. 3, 2021), pp. 261–295. ISSN: 1566-2535. DOI: 10.1016/j.inffus.2021.07.015.

[3] Maximilian Dreyer, Frederik Pahde, Christopher J. Anders, Wojciech Samek, and Sebastian Lapuschkin. “From Hope to Safety. Unlearning Biases of Deep Models via Gradient Penalization in Latent Space”. In: Proceedings of the AAAI Conference on Artificial Intelligence 38.19. The 38th Annual AAAI Conference on Artificial Intelligence (Vancouver, Canada, Feb. 20–27, 2024). AAAI Press, Mar. 24, 2024, pp. 21046–21046. ISBN: 978-1-57735-887-9. DOI: 10.1609/aaai.v38i19.30096.

[4] Dilyara Bareeva, Maximilian Dreyer, Frederik Pahde, Wojciech Samek, and Sebastian Lapuschkin. “Reactive Model Correction. Mitigating Harm to Task-Relevant Features via Conditional Bias Suppression”. In: 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) (Seattle, Washington, United States of America, June 17–18, 2024). IEEE Computer Society, Sept. 27, 2024, pp. 3532–3541. ISBN: 979-8-3503-6547-4. DOI: 10.1109/CVPRW63382.2024.00357.