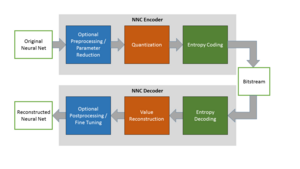

In 2022, the ISO/IEC MPEG standardization group released the new standard for Neural Network Coding (NNC), officially issued as ISO/IEC 15938-17:2022. NNC allows neural networks to be compressed to less than 5% of their original size without degrading inference capabilities, i.e. while maintaining the accuracy of the original uncompressed networks. NNC includes a set of preprocessing methods for neural networks, e.g. pruning, sparsification and low-rank decomposition. The main coding engine comprises efficient quantization methods and DeepCABAC as the arithmetic coding tool.

Fraunhofer HHI has also released NNCodec, an easy-to-use NNC software implementation. More information can be found here.

For the development of the NNC standard and the NNCodec software, our Video Coding Technologies Group cooperates with the Efficient Deep Learning Group within the ISO/IEC MPEG Video Standardization Group (ISO/IEC JTC1/SC29/WG04). We contributed significant parts of the core coding engine, including the DeepCABAC arithmetic coder and a number of quantization tools, such as uniform nearest-neighbor quantization and dependent quantization. Further tools include arithmetic coding optimization, batch norm folding and local scaling. We also developed and contributed a number of high-level syntax methods, such as tensor-wise decoding, random access, improved codebook signaling and parallel processing with enhanced CABAC optimization, as well as specific syntax for bitstream definition with external frameworks, namely TensorFlow, PyTorch, ONNX and NNEF.
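To make the idea of uniform nearest-neighbor quantization concrete, the following is a minimal Python sketch. It is an illustration of the general technique only, not the NNC reference implementation or the NNCodec API. The function names `quantize_uniform` and `dequantize_uniform`, the example weights and the step size are all assumptions made for this example.

```python
from typing import List


def quantize_uniform(weights: List[float], step_size: float) -> List[int]:
    """Map each weight to the integer index of the nearest multiple of step_size.

    Hypothetical helper for illustration; not part of NNC or NNCodec.
    """
    if step_size <= 0:
        raise ValueError("step_size must be positive")
    # Python's round() resolves exact ties to the nearest even integer.
    return [round(w / step_size) for w in weights]


def dequantize_uniform(indices: List[int], step_size: float) -> List[float]:
    """Reconstruct approximate weights from the integer indices."""
    return [q * step_size for q in indices]


# Example values chosen for illustration only.
weights = [0.31, -0.07, 0.002, -0.48, 0.12]
step_size = 0.05

indices = quantize_uniform(weights, step_size)
reconstructed = dequantize_uniform(indices, step_size)

# For nearest-neighbor rounding, the error per weight is at most step_size / 2.
max_error = max(abs(w - r) for w, r in zip(weights, reconstructed))
print(indices, max_error)
```

In a codec of this kind, the integer indices rather than the floating-point weights would be passed to the entropy coder. A larger step size yields fewer distinct indices and thus a smaller bitstream, at the cost of a larger reconstruction error.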

Since 2021, the work on Neural Network Coding has been extended to include efficient coding of incremental neural network update data, as used in distributed scenarios such as federated learning, where parameter-wise updates of neural networks are sent continuously. As a result, a second edition of the NNC standard, ISO/IEC 15938-17ed2, has been specified. For NNC ed2, compression efficiency was further increased by adding coding tools, e.g. structured sparsification and specific temporal adaptation in DeepCABAC. NNC ed2 addresses different application scenarios, including entire or partial neural network updates as well as federated, transfer and update learning with synchronous and asynchronous communication patterns.
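The notion of a parameter-wise update can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the method specified in NNC ed2: the helpers `compute_update` and `apply_update`, the parameter name `layer.weight` and the threshold are hypothetical, and the element-wise thresholding shown here is a plain unstructured sparsification rather than the structured sparsification tool of the standard.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def compute_update(base: Params, new: Params, threshold: float) -> Params:
    """Return the parameter-wise difference new - base.

    Differences with magnitude below `threshold` are set to zero, so that
    the update contains many zeros and is cheaper to code.
    Hypothetical helper for illustration; not part of NNC or NNCodec.
    """
    update: Params = {}
    for name, new_values in new.items():
        diffs = [n - b for n, b in zip(new_values, base[name])]
        update[name] = [d if abs(d) >= threshold else 0.0 for d in diffs]
    return update


def apply_update(base: Params, update: Params) -> Params:
    """Add a received update to the receiver's copy of the base model."""
    return {
        name: [b + d for b, d in zip(values, update[name])]
        for name, values in base.items()
    }


# Example values chosen for illustration only.
base_model = {"layer.weight": [0.5, -0.2, 0.1]}
new_model = {"layer.weight": [0.52, -0.2005, 0.3]}

update = compute_update(base_model, new_model, threshold=0.01)
restored = apply_update(base_model, update)
print(update, restored)
```

Only the update is transmitted, and the receiver adds it to its own copy of the base model. The second parameter changes by less than the threshold, so its update entry is zero and the receiver keeps the old value, which trades a small approximation error for a sparser update.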

Scientific Publications

- K. Müller, W. Samek, D. Marpe: „Ein internationaler KI-Standard zur Kompression Neuronaler Netze“, FKT - Fachzeitschrift für Fernsehen, Film und Elektronische Medien, pp. 33-36, Aug./Sept. 2021.
- H. Kirchhoffer, et al. "Overview of the Neural Network Compression and Representation (NNR) Standard", IEEE Transactions on Circuits and Systems for Video Technology, pp. 1-14, July 2021, doi: 10.1109/TCSVT.2021.3095970, Open Access
- P. Haase, H. Schwarz, H. Kirchhoffer, S. Wiedemann, T. Marinc, A. Marban, K. Müller, W. Samek, D. Marpe, T. Wiegand. “Dependent Scalar Quantization for Neural Network Compression.” International Conference on Image Processing 2020.
- S. Wiedemann et al., "DeepCABAC: A universal compression algorithm for deep neural networks," in IEEE Journal of Selected Topics in Signal Processing, doi: 10.1109/JSTSP.2020.2969554.
- S. Wiedemann, H. Kirchhoffer, S. Matlage, P. Haase, A. Marban, T. Marinc, D. Neumann, A. Osman, D. Marpe, H. Schwarz, T. Wiegand, and W. Samek, “DeepCABAC: context-adaptive binary arithmetic coding for deep neural network compression,” in Proceedings of the 36th International Conference on Machine Learning (ICML), Long Beach, California, US, June 2019.