Neural Network Coding

Efficient coding of neural networks has become an important topic for international standardization bodies such as ISO/IEC MPEG, which aim to provide billions of people with standardized neural network coding tools for fast and interoperable deep learning solutions.

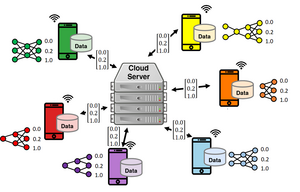

Federated Learning

Federated learning combines distributed learning across many devices with privacy protection for the individual participants. This is achieved through specific communication protocols designed so that private data never leaves the local device.
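To make the idea concrete, here is a minimal sketch of one widely used scheme, federated averaging, in plain Python. It is an illustration under stated assumptions, not the specific protocol referred to above: the tiny linear model, the function names (`local_update`, `federated_average`, `train`) and the toy data are all hypothetical. The point it shows is that only model parameters travel between clients and server, while each client's data stays in its own list.

```python
from typing import List, Tuple

Weights = Tuple[float, float]          # (slope w, intercept b) of y = w*x + b
Dataset = List[Tuple[float, float]]    # private (x, y) pairs held by one client


def local_update(weights: Weights, data: Dataset, lr: float = 0.1) -> Weights:
    """One gradient step on a client's private data (mean squared error)."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Server step: average client parameters, weighted by local dataset size."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


def train(client_data: List[Dataset], rounds: int = 300) -> Weights:
    """Run several communication rounds; the server never reads raw samples."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each client starts from the current global model and trains locally.
        updates = [local_update(global_weights, d) for d in client_data]
        sizes = [len(d) for d in client_data]
        # Only the updated parameters and dataset sizes reach the server.
        global_weights = federated_average(updates, sizes)
    return global_weights


# Toy data: two clients hold disjoint samples of the line y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]
w, b = train(clients)
```

In this sketch the server sees parameters and dataset sizes only. Real systems typically add further safeguards, such as secure aggregation or compression of the transmitted updates, which are left out here.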

Distillation and Architecture Evolution

In federated distillation systems, clients can train highly task-adaptive neural networks, even with individual topologies that differ from client to client, which goes beyond what classical federated learning systems support. This gives local participants considerably more data privacy and makes the overall network training more robust to corruption.
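The following sketch illustrates one common form of federated distillation, assuming that clients exchange class probabilities ("soft labels") computed on a shared public dataset instead of exchanging weights. The text above does not specify the protocol, so this choice, the two toy client models and the helper `aggregate_soft_labels` are assumptions made for illustration. Because only predictions are shared, the two clients can use entirely different model structures.

```python
import math
from typing import Callable, List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Two hypothetical clients with different model structures.
def client_a(x: float) -> List[float]:
    """Linear scorer over two classes."""
    return softmax([x, -x])


def client_b(x: float) -> List[float]:
    """Small nonlinear scorer over the same two classes."""
    h = math.tanh(2.0 * x)
    return softmax([3.0 * h, 0.0])


def aggregate_soft_labels(
    clients: List[Callable[[float], List[float]]],
    public_inputs: List[float],
) -> List[List[float]]:
    """Server step: average the clients' class probabilities per public input."""
    consensus = []
    for x in public_inputs:
        preds = [client(x) for client in clients]
        n_classes = len(preds[0])
        consensus.append(
            [sum(p[k] for p in preds) / len(preds) for k in range(n_classes)]
        )
    return consensus


# Shared, non-private inputs that every participant can evaluate.
public_inputs = [-1.0, 0.0, 1.0]
consensus = aggregate_soft_labels([client_a, client_b], public_inputs)
```

In a full system each client would then train its own network to match `consensus` on the public inputs, which is the distillation step. That step is omitted here to keep the sketch short.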

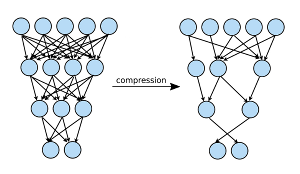

Neural Network Reduction and Optimization

State-of-the-art neural networks have millions of parameters and require extensive computational resources. Our research focuses on the development of techniques for complexity reduction, efficient coding and increased execution efficiency of these models.
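As a rough illustration of what complexity reduction and efficient coding can look like, the sketch below applies two generic techniques to a short list of weights: magnitude pruning followed by uniform quantization. These are standard textbook methods chosen for illustration, not necessarily the techniques developed in this research or those used in any standard. The function names, the step size and the example weights are hypothetical.

```python
from typing import List


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Set the `sparsity` fraction of weights with smallest magnitude to zero."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_uniform(weights: List[float], step: float) -> List[int]:
    """Map each weight to the nearest integer multiple of `step`."""
    return [round(w / step) for w in weights]


def dequantize(levels: List[int], step: float) -> List[float]:
    """Reconstruct approximate weights from integer levels."""
    return [q * step for q in levels]


# Hypothetical layer weights.
weights = [0.02, -0.75, 0.31, -0.04, 0.58, 0.01]

pruned = prune_by_magnitude(weights, sparsity=0.5)   # half the weights become 0
levels = quantize_uniform(pruned, step=0.25)         # small integers
restored = dequantize(levels, step=0.25)             # what inference would use
```

The resulting integer levels contain many zeros and only a few distinct values, which is the kind of redundancy that a subsequent entropy coder can exploit. The trade-off is a bounded reconstruction error of at most half the step size for each surviving weight.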