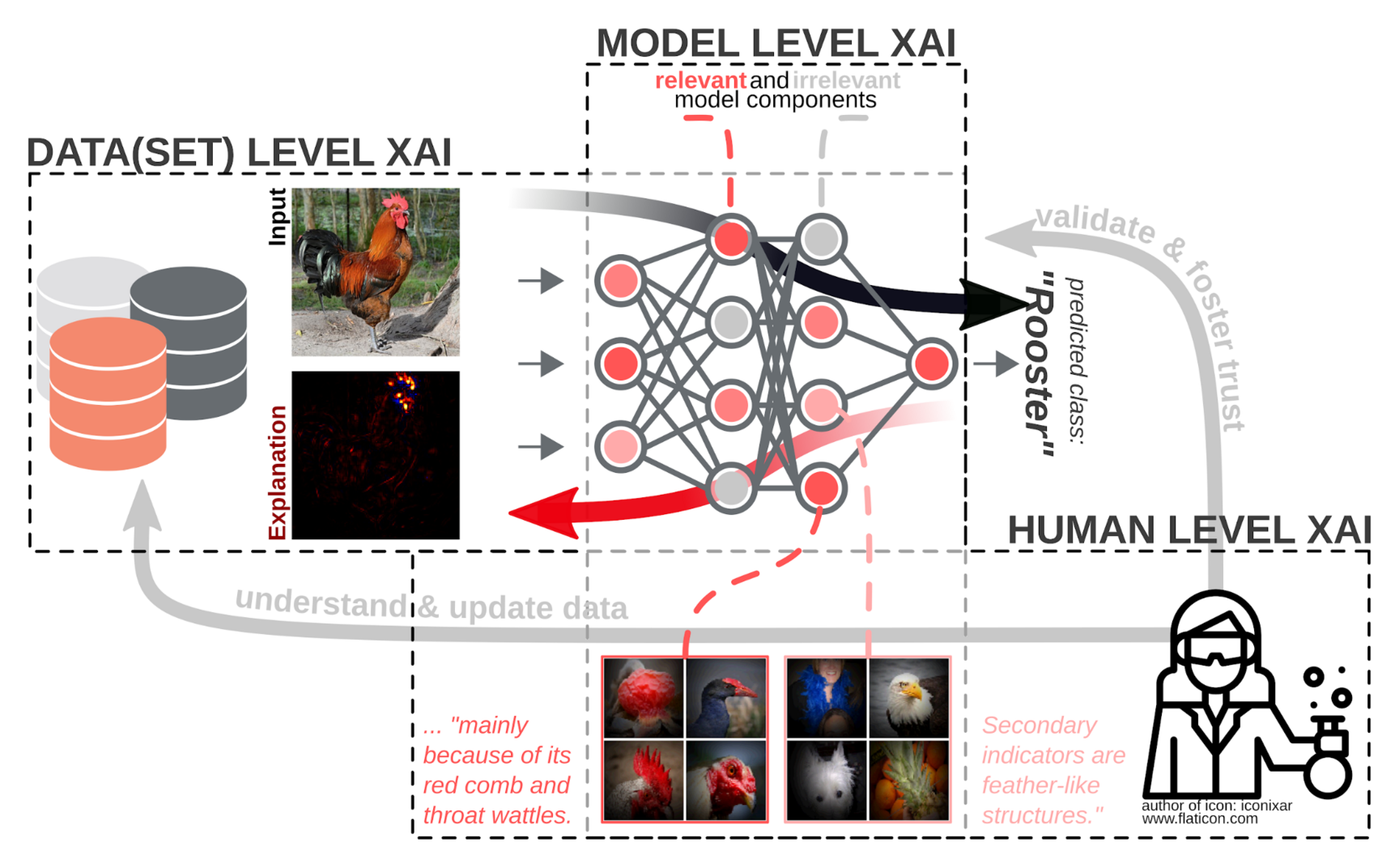

The current state of the art in eXplainable AI (XAI) in most cases only marks salient features in data space. That is, local XAI methods mark the features and feature dimensions that dominate a model's decision for an individual data point. Global XAI approaches, conversely, aim to identify and visualize the features in the data to which the model as a whole is most sensitive. Both approaches work well on, e.g., photographic images, where no specialized domain expertise is required to understand and interpret features. However, once domain expertise is lacking and the data itself becomes hard for humans to understand, or once the model's principle of information processing is not interpretable, both local and global XAI cease to be informative.
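
To make the local/global distinction concrete, the following is a minimal sketch, not the group's implementation. It uses Layer-wise Relevance Propagation (LRP) with the epsilon rule, one common local attribution technique, on a hypothetical two-layer ReLU network with random weights and no biases. `lrp_epsilon` returns one relevance score per input feature for a single data point (a local explanation). Averaging absolute relevances over a dataset is one simple, assumed proxy for a global view of which features the model as a whole relies on. All names, shapes, and weights are illustrative assumptions.

```python
import numpy as np

# Hypothetical toy model (an assumption, not from the source):
# 4 input features -> 3 hidden ReLU units -> 1 output score, no biases.
rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 3))
W2 = rng.normal(size=(3, 1))


def forward(x):
    """Return the hidden activations and the scalar output score for one input x."""
    h = np.maximum(0.0, x @ W1)
    return h, (h @ W2)[0]


def stabilize(s, eps=1e-6):
    """Add a small epsilon with the sign of s so no denominator is exactly zero."""
    return s + eps * np.where(s >= 0, 1.0, -1.0)


def lrp_epsilon(x):
    """Local explanation: redistribute the output score of this single x onto its
    input features with the LRP epsilon rule (one relevance value per feature)."""
    h, y = forward(x)
    # Output -> hidden: each hidden unit receives its share of the output score.
    z2 = h[:, None] * W2                                     # shape (3, 1)
    R_h = (z2 / stabilize(z2.sum(axis=0))) @ np.array([y])   # shape (3,)
    # Hidden -> input: the same rule applied one layer further down.
    z1 = x[:, None] * W1                                     # shape (4, 3)
    return (z1 / stabilize(z1.sum(axis=0))) @ R_h            # shape (4,)


# Local XAI: a relevance "heatmap" for one data point.
X = rng.normal(size=(100, 4))
local_relevance = lrp_epsilon(X[0])

# One simple global proxy (an assumption): mean absolute relevance over a dataset.
global_importance = np.mean(np.abs([lrp_epsilon(x) for x in X]), axis=0)
ranking = np.argsort(global_importance)[::-1]  # most to least relied-on feature
```

Because the toy network has no biases, the relevance scores of each local explanation sum, up to the small epsilon stabilizer, to the model output, which is the conservation property LRP is built around.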

The XAI group is actively developing solutions and tools that combine XAI on the data level, e.g., explanatory heatmaps over pixels, with model-level XAI that identifies the role and function of internal model components during inference. In this way, we are bringing local and global XAI closer to human-understandable, semantically enriched explanations that require significantly less expert knowledge about the data domain and the model's modus operandi. This, in turn, will be a game-changer in human-AI interaction for retrospective and prospective decision analysis.
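
The idea of linking data-level heatmaps to internal model components can be sketched in the spirit of Concept Relevance Propagation [3]. The following is a simplified, hypothetical illustration, not the published method. Each hidden unit of a toy two-layer ReLU network stands in for a "concept": its relevance `R_h` indicates how much that component contributed to the prediction (model level), and masking the relevance of all other hidden units before propagating further down yields a concept-conditional heatmap over the inputs (data level). The weights, network shape, and the function name `concept_relevance` are assumptions.

```python
import numpy as np

# Hypothetical toy model (an assumption, not from the source): each of the
# 3 hidden ReLU units plays the role of an internal "concept" component.
rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 3))  # input features -> hidden concept units
W2 = rng.normal(size=(3, 1))  # hidden concept units -> output score


def stabilize(s, eps=1e-6):
    """Add a small epsilon with the sign of s so no denominator is exactly zero."""
    return s + eps * np.where(s >= 0, 1.0, -1.0)


def concept_relevance(x, concept_mask=None):
    """Return (relevance per hidden unit, input heatmap) via the LRP epsilon rule.

    If concept_mask is given, only the relevance of the selected hidden units is
    propagated further down, giving a concept-conditional input heatmap."""
    h = np.maximum(0.0, x @ W1)
    y = (h @ W2)[0]
    z2 = h[:, None] * W2
    R_h = (z2 / stabilize(z2.sum(axis=0))) @ np.array([y])   # model-level view
    R_cond = R_h if concept_mask is None else R_h * concept_mask
    z1 = x[:, None] * W1
    R_x = (z1 / stabilize(z1.sum(axis=0))) @ R_cond          # data-level view
    return R_h, R_x


x = rng.normal(size=4)
R_h, full_heatmap = concept_relevance(x)
top_concept = int(np.argmax(np.abs(R_h)))  # most relevant internal component
# One conditional heatmap per concept: which inputs drive concept c's contribution?
conditional = [concept_relevance(x, np.eye(3)[c])[1] for c in range(3)]
```

Since the relevance redistribution is linear in the relevance it receives, the conditional heatmaps of all hidden units add up to the unconditional heatmap, so each concept accounts for an identifiable share of the full explanation.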

Publications

[1] Vignesh Srinivasan, Sebastian Lapuschkin, Cornelius Hellge, Klaus-Robert Müller, and Wojciech Samek. “Interpretable human action recognition in compressed domain”. In: Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2017) (New Orleans, Louisiana, USA, Mar. 5–9, 2017). IEEE (June 19, 2017), pp. 1692–1696. ISBN: 978-1-5090-4117-6. DOI: 10.1109/ICASSP.2017.7952445.

[2] Sebastian Lapuschkin, Stephan Wäldchen, Alexander Binder, Grégoire Montavon, Wojciech Samek, and Klaus-Robert Müller. “Unmasking Clever Hans predictors and assessing what machines really learn”. In: Nature Communications 10.1, 1096 (Mar. 11, 2019), pp. 1–8. ISSN: 2041-1723. DOI: 10.1038/s41467-019-08987-4.

[3] Reduan Achtibat, Maximilian Dreyer, Ilona Eisenbraun, Sebastian Bosse, Thomas Wiegand, Wojciech Samek, and Sebastian Lapuschkin. “From attribution maps to human-understandable explanations through Concept Relevance Propagation”. In: Nature Machine Intelligence 5.9 (Sept. 1, 2023), pp. 1006–1019. ISSN: 2522-5839. DOI: 10.1038/s42256-023-00711-8.