“Federated Learning is a machine learning setting where multiple entities collaborate in solving a learning problem, without directly exchanging data. The Federated training process is coordinated by a central server.”

Federated learning allows multiple parties to jointly train a neural network on their combined data without compromising the privacy of any of the participants. This is achieved by repeating multiple communication rounds of the following three-step protocol:

- First, the server selects a subset of the entire client population to participate in this communication round and communicates a common model initialization to these clients.

- Next, the selected clients compute an update to the model initialization using their private local data.

- Finally, the participating clients communicate their model updates back to the server, where they are aggregated (e.g., by averaging) into a new master model that serves as the initialization point for the next communication round.
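The three steps can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the implementation used in our work: the "model" is a single scalar weight of a hypothetical 1-D least-squares problem, clients run plain SGD on their local data, and the server averages the updates weighted by local dataset size (the weighting used by FedAvg). The names `local_update` and `federated_round` and their parameters are hypothetical.

```python
import random


def local_update(weights, data, lr=0.1, epochs=1):
    """Client side (hypothetical): plain SGD on a 1-D least-squares model
    y ~ w * x, using only this client's private (x, y) pairs."""
    w = weights
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w * x - y) ** 2
            w -= lr * grad
    return w - weights  # only the weight update leaves the device


def federated_round(global_w, clients, fraction=0.5, rng=random):
    """One communication round of the three-step protocol."""
    # Step 1: the server samples a subset of clients and sends them global_w.
    k = max(1, int(fraction * len(clients)))
    selected = rng.sample(clients, k)
    # Step 2: each selected client computes an update on its local data.
    updates = [local_update(global_w, data) for data in selected]
    # Step 3: the server averages the updates, weighted by local data size,
    # and applies the result to obtain the next global model.
    sizes = [len(data) for data in selected]
    avg = sum(n * u for n, u in zip(sizes, updates)) / sum(sizes)
    return global_w + avg


# Toy usage: ten clients whose private data all follow y = 3x.
rng = random.Random(0)
clients = [[(x, 3.0 * x) for x in (0.1 * i, 0.1 * i + 0.05)] for i in range(1, 11)]
w = 0.0
for _ in range(200):
    w = federated_round(w, clients, fraction=0.5, rng=rng)
print(round(w, 3))
```

Because every toy client shares the same target, repeated rounds drive `w` towards 3 even though the server never sees a single `(x, y)` pair; with heterogeneous client targets the averaged model would instead settle on a compromise.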

Since private data never leaves the local devices, federated learning already offers the participants a degree of privacy protection, and this protection can be turned into rigorous guarantees by applying encryption techniques to the communicated parameter updates or by concealing them with differentially private mechanisms.

In most federated learning applications, the training data on a given client is generated by the specific environment or usage pattern of that client's device or sensor. As a result, the distribution of data among the clients will usually be “non-iid”, meaning that any particular user’s local dataset is not representative of the overall distribution. The amount of local data is also typically unbalanced among clients, since different users make use of their device or a specific application to different extents. In many scenarios, the total number of clients participating in the optimization is much larger than the average number of training examples per client. This intrinsic heterogeneity of client data introduces new challenges for the design of (communication-efficient) training algorithms.
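To experiment with such heterogeneity, non-iid client data is often simulated by giving each client examples from only a few classes. The sketch below is one such scheme and is our assumption, not a procedure prescribed here: `label_skew_partition` and `classes_per_client` are hypothetical names, and the resulting shards are roughly balanced in size, so modelling unbalanced data would need an additional step.

```python
import random
from collections import defaultdict


def label_skew_partition(labels, n_clients, classes_per_client=2, seed=0):
    """Split example indices among clients so that every client only holds
    examples from `classes_per_client` classes (a simulated non-iid split)."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    classes = sorted(by_class)

    # Each client draws a few distinct classes at random.
    holders = defaultdict(list)  # class -> clients that hold it
    for client in range(n_clients):
        for y in rng.sample(classes, classes_per_client):
            holders[y].append(client)

    # Deal each class's examples round-robin among the clients holding it.
    parts = [[] for _ in range(n_clients)]
    for y in classes:
        owners = holders[y]
        if not owners:
            continue  # class drawn by no client: dropped in this sketch
        idxs = by_class[y][:]
        rng.shuffle(idxs)
        for j, idx in enumerate(idxs):
            parts[owners[j % len(owners)]].append(idx)
    return parts


labels = [i % 10 for i in range(1000)]  # toy labels: 10 classes, 100 each
parts = label_skew_partition(labels, n_clients=20)
print([sorted({labels[i] for i in p}) for p in parts[:3]])
```

Each client ends up with data from only two of the ten classes, so no single client's local dataset resembles the global label distribution.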

Communication Efficient Federated Learning

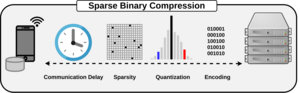

A major issue in federated learning is the massive communication overhead that arises from exchanging model updates. When naively following the federated learning protocol, every participating client has to download and upload a full model in every communication round. Every such update has the same size as the full model, which at 32 bits per parameter amounts to tens or hundreds of megabytes for modern architectures with millions of parameters. At the same time, mobile connections are often slow, expensive and unreliable, which aggravates the problem further. To address this, we proposed sparse binary compression (SBC), a compression framework that drastically reduces the communication cost of distributed training. SBC combines communication delay, gradient sparsification and quantization with optimal weight update encoding to maximize compression gains. The method also makes it possible to smoothly trade off gradient sparsity against temporal sparsity to adapt to the requirements of the learning task. Our experiments show that SBC can reduce upstream communication by more than four orders of magnitude without significantly harming convergence speed in terms of forward-backward passes.
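The sparsification and binarization idea can be illustrated on a flat list of update values. This is a simplified sketch based on the description above, and its details are our assumptions rather than the published reference implementation: the update is first added to a residual of previously untransmitted values, the top `p` fraction of positive and of negative entries are selected, only the set with the larger mean magnitude is kept with every kept entry replaced by that mean, and the positions are written as gaps with a Golomb-Rice code whose parameter `b` is chosen by hand. Communication delay (temporal sparsity) would correspond to calling the compressor only after several local iterations. All function names are hypothetical.

```python
def sparse_binary_compress(update, residual, p=0.01):
    """Sparsify and binarize a flat update (list of floats).

    Returns the sorted positions that are transmitted, the single value shared
    by all of them, and the new residual kept on the client."""
    acc = [u + r for u, r in zip(update, residual)]  # add untransmitted residual
    k = max(1, int(p * len(acc)))
    # Top-k largest positive and top-k most negative entries.
    pos = sorted((i for i, v in enumerate(acc) if v > 0), key=lambda i: -acc[i])[:k]
    neg = sorted((i for i, v in enumerate(acc) if v < 0), key=lambda i: acc[i])[:k]
    mu_pos = sum(acc[i] for i in pos) / len(pos) if pos else 0.0
    mu_neg = -sum(acc[i] for i in neg) / len(neg) if neg else 0.0
    # Keep only the side with the larger mean magnitude, binarized to its mean.
    if mu_pos >= mu_neg:
        idx, value = sorted(pos), mu_pos
    else:
        idx, value = sorted(neg), -mu_neg
    sent = [0.0] * len(acc)
    for i in idx:
        sent[i] = value
    new_residual = [a - s for a, s in zip(acc, sent)]  # error feedback
    return idx, value, new_residual


def rice_encode_gaps(positions, b):
    """Golomb-Rice code (divisor 2**b) of the run lengths between positions."""
    out = []
    prev = -1
    for i in positions:
        gap = i - prev - 1  # zeros skipped since the previous position
        prev = i
        q, r = gap >> b, gap & ((1 << b) - 1)
        out.append("1" * q + "0")  # quotient in unary
        if b > 0:
            out.append(format(r, "b").zfill(b))  # remainder in b bits
    return "".join(out)


update = [0.5, -0.1, 0.2, -0.9, 0.0, 0.3]
idx, value, res = sparse_binary_compress(update, [0.0] * len(update), p=0.34)
print(idx, value, rice_encode_gaps(idx, b=1))
```

In this sketch a client would transmit only the Rice-coded bit string of `idx` plus the single float `value`, and keep `res` locally so that the dropped values are added back in the next round.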

Data Heterogeneity and Personalization in Federated Learning

Federated Learning operates under the assumption that a single model can fit all clients’ data-generating distributions at the same time. In many situations, however, this approach yields sub-optimal results because of the data heterogeneity inherent to most Federated Learning tasks. Federated Multi-Task Learning (FMTL) addresses this problem by providing every client with a personalized model that optimally fits its local data distribution.
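A two-client toy problem shows why one shared model can be sub-optimal. Assume, purely for illustration, that client 1 has the loss f_1 and client 2 the loss f_2 with conflicting minimizers, and that conventional FL minimizes their average F:

```latex
\begin{aligned}
f_1(w) &= (w - 1)^2, \qquad f_2(w) = (w + 1)^2,\\
F(w) &= \tfrac{1}{2}\bigl(f_1(w) + f_2(w)\bigr) = w^2 + 1,\\
w_{\mathrm{FL}} &= \arg\min_w F(w) = 0 \;\Rightarrow\; f_1(w_{\mathrm{FL}}) = f_2(w_{\mathrm{FL}}) = 1,\\
w_1^* &= 1,\; w_2^* = -1 \;\Rightarrow\; f_1(w_1^*) = f_2(w_2^*) = 0.
\end{aligned}
```

The shared FL solution leaves each client with a loss of 1, while personalized models reach a loss of 0 on both clients.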

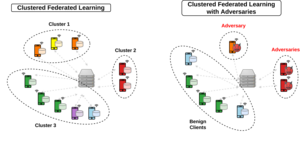

Clustered Federated Learning (CFL) is a Federated Multi-Task Learning framework that exploits geometric properties of the FL loss surface to group the client population into clusters with jointly trainable data distributions. In contrast to existing FMTL approaches, CFL does not require any modifications to the FL communication protocol, is applicable to general non-convex objectives (in particular deep neural networks) and comes with strong mathematical guarantees on the clustering quality. CFL is flexible enough to handle client populations that vary over time and can be implemented in a privacy-preserving way. As clustering is only performed after Federated Learning has converged to a stationary point, CFL can be viewed as a post-processing method that always performs at least as well as conventional FL, because it allows clients to arrive at more specialized models.
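The clustering step can be sketched as follows. This is an illustration under our own assumptions, not the reference implementation: client updates are flat lists of floats, similarity is the cosine between updates, the bipartition is found with complete-linkage agglomerative clustering stopped at two clusters, and a split is triggered when the averaged update is small (FL has reached a stationary point) while some individual update is still large, using hypothetical thresholds `eps1` and `eps2`.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def should_split(updates, eps1, eps2):
    """Split only if the averaged update is close to zero (FL has reached a
    stationary point) while some client updates are still large."""
    n, d = len(updates), len(updates[0])
    mean = [sum(u[k] for u in updates) / n for k in range(d)]
    mean_norm = math.sqrt(sum(m * m for m in mean))
    max_norm = max(math.sqrt(sum(x * x for x in u)) for u in updates)
    return mean_norm < eps1 and max_norm > eps2


def bipartition(updates):
    """Complete-linkage agglomerative clustering on pairwise cosine
    similarity, merging clusters until exactly two remain."""
    n = len(updates)
    sim = [[cosine(updates[i], updates[j]) for j in range(n)] for i in range(n)]
    clusters = [[i] for i in range(n)]
    while len(clusters) > 2:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Complete linkage: the least similar pair defines the link.
                link = min(sim[i][j] for i in clusters[a] for j in clusters[b])
                if best is None or link > best[0]:
                    best = (link, a, b)
        _, a, b = best
        clusters[a] += clusters[b]
        del clusters[b]
    return clusters


# Two groups of clients whose updates point in opposite directions.
updates = [[1.0, 0.1], [0.9, 0.0], [-1.0, -0.1], [-0.9, 0.05]]
if should_split(updates, eps1=0.1, eps2=0.5):
    print(bipartition(updates))
```

Here the averaged update nearly cancels out while each individual update is large, which is exactly the situation in which one shared model cannot serve both groups; in a full system the same check would presumably be repeated within each resulting cluster.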

Clustered Federated Learning can also be applied to “byzantine” settings, where a subset of the client population behaves unpredictably or tries to disrupt the joint training effort in a directed or undirected way. In these settings, CFL is able to reliably detect byzantine clients and remove them from training by separating them into different clusters.
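The separation of byzantine clients can be illustrated with a toy population. The assumptions in this sketch are ours: six benign clients whose updates point in a common direction, two hypothetical sign-flipping attackers, and an exhaustive search, feasible only for small populations, for the split whose largest cross-cluster cosine similarity is as small as possible.

```python
import math
import random


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def optimal_bipartition(updates):
    """Exhaustively search the split (c1, c2) that minimizes the largest
    cross-cluster cosine similarity. Cost grows as 2**n: small n only."""
    n = len(updates)
    sim = [[cosine(updates[i], updates[j]) for j in range(n)] for i in range(n)]
    best = None
    # Client 0 always sits in c1, so every split is visited exactly once;
    # the all-ones mask is skipped because it would leave c2 empty.
    for mask in range((1 << (n - 1)) - 1):
        c1 = [0] + [i for i in range(1, n) if (mask >> (i - 1)) & 1]
        c2 = [i for i in range(1, n) if not (mask >> (i - 1)) & 1]
        cross = max(sim[i][j] for i in c1 for j in c2)
        if best is None or cross < best[0]:
            best = (cross, c1, c2)
    return best[1], best[2]


def toy_population(seed=0):
    """Six benign clients near a shared direction, two sign-flipping attackers."""
    rng = random.Random(seed)
    d = [1.0, 2.0, -1.0, 0.5]
    benign = [[x + rng.gauss(0, 0.1) for x in d] for _ in range(6)]
    attackers = [[-x + rng.gauss(0, 0.1) for x in d] for _ in range(2)]
    return benign + attackers


c1, c2 = optimal_bipartition(toy_population())
print(c1, c2)  # benign clients 0-5 end up in c1, attackers 6 and 7 in c2
```

Since the attackers' updates are nearly anti-parallel to the benign ones, any split that mixes the two groups has a high cross-cluster similarity, so the minimizing split isolates the attackers.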

Scientific Publications

- F Sattler, S Wiedemann, KR Müller, W Samek, "Sparse Binary Compression: Towards Distributed Deep Learning with minimal Communication", Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN), 1-8, 2019.

- F Sattler, S Wiedemann, KR Müller, W Samek, "Robust and Communication-Efficient Federated Learning from Non-IID Data", IEEE Transactions on Neural Networks and Learning Systems, 2019.

- F Sattler, KR Müller, W Samek, "Clustered Federated Learning: Model-Agnostic Distributed Multi-Task Optimization under Privacy Constraints", IEEE Transactions on Neural Networks and Learning Systems, 2020.

- F Sattler, KR Müller, T Wiegand, W Samek, "On the Byzantine Robustness of Clustered Federated Learning", Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 8861-8865, 2020.

- F Sattler, T Wiegand, W Samek, "Trends and Advancements in Deep Neural Network Communication", ITU Journal: ICT Discoveries, 3(1), 2020.

- D Neumann, F Sattler, H Kirchhoffer, S Wiedemann, K Müller, H Schwarz, T Wiegand, D Marpe, W Samek, "DeepCABAC: Plug and Play Compression of Neural Network Weights and Weight Updates", Proceedings of the IEEE International Conference on Image Processing (ICIP), 2020.

- F Sattler, KR Müller, W Samek, "Clustered Federated Learning", Proceedings of the NeurIPS'19 Workshop on Federated Learning for Data Privacy and Confidentiality, 1-5, 2019.