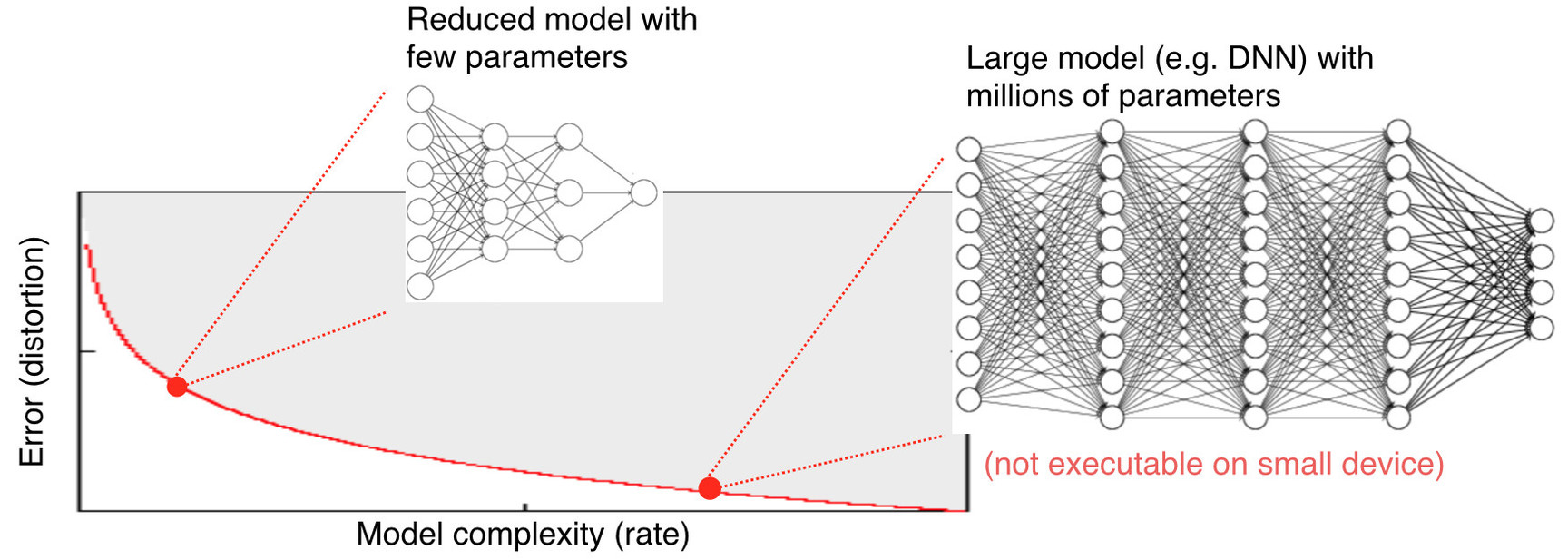

At the core of the relatively recent “AI revolution” are Deep Neural Networks (DNNs), which have achieved remarkable breakthroughs on a wide range of machine learning tasks such as image classification, speech recognition, object detection and natural language understanding. However, state-of-the-art DNN models usually have millions of connections, sometimes even billions, which makes them very resource-intensive. For instance, recent advances in natural language processing have produced GPT-3, a model with 175 billion parameters that was reportedly trained on a supercomputer with 285,000 CPU cores and 10,000 GPUs, at an estimated cost of roughly 12 million US dollars. Moreover, such models are prohibitively large for applications that require frequent communication or deployment on resource-constrained devices, such as autonomous cars, mobile phones, wearables, smart home devices and VR/AR glasses. A large number of applications on such embedded systems would become possible if the size of DNN models could be reduced easily, with little or no loss in performance.

Our research focuses on developing techniques that reduce the complexity of DNN models on all fronts: size, speed & energy consumption. We develop theoretical underpinnings that lead to practical algorithms which are able to significantly reduce the complexity of DNN models while minimally affecting the prediction performance of the models.

Our most recent research has focused on reducing the size of DNNs. Compressing DNNs directly reduces communication and storage costs, and smaller models can also execute more efficiently. This work has led to several publications at top conferences and in top journals [1-6]. The developed technology has also been submitted to ISO/IEC JTC1/SC29/WG04 (MPEG Video) and has been standardized, as described in the research topic on International Standardization. In collaboration with our colleagues from the Video Coding group, we developed several technologies, including DeepCABAC [1], a universal compression algorithm for deep neural networks that compresses networks to less than 5% of their original size without degrading their inference performance or accuracy.
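To give a feel for why quantization followed by entropy coding can shrink 32-bit weights to a few percent of their size, here is a minimal sketch. It is not DeepCABAC: it assumes plain uniform scalar quantization and uses the empirical (order-0) entropy of the quantized values as an idealized estimate of the bits per weight an entropy coder could reach. DeepCABAC's actual quantization and context-adaptive binary arithmetic coding are not reproduced here. The function names (`quantize`, `empirical_entropy_bits`, `estimated_compression_ratio`, `quantization_mse`) and the synthetic Gaussian weights are hypothetical illustrations.

```python
import math
import random
from collections import Counter


def quantize(weights, step):
    """Uniform scalar quantization: index of the nearest multiple of `step`.

    Exact ties are rounded to even, as Python's round() does.
    """
    return [round(w / step) for w in weights]


def empirical_entropy_bits(symbols):
    """Shannon entropy (bits/symbol) of the empirical symbol distribution.

    An ideal order-0 entropy coder approaches this rate. Context-adaptive
    coders can do better by exploiting dependencies, so this is only a
    rough, assumption-laden estimate.
    """
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def estimated_compression_ratio(weights, step, float_bits=32):
    """Estimated compressed size as a fraction of the float32 size."""
    return empirical_entropy_bits(quantize(weights, step)) / float_bits


def quantization_mse(weights, step):
    """Mean squared error between the weights and their reconstructions."""
    q = quantize(weights, step)
    return sum((w - s * step) ** 2 for w, s in zip(weights, q)) / len(weights)


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical stand-in for a trained layer: i.i.d. Gaussian weights.
    weights = [random.gauss(0.0, 0.05) for _ in range(100_000)]
    for step in (0.025, 0.05, 0.1):
        ratio = estimated_compression_ratio(weights, step)
        err = quantization_mse(weights, step)
        print(f"step={step}: ~{100 * ratio:.1f}% of float32 size, MSE={err:.2e}")
```

The step size trades size against fidelity: a coarser step concentrates the weights on fewer quantization levels, lowering the entropy (and thus the estimated size) while increasing the reconstruction error. Whether a given step leaves accuracy intact has to be checked on the actual network.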

Scientific Publications

- [1] D. Neumann, F. Sattler, H. Kirchhoffer, S. Wiedemann, K. Müller, H. Schwarz, T. Wiegand, D. Marpe, W. Samek, "DeepCABAC: Plug&Play Compression of Neural Network Weights and Weight Updates," IEEE International Conference on Image Processing (ICIP), 2020.

- [2] S. Wiedemann, T. Mehari, K. Kepp, W. Samek, "Dithered backprop: A sparse and quantized backpropagation algorithm for more efficient deep neural network training," Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2020, pp. 720-721.

- [3] A. Marban, D. Becking, S. Wiedemann, W. Samek, "Learning Sparse & Ternary Neural Networks with Entropy-Constrained Trained Ternarization (EC2T)," Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2020, pp. 722-723.

- [4] S. Wiedemann, K.-R. Müller, W. Samek, "Compact and Computationally Efficient Representation of Deep Neural Networks," IEEE Transactions on Neural Networks and Learning Systems, vol. 31, no. 3, pp. 772-785, March 2020, doi: 10.1109/TNNLS.2019.2910073.

- [5] S. Wiedemann, A. Marban, K. Müller, W. Samek, "Entropy-Constrained Training of Deep Neural Networks," 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 2019, pp. 1-8, doi: 10.1109/IJCNN.2019.8852119.

- [6] S.-K. Yeom, P. Seegerer, S. Lapuschkin, A. Binder, S. Wiedemann, K.-R. Müller, W. Samek, "Pruning by Explaining: A Novel Criterion for Deep Neural Network Pruning," Pattern Recognition, vol. 115, p. 107899, 2021, doi: 10.1016/j.patcog.2021.107899.