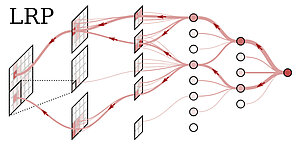

Layer-wise Relevance Propagation (LRP)

The research of the eXplainable AI group focuses on developing algorithms to understand and visualize the predictions of state-of-the-art AI models. In particular, in 2015 our group, together with collaborators from TU Berlin and the University of Oslo, introduced Layer-wise Relevance Propagation (LRP), a method to explain the predictions of deep convolutional neural networks (CNNs) and kernel machines (SVMs).
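To give a rough feel for how relevance propagation works, here is a minimal sketch of one commonly used LRP variant, the epsilon rule, applied to a tiny bias-free ReLU network. It is an illustration under stated assumptions, not the group's implementation: the function names `forward` and `lrp_epsilon`, the toy weights, and the input are all hypothetical.

```python
# Illustrative LRP-epsilon sketch on a tiny dense ReLU network without biases.
# All names and numbers are hypothetical; weights[l][i][j] connects input i to output j.

def forward(x, weights):
    """Return the activations of every layer (ReLU on hidden layers, linear output)."""
    activations = [x]
    for layer, W in enumerate(weights):
        z = [sum(a * w for a, w in zip(activations[-1], col)) for col in zip(*W)]
        if layer < len(weights) - 1:
            z = [max(0.0, v) for v in z]
        activations.append(z)
    return activations


def lrp_epsilon(activations, weights, target, eps=1e-9):
    """Redistribute the target output score backwards, layer by layer, to the inputs."""
    # Start with all relevance placed on the output neuron being explained.
    relevance = [v if k == target else 0.0 for k, v in enumerate(activations[-1])]
    for a, W in zip(reversed(activations[:-1]), reversed(weights)):
        # Pre-activations z_j = sum_i a_i * w_ij of the layer being traversed.
        z = [sum(ai * w for ai, w in zip(a, col)) for col in zip(*W)]
        # Epsilon-stabilised ratio R_j / z_j (eps keeps the denominator away from zero).
        s = [r / (zj + (eps if zj >= 0 else -eps)) for r, zj in zip(relevance, z)]
        # Each input i receives a share proportional to its contribution a_i * w_ij.
        relevance = [ai * sum(w * sj for w, sj in zip(row, s)) for ai, row in zip(a, W)]
    return relevance


x = [1.0, 2.0, -1.0]
weights = [
    [[0.5, -1.0], [0.25, 0.5], [-0.5, 1.0]],  # 3 inputs -> 2 hidden units
    [[1.0, -0.5], [0.5, 2.0]],                # 2 hidden units -> 2 outputs
]
activations = forward(x, weights)
relevance = lrp_epsilon(activations, weights, target=0)
print(relevance, sum(relevance), activations[-1][0])
```

In this bias-free setting, the input relevances sum (up to the small epsilon term) to the output score being explained, which is the conservation property that relevance propagation rules of this kind are designed around.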

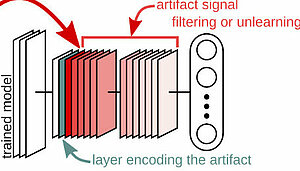

XAI Beyond Visualization

An obvious use case of XAI methods is the highlighting and visualization of relevant features in individual samples or datasets. The XAI group of Fraunhofer HHI is dedicated to putting XAI to use beyond mere visualization.

Human Understandable Explanations

When domain expertise is lacking, the data itself can become hard for humans to understand. Consequently, the way the model processes information is not interpretable, as both local and global XAI cease to be informative. The XAI group is actively developing solutions and tools to create human-understandable explanations of model behavior, regardless of a stakeholder's domain expertise.

Trustworthy Machine Learning

As predominantly data-driven approaches, deep neural networks (DNNs) are able to learn intricate patterns from the training data, which can later be exploited for inference on unseen data. There is, however, no guarantee that the extracted patterns result in the model solving its task "as expected" by its human designer. The (functional) opacity of DNNs effectively renders the model a "black box", so understanding the model's reasoning behind individual predictions is not immediately possible.

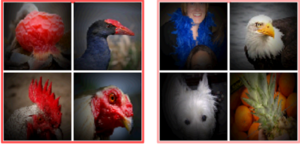

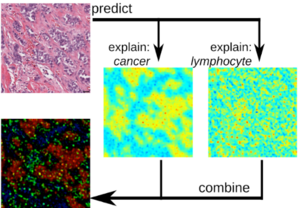

Applications of XAI

Our research on XAI has seen numerous applications in computer vision, time series prediction, and natural language processing. In addition, our XAI methods have been used to analyze various biomedical, medical, and scientific records.