An obvious use case of XAI methods is the highlighting and visualization of relevant features in individual samples or datasets. The XAI group of Fraunhofer HHI is dedicated to putting XAI to use beyond mere visualization. Our recent technical advances allow us to, e.g., efficiently explore machine learning models for modes of failure [1, 2] by inspecting whole datasets from the model’s point of view in seconds, using sample-based explanations as a mediator. We are thus able to characterize how AI models work beyond performance measures. Going one step further, the XAI group is actively developing techniques for rectifying misbehaving AI predictors, by unlearning specific prediction strategies or suppressing undesired features within the model itself [3].

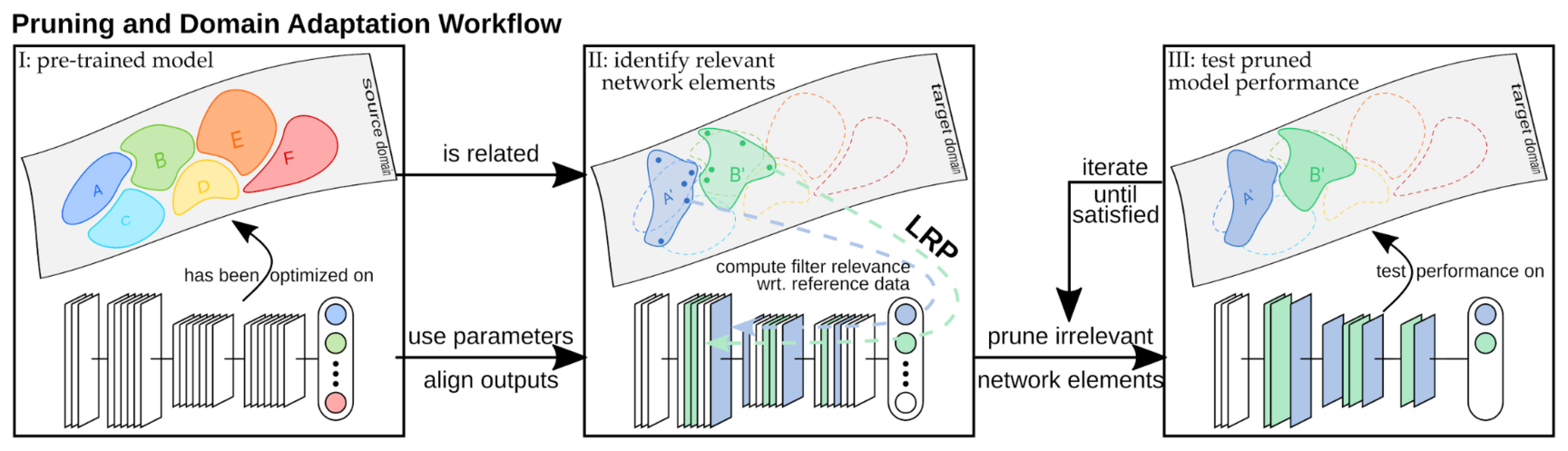

Another line of work employs XAI, specifically LRP, for the effective reduction of overparameterization in modern Deep Learning architectures, by robustly identifying relevant and irrelevant components of a learned predictor.

Our resulting XAI-based pruning solution enables a training-free transfer of pre-trained models to a different application domain, requiring only a small amount of reference data, while simultaneously increasing model efficiency [4]. In a similar spirit, other recent work from our group and the SUTD Singapore uses LRP to guide a few-shot-learning-based approach for domain adaptation, which demonstrably improves the generalization ability of the model [5].
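To make the idea of relevance-guided pruning more concrete, the following is a minimal sketch and not the published method: it assumes a tiny two-layer ReLU network, applies the LRP-epsilon rule to obtain one relevance score per hidden unit, sums the absolute relevance over a few reference samples, and keeps only the highest-scoring units. The function names, the absolute-value aggregation, and the toy weights are illustrative assumptions.

```python
import numpy as np

def lrp_hidden_relevance(x, W1, b1, W2, b2, target, eps=1e-6):
    """LRP-epsilon relevance of each hidden unit for the `target` logit."""
    a = np.maximum(0.0, x @ W1 + b1)       # hidden ReLU activations
    z = a * W2[:, target]                  # per-unit contribution to the logit
    logit = z.sum() + b2[target]
    # Stabilised denominator (epsilon keeps the division well defined)
    denom = logit + eps * (1.0 if logit >= 0 else -1.0)
    return z / denom * logit               # relevance redistributed to hidden units

def prune_by_relevance(X_ref, y_ref, W1, b1, W2, b2, keep):
    """Keep the `keep` hidden units with the largest accumulated |relevance|."""
    scores = np.zeros(W1.shape[1])
    for x, y in zip(X_ref, y_ref):
        # Assumption: absolute relevance, summed over reference samples
        scores += np.abs(lrp_hidden_relevance(x, W1, b1, W2, b2, y))
    kept = np.sort(np.argsort(scores)[-keep:])
    return W1[:, kept], b1[kept], W2[kept, :], b2, kept

# Hypothetical toy network: 3 inputs, 4 hidden units, 2 classes.
# Hidden unit 2 has no outgoing weights, so it never receives relevance.
W1 = np.array([[1.0, 0.0, 1.0, 0.5],
               [0.0, 1.0, 1.0, 0.5],
               [1.0, 1.0, 0.0, 0.5]])
b1 = np.zeros(4)
W2 = np.array([[1.0, -1.0],
               [-1.0, 1.0],
               [0.0, 0.0],
               [0.5, 0.5]])
b2 = np.zeros(2)

# A handful of reference samples with their class labels
X_ref = np.eye(3)
y_ref = np.array([0, 1, 0])

W1p, b1p, W2p, b2p, kept = prune_by_relevance(X_ref, y_ref, W1, b1, W2, b2, keep=3)
print("kept hidden units:", kept.tolist())
```

In this toy setting the irrelevant unit is removed without any retraining and the network output on the reference samples is unchanged; real architectures would need a full LRP implementation and a structured pruning step per layer.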

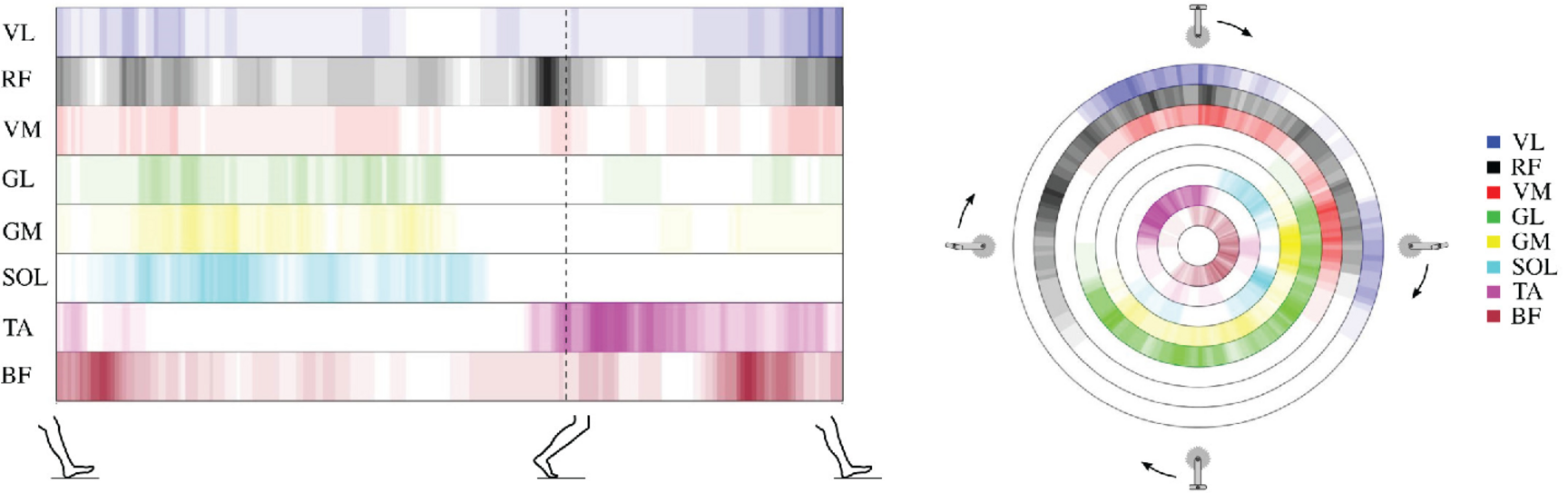

Combining the above examples of model-level and dataset-level XAI has resulted in another series of works from the domains of biomechanics [6, 7] and epidemiology [8]. These works use our XAI techniques to extract movement and muscle activation patterns that are unique to individual human subjects, and to identify (causal) combinations of disease-indicating causes as learned by AI models. The findings imply a strong potential for XAI in disease prevention and other clinical settings, and, e.g., in conjunction with exoskeletons and other wearable robotics, to highlight for each individual how they activate their muscles in a unique manner.

Publications

- Jiamei Sun, Sebastian Lapuschkin, Wojciech Samek, Yunqing Zhao, Ngai-Man Cheung, Alexander Binder (2021):
  Explanation-Guided Training for Cross-Domain Few-Shot Classification,
  Milan, Italy, 25th International Conference on Pattern Recognition (ICPR), January 2021

- Jeroen Aeles, Fabian Horst, Sebastian Lapuschkin, Lilian Lacourpaille, François Hug (2021):
  Revealing the Unique Features of Each Individual's Muscle Activation Signatures,
  Journal of the Royal Society Interface, vol. 18, no. 174, The Royal Society, p. 20200770, ISSN: 1742-5662, DOI: 10.1098/rsif.2020.0770, 13th January 2021

- Andreas Rieckmann, Piotr Dworzynski, Leila Arras, Sebastian Lapuschkin, Wojciech Samek, Onyebuchi A Arah, Naja H Rod, Claus T Ekstrøm:
  Causes of Outcome Learning: A causal inference-inspired machine learning approach to disentangling common combinations of potential causes of a health outcome,
  medRxiv, DOI: 10.1101/2020.12.10.20225243

- Christopher J. Anders, David Neumann, Talmaj Marinc, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin (2020):
  XAI for Analyzing and Unlearning Spurious Correlations in ImageNet,
  Vienna, Austria, ICML'20 Workshop on Extending Explainable AI Beyond Deep Models and Classifiers (XXAI), July 2020

- Sebastian Lapuschkin, Stephan Wäldchen, Alexander Binder, Grégoire Montavon, Wojciech Samek, Klaus-Robert Müller (2019):
  Unmasking Clever Hans Predictors and Assessing What Machines Really Learn,
  Nature Communications, vol. 10, London, UK, Nature Research, p. 1096, DOI: 10.1038/s41467-019-08987-4, March 2019

- Seul-Ki Yeom, Philipp Seegerer, Sebastian Lapuschkin, Alexander Binder, Simon Wiedemann, Klaus-Robert Müller, Wojciech Samek (2021):
  Pruning by Explaining: A Novel Criterion for Deep Neural Network Pruning,
  Pattern Recognition, vol. 115, p. 107899, DOI: 10.1016/j.patcog.2021.107899

- Fabian Horst, Sebastian Lapuschkin, Wojciech Samek, Klaus-Robert Müller, Wolfgang I. Schöllhorn (2019):
  Explaining the Unique Nature of Individual Gait Patterns with Deep Learning,
  Scientific Reports, Nature Research, vol. 9, London, UK, Springer Nature, p. 2391, DOI: 10.1038/s41598-019-38748-8, February 2019

- Christopher J. Anders, Leander Weber, David Neumann, Wojciech Samek, Klaus-Robert Müller, Sebastian Lapuschkin (2019):
  Finding and Removing Clever Hans: Using Explanation Methods to Debug and Improve Deep Models,
  CoRR abs/1912.11425

- Wojciech Samek, Grégoire Montavon, Sebastian Lapuschkin, Christopher J. Anders, Klaus-Robert Müller (2021):
  Explaining Deep Neural Networks and Beyond: A Review of Methods and Applications,
  Proceedings of the IEEE, vol. 109, no. 3, pp. 247-278, DOI: 10.1109/JPROC.2021.3060483, March 2021