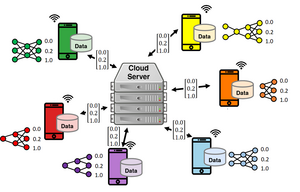

Federated distillation is a new approach that extends distributed neural network scenarios beyond joint training. It introduces fundamentally different communication properties: participating clients train individual networks instead of iterating on one global network. This becomes possible through a different type of distributed communication, in which only network outputs are exchanged between server and clients instead of full networks or network updates. This requires an unlabeled public data set that is available at both the central server and the participating clients.
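The exchange of network outputs on a shared public data set can be sketched roughly as follows. This is a minimal illustration, not the method of the cited publication: the helper names (`client_soft_labels`, `aggregate_soft_labels`), the two toy clients, and the choice of plain averaging as the server-side aggregation rule are all assumptions made for the example.

```python
import math

def softmax(logits):
    """Turn raw network outputs into class probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def client_soft_labels(predict_logits, public_data):
    """Client step: run the local network on every public sample."""
    return [softmax(predict_logits(x)) for x in public_data]

def aggregate_soft_labels(all_client_outputs):
    """Server step (assumed rule): average class probabilities over clients."""
    num_clients = len(all_client_outputs)
    num_samples = len(all_client_outputs[0])
    aggregated = []
    for i in range(num_samples):
        per_class = zip(*(outputs[i] for outputs in all_client_outputs))
        aggregated.append([sum(p) / num_clients for p in per_class])
    return aggregated

# Hypothetical clients: any callable that maps a sample to two logits.
# They stand in for local networks that may differ in topology.
def client_a(x):
    return [x, -x]

def client_b(x):
    return [2.0 * x, 0.0]

public_data = [-1.0, 0.0, 1.0]  # shared unlabeled public samples
outputs = [client_soft_labels(c, public_data) for c in (client_a, client_b)]
targets = aggregate_soft_labels(outputs)
# Each client would then train its own network on (public_data, targets).
```

Only `outputs` and `targets` cross the network in this sketch; the client models themselves never leave the clients.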

Federated distillation offers the following benefits:

1. Communication: Data transmission between federated distillation clients and the server is reduced to a fraction of the data volume needed for exchanging full networks, as only network outputs (labels) are exchanged.

2. Client flexibility: Clients train a local network version, which may even be a different network with a different topology, as long as the network output remains compliant with the federated learning scenario.

3. Client data privacy: Local clients train their own network version without exchanging the entire network parameter set, so that high-security data is not exchanged.

4. Error resilience: As server and clients only exchange network outputs, a corrupted client has only limited influence on the overall federated setting and can be detected more easily.
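To give a feeling for the communication benefit in item 1, the following back-of-the-envelope calculation compares the per-round upload of a full network with the upload of network outputs on the public data set. All numbers (parameter count, public data set size, number of classes, 4 bytes per value) are illustrative assumptions, not measurements from the publication below.

```python
BYTES_PER_VALUE = 4  # assuming 32-bit floats for weights and outputs

def full_network_bytes(num_parameters):
    """Upload size when a client sends its complete parameter set."""
    return num_parameters * BYTES_PER_VALUE

def network_output_bytes(num_public_samples, num_classes):
    """Upload size when a client sends one output vector per public sample."""
    return num_public_samples * num_classes * BYTES_PER_VALUE

# Hypothetical setting chosen only for illustration.
num_parameters = 11_000_000
num_public_samples = 10_000
num_classes = 10

weights = full_network_bytes(num_parameters)                      # 44,000,000 bytes
outputs = network_output_bytes(num_public_samples, num_classes)   # 400,000 bytes
reduction = weights / outputs
print(f"full network: {weights} B, outputs: {outputs} B, factor: {reduction:.0f}x")
```

Under these assumed numbers, the output exchange is about 110 times smaller. The actual factor depends on the network size, the public data set size, and any additional compression applied to either side.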

Privacy protection is supported by the robustness against corruption that results from the limited data exchange in federated distillation. Here, output labels are much more homogeneous, so a server can detect erroneous outliers more easily than in classical full-network federated averaging systems, where corrupted data is much harder to detect.
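One way a server could exploit this homogeneity is to compare each client's outputs against a robust reference and flag clients that deviate strongly. The sketch below is an assumption for illustration only: the function name `flag_outlier_clients`, the coordinate-wise median as reference, the mean L1 distance, and the threshold value are not taken from the source.

```python
from statistics import median

def flag_outlier_clients(client_outputs, threshold=0.5):
    """Return the names of clients whose outputs deviate from the median.

    client_outputs maps a client name to a list of per-sample
    probability vectors computed on the shared public data set.
    """
    names = list(client_outputs)
    num_samples = len(client_outputs[names[0]])
    num_classes = len(client_outputs[names[0]][0])

    # Coordinate-wise median over all clients serves as a robust reference.
    reference = [
        [median(client_outputs[n][i][c] for n in names) for c in range(num_classes)]
        for i in range(num_samples)
    ]

    flagged = []
    for n in names:
        # Mean L1 distance between this client's outputs and the reference.
        distance = sum(
            abs(client_outputs[n][i][c] - reference[i][c])
            for i in range(num_samples)
            for c in range(num_classes)
        ) / num_samples
        if distance > threshold:
            flagged.append(n)
    return flagged

# Hypothetical outputs of four clients on two public samples.
example_outputs = {
    "client_a": [[0.90, 0.10], [0.20, 0.80]],
    "client_b": [[0.80, 0.20], [0.10, 0.90]],
    "client_c": [[0.85, 0.15], [0.15, 0.85]],
    "corrupted": [[0.10, 0.90], [0.90, 0.10]],
}
flagged = flag_outlier_clients(example_outputs)
print(flagged)  # → ['corrupted']
```

The three consistent clients stay well below the threshold, while the client with inverted predictions is flagged. A comparable check on full parameter sets is harder, because weights of honestly trained networks can already differ widely.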

Federated distillation also builds a bridge towards novel, efficient federated learning scenarios, such as neural architecture evolution. Since clients in federated distillation can use individual networks, the specific network topology at a client may also change, so that the entire federated distillation application evolves its network architecture over time towards the most inference-efficient structure.

Scientific Publications

1. F. Sattler, A. Marban, R. Rischke, W. Samek. “Communication-Efficient Federated Distillation” CoRR abs/2012.00632, 2020.