Seeing, Modelling and Animating Humans

Realistic human modelling is a challenging task in computer vision and graphics. We investigate new methods for capturing and analyzing human bodies and faces in images and videos, and we develop new compact models for representing facial expressions, human bodies, and their motion. We combine model-based and image- and video-based representations with generative AI models and neural rendering.

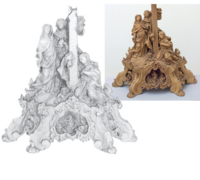

Scenes, Structure and Motion

We have a long tradition in 3D scene analysis and continue to pursue research in 3D capture and 3D reconstruction. This work ranges from highly detailed reconstruction of static objects and scenes from stereo and multi-view images, including those with complex surface and shape properties, through monocular shape-from-X methods, to the analysis of deforming objects in monocular video.

Computational Imaging and Video

We conduct research in video processing and computational video, opening up new opportunities for how dynamic scenes can be analyzed and how video footage can be represented, edited, and seamlessly augmented with new content.

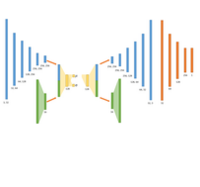

Learning and Inference

Our research combines computer vision, computer graphics, and machine learning to understand image and video data. We focus on pairing deep learning with strong models or physical constraints to gain the advantages of both model-based and data-driven methods.

Augmented and Mixed Reality

Our experience in tracking dynamic scenes and objects, together with photorealistic rendering, enables new augmented reality solutions in which virtual content is seamlessly blended into real video footage, with applications in areas such as multimedia, industry, and medicine.

Previous Research Projects

Over the years, we have carried out a range of research projects in the fields above.