Introduction

Automatic traffic analysis has advanced at an unprecedented rate in the last few years, with the autonomous driving industry championing this technological revolution. Enormous progress has been made in terms of both accuracy and efficiency. The scientific community has devoted a great deal of attention to this topic, which is also reflected in the ever-growing amount of annotated data available in the field.

Traffic Analysis

Traffic analysis involves understanding the current state of the traffic and the set of rules that apply to it. To achieve this, it is necessary to identify all the relevant participating actors and determine their states, including for example their location and velocity. There are different ways to obtain this information automatically through computer-based systems. Many types of sensors, such as cameras or LIDAR, can be used and possibly combined with each other to this end. To extract meaningful information from the data provided by these sensors, signal processing algorithms are required. Emerging deep-learning-based approaches have proven far superior to traditional artificial intelligence algorithms in many of these complex tasks, enabling the aforementioned technological revolution.

Each of the possible combinations of sensors and algorithms has its own set of advantages and drawbacks in terms of, for example, cost, portability, efficiency, scalability or accuracy. In our group, we are focusing on the analysis of monocular images. It is more challenging to work with data from a single camera than with multiple images of the same scene (multi-view analysis) or with additional sensors such as LIDAR, which would provide additional spatial information such as depth. Giving up this extra information can mean a loss of accuracy and an extra effort on the algorithm side to obtain the same information about the actors. However, the monocular approach also brings benefits, such as the lower cost of using fewer sensors and the gain in scalability and portability of installing a single camera rather than a whole system of sensors. Sometimes such an approach is necessary due to resource, space or mobility constraints.

Deep Detection and 3D Tracking

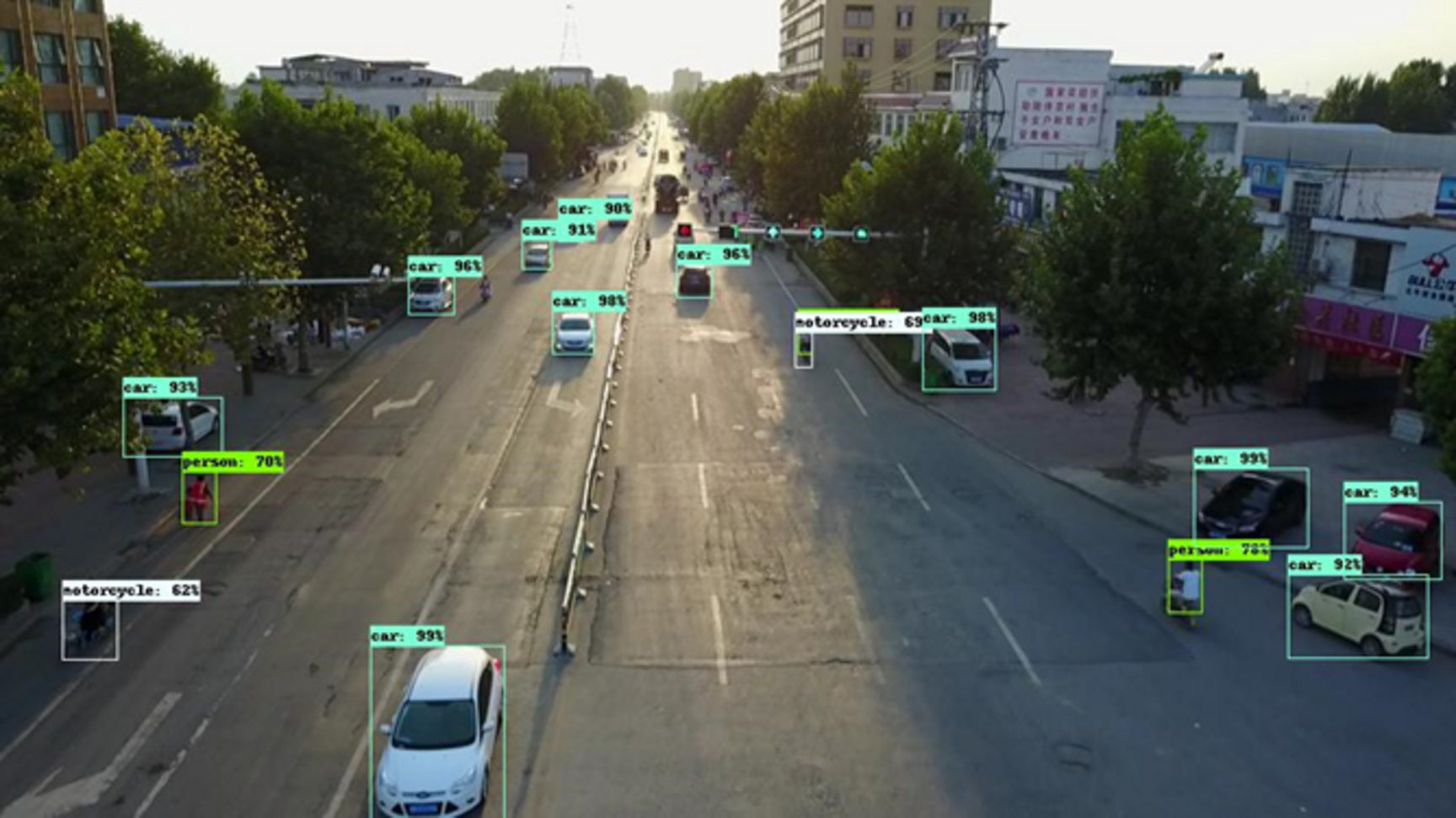

In our research, we have explored state-of-the-art bounding-box detection techniques, which allow finding objects of predefined classes (in our case, pedestrians, bicycles, cars, trucks…) in an image. Given the camera position and parameters, this is the first step towards finding the real-world position of the actors, which in turn allows for a road scene analysis.
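To illustrate how a 2D detection and known camera parameters can lead to a real-world position, the following minimal sketch back-projects the bottom-center pixel of a bounding box onto the road surface. It is an illustration under stated assumptions, not a description of our actual pipeline: it assumes an ideal pinhole camera without lens distortion, a flat road lying on the plane Z = 0, and extrinsics defined by x_cam = R · X_world + t. The function name `bbox_foot_to_ground` and all numeric values are hypothetical.

```python
import numpy as np


def bbox_foot_to_ground(bbox, K, R, t):
    """Back-project the bottom-center of a bounding box onto the plane Z = 0.

    Assumptions (illustrative only): pinhole camera, no lens distortion,
    flat road at Z = 0, and extrinsics such that x_cam = R @ X_world + t.

    bbox: (x_min, y_min, x_max, y_max) in pixels.
    K: 3x3 intrinsic matrix. R: 3x3 rotation. t: translation of length 3.
    Returns the 3D ground point in world coordinates.
    """
    x_min, _, x_max, y_max = bbox
    # The bottom-center of the box is taken as the contact point with the road.
    pixel = np.array([(x_min + x_max) / 2.0, y_max, 1.0])

    # Viewing ray through that pixel, expressed in camera coordinates.
    ray_cam = np.linalg.solve(K, pixel)

    # Camera center and ray direction in world coordinates.
    center = -R.T @ np.asarray(t, dtype=float)
    ray_world = R.T @ ray_cam

    # Intersect the ray center + s * ray_world with the plane Z = 0.
    if abs(ray_world[2]) < 1e-12:
        raise ValueError("Viewing ray is parallel to the ground plane.")
    s = -center[2] / ray_world[2]
    if s <= 0:
        raise ValueError("Viewing ray does not hit the ground in front of the camera.")
    return center + s * ray_world


if __name__ == "__main__":
    # Hypothetical camera mounted 6 m above the road, looking straight down.
    K = np.array([[1000.0, 0.0, 640.0],
                  [0.0, 1000.0, 360.0],
                  [0.0, 0.0, 1.0]])
    R = np.array([[1.0, 0.0, 0.0],
                  [0.0, -1.0, 0.0],
                  [0.0, 0.0, -1.0]])
    camera_center = np.array([0.0, 0.0, 6.0])
    t = -R @ camera_center
    print(bbox_foot_to_ground((900.0, 100.0, 1000.0, 200.0), K, R, t))
```

Using the bottom-center of the box as the contact point is a common simplification; it becomes less reliable when the object is partially occluded or when the box does not tightly enclose it.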

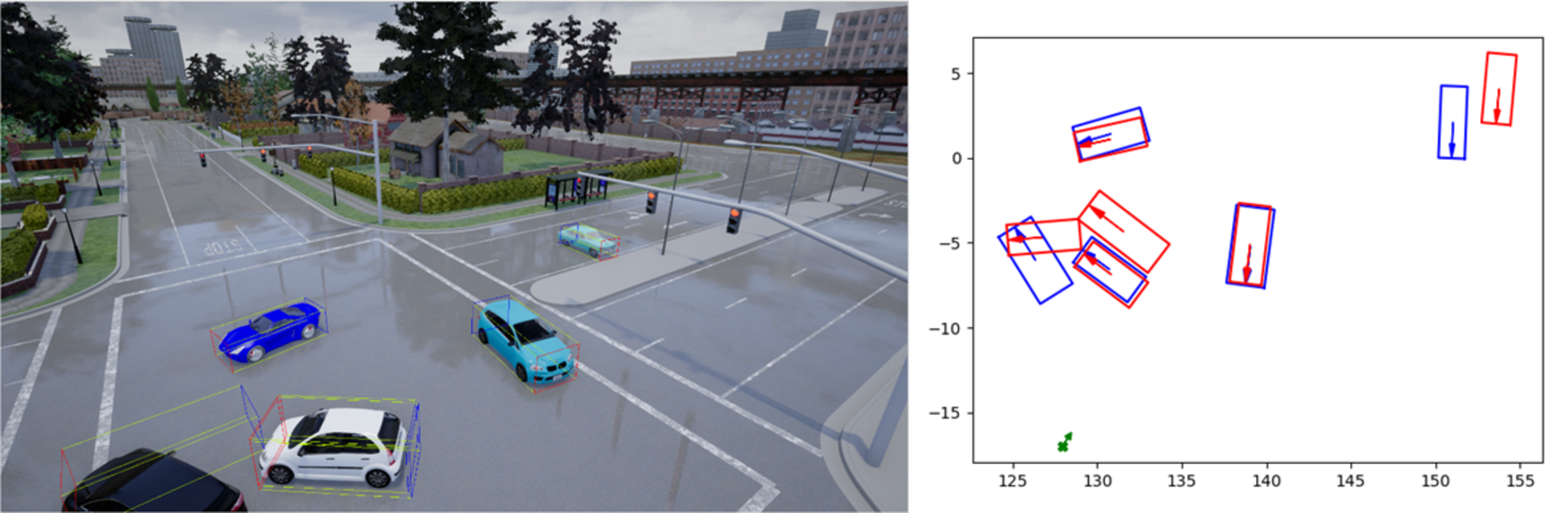

A further step in this direction is to have the algorithm predict the 3D pose of the traffic participants directly from an image. As previously explained, the spatial information that multiple sensors could provide is lost when using a monocular approach, which makes 3D pose prediction a challenging task. However, spatial cues can be found within an image and learnt by a network in order to understand the composition of a scene. We have explored the most recent approaches to this problem and we are adapting them to fit our goal of 3D trajectory detection.

We are also working towards addressing the problem of varying points of view in the images. Most of the annotated data available for training in this field corresponds to images taken from the perspective of a driver, showing the actors from approximately the same level. While this is suitable for applications such as autonomous driving, other applications, such as traffic surveillance, require cameras to be placed in different positions. In this case, cameras have to be mounted on existing fixed structures around the roads, for example traffic lights or streetlights. This means that the traffic participants are observed from above and their appearance in the images is completely different: a car does not look the same from the front as from above.

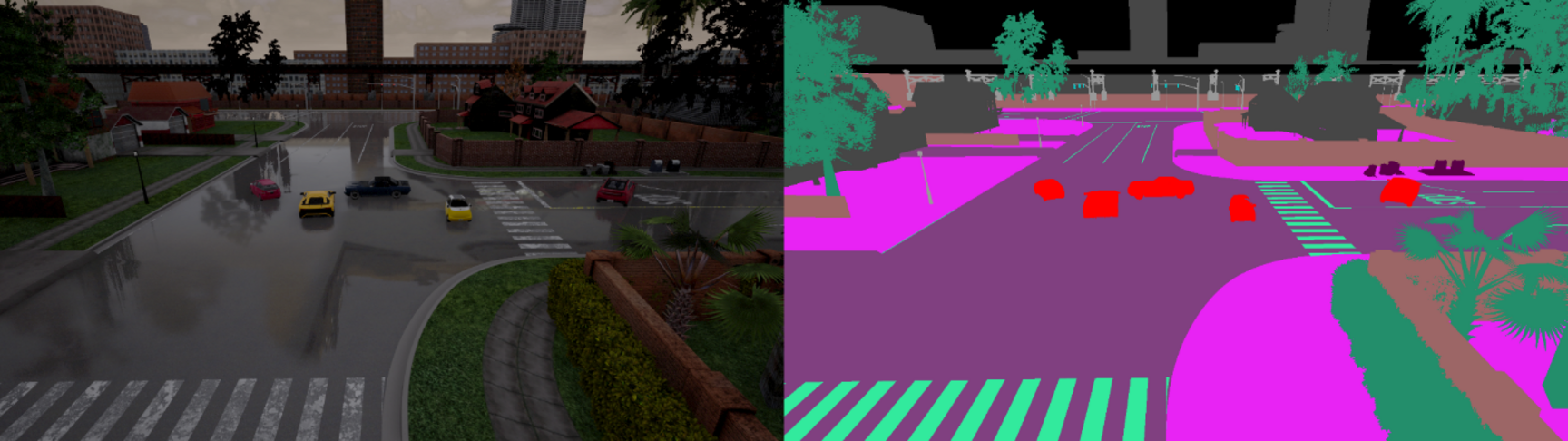

In order to bridge this perspective gap, we are currently exploring synthetic data generation using simulators. Our objective here is to leverage the vast amount of available data from the driver’s point of view for learning the appearance of targets of the different classes and to complement it with synthetically generated data from a more elevated point of view. The main drawback of the generated data is that it does not look exactly like the real data, but it offers many other advantages. One of them is the possibility of obtaining large amounts of perfectly annotated data with precise ground truth information automatically, without manual labeling. Another is the ability to choose exactly what kind of situation we want to simulate, including for example concrete traffic scenarios or weather conditions, instead of hoping to be able to record it in real life.

The value of synthetically generated data and its potential for generalization towards real images have been demonstrated both when it is used as standalone training material and when it complements scarce or otherwise limited real training data.