Knowing the pose of objects in front of a camera with six degrees of freedom (6-DoF), i.e. their rotation and translation in 3D space, is essential for many tasks in augmented reality (AR) and robotic object manipulation. For example, AR glasses that display local assistance information during assembly tasks help workers position workpieces correctly by annotating the field of view with the necessary movements in real time.
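
To make the notion of a 6-DoF pose concrete, the following minimal Python sketch (assuming NumPy) encodes the rotation as a 3-element axis-angle vector and the translation as a 3-element vector, then projects object points into the image with a pinhole camera, which is what an AR overlay ultimately needs. The function names and the camera intrinsics are hypothetical illustration choices, not part of the described system.

```python
import numpy as np

def rotation_from_axis_angle(rvec):
    """Rodrigues' formula: axis-angle 3-vector (radians) -> 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    kx, ky, kz = rvec / theta
    skew = np.array([[0.0, -kz, ky],
                     [kz, 0.0, -kx],
                     [-ky, kx, 0.0]])
    return np.eye(3) + np.sin(theta) * skew + (1.0 - np.cos(theta)) * (skew @ skew)

def project_points(points_obj, rvec, tvec, intrinsics):
    """Apply the 6-DoF pose (3 rotation + 3 translation parameters), then a pinhole projection."""
    R = rotation_from_axis_angle(rvec)
    # X_cam = R @ X_obj + t, written for row vectors
    points_cam = np.asarray(points_obj, dtype=float) @ R.T + np.asarray(tvec, dtype=float)
    uvw = points_cam @ intrinsics.T
    return uvw[:, :2] / uvw[:, 2:3]

# Hypothetical camera: focal length 500 px, principal point (320, 240).
intrinsics = np.array([[500.0, 0.0, 320.0],
                       [0.0, 500.0, 240.0],
                       [0.0, 0.0, 1.0]])
points = np.array([[0.0, 0.0, 0.0],
                   [0.1, 0.0, 0.0]])
# Pose: 90 degrees about the camera z-axis, object origin 1 m in front of the camera.
uv = project_points(points, [0.0, 0.0, np.pi / 2], [0.0, 0.0, 1.0], intrinsics)
# expected pixel coordinates: (320, 240) and (320, 290)
```

The axis-angle form is only one possible rotation parametrisation; quaternions or rotation matrices are equally common.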

Multi-Object Tracking

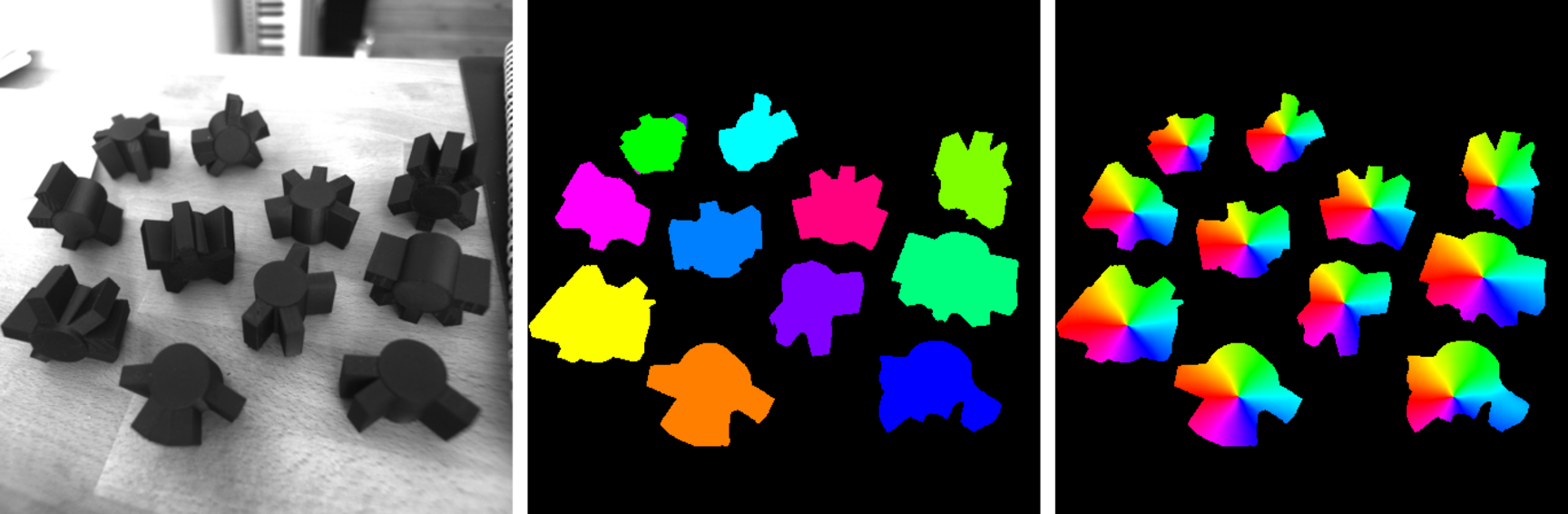

Our research focuses on neural networks for multi-object pose estimation from RGB images. A single neural network distinguishes between a fixed number of objects and estimates the pose of each of them. In contrast to state-of-the-art approaches, which train an independent network for every object, we need only one network inference regardless of how many different objects appear in the image, which reduces computation time. Furthermore, because only a few object-specific weights are added per object, less memory is needed to store the detection network. During training, all target objects are shown to the network simultaneously, so that it learns to distinguish similar objects by their geometric differences.
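
The run-time and memory argument can be made concrete with a back-of-the-envelope calculation. In the Python sketch below all parameter counts are hypothetical placeholders, not figures from the described network, and it assumes for simplicity that the shared network is about as large as one single-object network.

```python
from dataclasses import dataclass

@dataclass
class Cost:
    inferences: int   # forward passes needed per image
    parameters: int   # weights that have to be stored

def one_network_per_object(num_objects: int, params_per_network: int) -> Cost:
    # Every object has its own full network: N forward passes, N full weight sets.
    return Cost(num_objects, num_objects * params_per_network)

def shared_multi_object_network(num_objects: int, shared_params: int, params_per_object: int) -> Cost:
    # One network for all objects: a single forward pass,
    # shared weights plus a small object-specific set per object.
    return Cost(1, shared_params + num_objects * params_per_object)

# Hypothetical numbers: 10 objects, 20M weights per network, 50k object-specific weights.
separate = one_network_per_object(10, 20_000_000)
shared = shared_multi_object_network(10, 20_000_000, 50_000)
print(separate)  # Cost(inferences=10, parameters=200000000)
print(shared)    # Cost(inferences=1, parameters=20500000)
```

With these made-up numbers, ten separate networks need ten forward passes and roughly ten times the storage, whereas the shared network needs a single pass and grows only by the small object-specific part for each additional object.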

We adopt ideas from neural style transfer and conditional image synthesis and apply them to pose estimation. The pixel-wise object segmentation is decoupled from the actual regression of the feature points that provide the 2D-3D correspondences for pose estimation. Instead, the semantic segmentation guides the estimation in a dedicated feature-point decoder: object-specific (de)normalization parameters transform the feature maps according to the spatial arrangement of the segmentation map.
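
The NumPy sketch below illustrates the general idea of segmentation-guided (de)normalization: a feature map is first normalized per channel, then rescaled and shifted with parameters looked up from the object label at each pixel. It is a simplified assumption in the spirit of conditional instance normalization (style transfer) and spatially adaptive normalization (conditional image synthesis); the function name, the per-label lookup tables `gamma` and `beta`, and the label convention (0 = background) are hypothetical, and the actual decoder layer may differ.

```python
import numpy as np

def segmentation_conditioned_norm(features, seg_map, gamma, beta, eps=1e-5):
    """
    Normalize a feature map, then de-normalize it with object-specific parameters.

    features:    (C, H, W) float array, decoder feature map
    seg_map:     (H, W) int array, 0 = background, 1..N = object ids (hypothetical convention)
    gamma, beta: (N + 1, C) per-label scale and shift (hypothetical learned parameters)
    """
    # Normalization: zero mean and unit variance per channel over the spatial dimensions.
    mean = features.mean(axis=(1, 2), keepdims=True)
    var = features.var(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / np.sqrt(var + eps)
    # De-normalization: every pixel uses the scale and shift of the object it belongs to.
    g = gamma[seg_map].transpose(2, 0, 1)  # (H, W, C) -> (C, H, W)
    b = beta[seg_map].transpose(2, 0, 1)
    return normalized * g + b

# Usage: 4 channels, an 8x8 map, object 1 occupying the right half of the image.
rng = np.random.default_rng(0)
features = rng.normal(size=(4, 8, 8))
seg_map = np.zeros((8, 8), dtype=int)
seg_map[:, 4:] = 1
gamma = np.array([[1.0] * 4, [2.0] * 4])
beta = np.array([[0.0] * 4, [5.0] * 4])
out = segmentation_conditioned_norm(features, seg_map, gamma, beta)
```

Because only `gamma` and `beta` depend on the object, each additional object costs just `2 * C` extra values per normalization layer in this sketch, which fits the idea of a few object-specific weights on top of a shared network.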

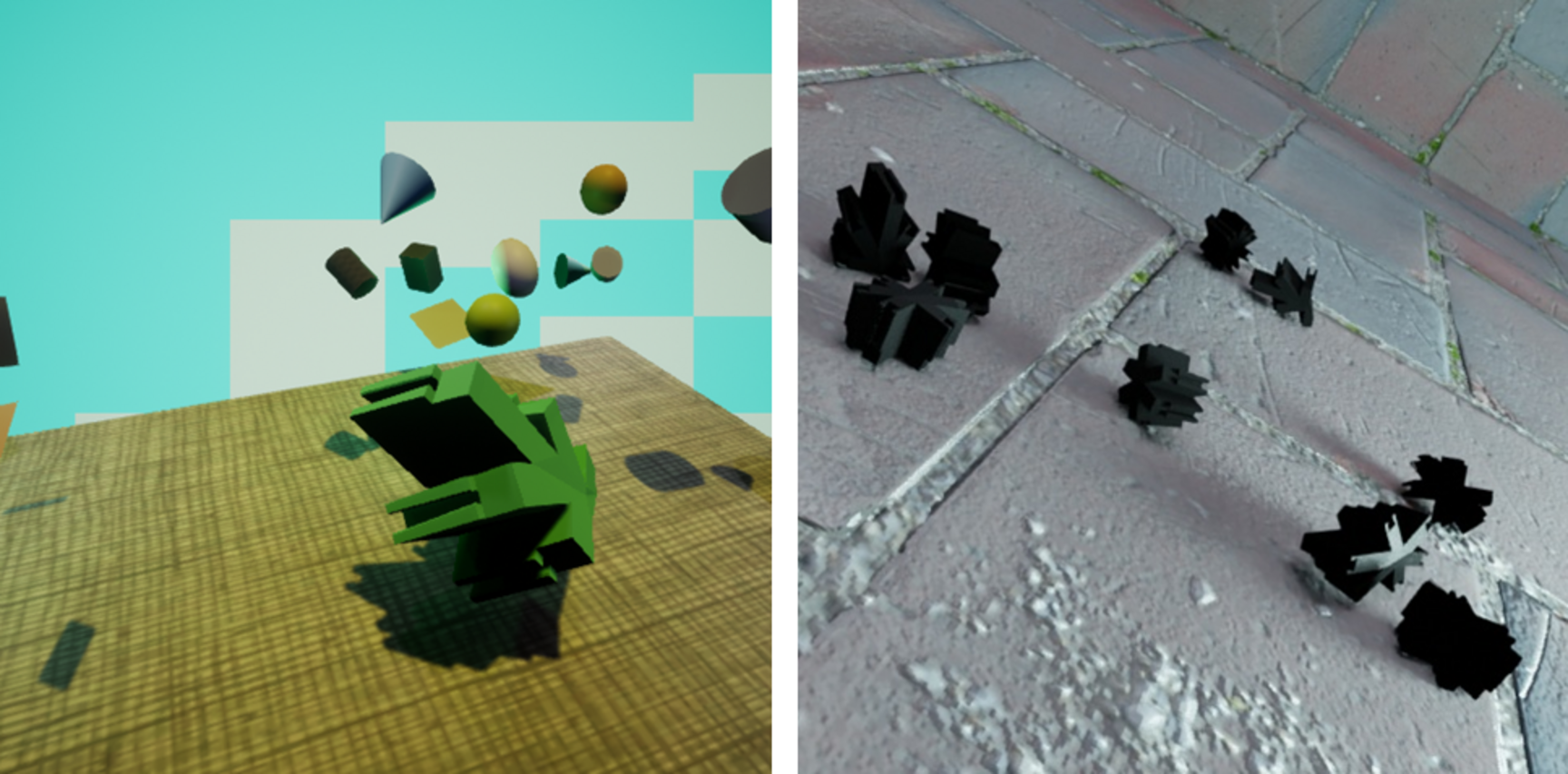

Synthetic Training Data

Training a Convolutional Neural Network (CNN) for object pose estimation requires a large number of images annotated with the associated instance segmentations and object poses. Recording and annotating this training data manually is very time-consuming, inflexible and infeasible in most real-world scenarios. Instead, the training data is generated synthetically from the 3D models of the objects by means of computer graphics. This makes it effortless to generate large datasets whose annotations are perfect by construction. We reduce the performance degradation caused by the difference between real and synthetic images, known as the domain gap, by combining domain-randomised images (with diverse, randomly chosen object textures, scene lighting and backgrounds) with near-photorealistic synthetic images.
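
As an illustration of how such a mixed dataset could be configured, the Python sketch below samples per-image scene parameters, choosing either a near-photorealistic setting or a domain-randomised one with an arbitrary object colour and a wider lighting range. The parameter names, value ranges and the 50/50 mixing ratio (`photoreal_fraction`) are assumptions for illustration; the source does not specify them, and the renderer that would consume these parameters is not shown.

```python
import math
import random
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class SceneParams:
    photorealistic: bool
    object_rgb: Optional[Tuple[float, float, float]]  # None = keep the model's own material
    light_intensity: float
    light_direction: Tuple[float, float, float]       # unit vector
    background_id: int                                # index into a pool of background images

def random_unit_vector(rng: random.Random) -> Tuple[float, float, float]:
    # A normalised isotropic Gaussian sample is uniformly distributed on the sphere.
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 1e-9:
            return (v[0] / norm, v[1] / norm, v[2] / norm)

def sample_scene(rng: random.Random, num_backgrounds: int,
                 photoreal_fraction: float = 0.5) -> SceneParams:
    photoreal = rng.random() < photoreal_fraction
    if photoreal:
        # Near-photorealistic: realistic material, moderate lighting (hypothetical range).
        rgb = None
        intensity = rng.uniform(0.8, 1.2)
    else:
        # Domain randomisation: arbitrary flat colour, much wider lighting range (hypothetical).
        rgb = (rng.random(), rng.random(), rng.random())
        intensity = rng.uniform(0.2, 3.0)
    return SceneParams(photoreal, rgb, intensity,
                       random_unit_vector(rng), rng.randrange(num_backgrounds))

# A seeded generator makes the synthetic dataset reproducible.
rng = random.Random(0)
dataset = [sample_scene(rng, num_backgrounds=100) for _ in range(1000)]
```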

Besides using the synthetic datasets for the pose estimation of specific objects, we evaluate whether the data is suitable for the more general task of learning generic 2D-3D similarity metrics.

Local Refinement

In AR applications in particular, stable poses and pixel-accurate overlays are essential to maintain the illusion of interacting with virtual objects. A CNN is well suited to providing initial pose estimates, but temporal stability of the tracking in video data is hard for the neural network to achieve directly.

After the pose has been initialised, a markerless tracker based on the principle of analysis by synthesis estimates the movement of the component in real time. It adjusts the object pose so that the distance between the computer-generated projection of the model edges and the edges in the image is minimised. An Iteratively Reweighted Least Squares (IRLS) optimisation stabilises the procedure in case of occlusions, e.g. by hands. For uniformly coloured (untextured) objects, this matching must be purely geometry-based. Suitable features are the silhouette and geometry edges of the component, which are easily recognisable in the camera image regardless of the lighting conditions.
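
The core of the IRLS step can be shown on a deliberately reduced problem. In the NumPy sketch below, the pose is simplified to a 2D image shift `t`, each sampled model edge point contributes one residual along its edge normal (the measured distance to the nearest image edge), and Tukey biweights suppress points with large residuals, such as those where a hand covers the edge. This is a toy under stated assumptions: a real tracker optimises all six pose parameters via the Jacobian of the projection, and the source does not state which robust weight function is used; Tukey is one common choice. All function names are hypothetical.

```python
import numpy as np

def tukey_weights(residuals, c):
    """Tukey biweight: smoothly down-weights residuals, zero weight for |r| >= c."""
    u = residuals / c
    w = (1.0 - u ** 2) ** 2
    w[np.abs(u) >= 1.0] = 0.0
    return w

def irls_edge_shift(normals, distances, c=5.0, iterations=10):
    """
    Toy edge alignment (assumption: the pose is reduced to a 2D image shift t).
    normals:   (K, 2) unit normals of the projected model edges at K sample points
    distances: (K,)   signed distance along each normal to the nearest image edge (pixels)
    Minimises sum_i rho(n_i . t - d_i) with the Tukey loss rho via IRLS.
    """
    w = np.ones(len(distances))
    t = np.zeros(2)
    for _ in range(iterations):
        sw = np.sqrt(w)
        # Weighted least squares: scale each equation n_i . t = d_i by sqrt(w_i).
        t, *_ = np.linalg.lstsq(normals * sw[:, None], distances * sw, rcond=None)
        w = tukey_weights(normals @ t - distances, c)
    return t

# Usage: true shift (2, -1) px; 30 of 200 edge samples are "occluded" and report far-off edges.
rng = np.random.default_rng(1)
angles = rng.uniform(0.0, 2.0 * np.pi, 200)
normals = np.stack([np.cos(angles), np.sin(angles)], axis=1)
t_true = np.array([2.0, -1.0])
distances = normals @ t_true + rng.normal(0.0, 0.05, 200)
occluded = rng.choice(200, size=30, replace=False)
distances[occluded] += rng.uniform(20.0, 30.0, size=30)
t_irls = irls_edge_shift(normals, distances)
t_plain = np.linalg.lstsq(normals, distances, rcond=None)[0]
```

The first iteration uses uniform weights and therefore equals plain least squares; later iterations give the occluded points zero weight, so the estimate is pulled back to the shift supported by the visible edges.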

Niklas Gard, Anna Hilsmann, Peter Eisert

Combining Local and Global Pose Estimation for Precise Tracking of Similar Objects,

International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, virtual, February 2022 arXiv

Projects

This topic is funded within the projects BIMKIT and digitalTwin.