Facial expression analysis and synthesis techniques have received increasing interest in recent years. With today's computers, numerous new applications have become feasible in areas such as low bit-rate communication, intuitive and user-friendly computer interfaces, film production and animation, and medicine. We have developed several algorithms for tracking faces and estimating facial expressions from monocular image sequences. These methods are used for model-based video coding, face cloning, and face morphing.

Model-based Video Coding

In model-based coding, three-dimensional computer models define the appearance of all objects in the scene, and only high-level motion, deformation, and lighting information is streamed to represent the dynamic changes in the sequence. For head-and-shoulder video sequences, a camera captures frames of a person participating, e.g., in a virtual conference. From the first frames, a 3D head model specifying the shape and texture of the person is created. This model is encoded and transmitted once, and only if it has not already been stored at the decoder in a previous session. The encoder then analyzes the video sequence and estimates 3D motion and facial expressions using the 3D head model information. The expressions are represented by a set of facial animation parameters (FAPs), which are streamed over the network. At the decoder, the 3D head model is deformed according to the FAPs, and new frames are synthesized using computer graphics techniques. Since only a few parameters have to be transmitted for each frame of the sequence, bit-rates of about 1 kbit/s can be achieved.
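To make the bit-rate figure concrete: at an assumed 25 frames/s, 1 kbit/s leaves only 40 bits per frame for all FAPs. The Python sketch below illustrates one way a parameter stream can fit such a budget: each FAP is quantized predictively (DPCM) against its previously reconstructed value, so smooth facial motion yields small, highly repetitive integer symbols that an entropy coder can compress well. The frame rate, the uniform step size, and the names `bits_per_frame`, `dpcm_encode`, `dpcm_decode`, and `empirical_entropy_bits` are assumptions for illustration; this is a simplified stand-in, not the MPEG-4 FAP bitstream syntax or the coder used in the work described here.

```python
import math
from collections import Counter


def bits_per_frame(bitrate_bps: float, fps: float) -> float:
    """Bit budget available for each frame at a given channel rate."""
    return bitrate_bps / fps


def dpcm_encode(frames, step):
    """Predictively quantize FAP vectors (a simplified sketch, not MPEG-4 syntax).

    frames: list of frames, each a list of FAP values of equal length.
    step:   uniform quantizer step size (an assumed value, in FAP units).
    Each FAP is coded as the quantized difference to its previously
    *reconstructed* value, so encoder and decoder stay in sync.
    """
    recon = [0.0] * len(frames[0])   # mirrors the decoder state
    symbols = []
    for frame in frames:
        q = [round((x - r) / step) for x, r in zip(frame, recon)]
        recon = [r + s * step for r, s in zip(recon, q)]
        symbols.append(q)
    return symbols


def dpcm_decode(symbols, step):
    """Rebuild FAP vectors from the integer symbols produced by dpcm_encode."""
    recon = [0.0] * len(symbols[0])
    out = []
    for q in symbols:
        recon = [r + s * step for r, s in zip(recon, q)]
        out.append(recon)
    return out


def empirical_entropy_bits(symbols):
    """Zero-order entropy (bits per symbol) of all symbols in the stream."""
    flat = [s for frame in symbols for s in frame]
    counts = Counter(flat)
    total = len(flat)
    return -sum(c / total * math.log2(c / total) for c in counts.values())


if __name__ == "__main__":
    budget = bits_per_frame(1000, 25)  # 40.0 bits per frame
    # Hypothetical smooth trajectories for three FAPs over 50 frames
    frames = [[30 * math.sin(0.1 * t), 10 * math.cos(0.05 * t), 0.0] for t in range(50)]
    symbols = dpcm_encode(frames, step=2.0)
    h = empirical_entropy_bits(symbols)
    print(f"budget: {budget} bits/frame, estimate: {h * len(frames[0]):.1f} bits/frame")
```

The entropy value is only a zero-order estimate of what an entropy coder (for instance an arithmetic coder) could achieve per symbol; the reconstruction error per FAP stays within half a quantizer step.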

Expression Cloning / Avatar Animation

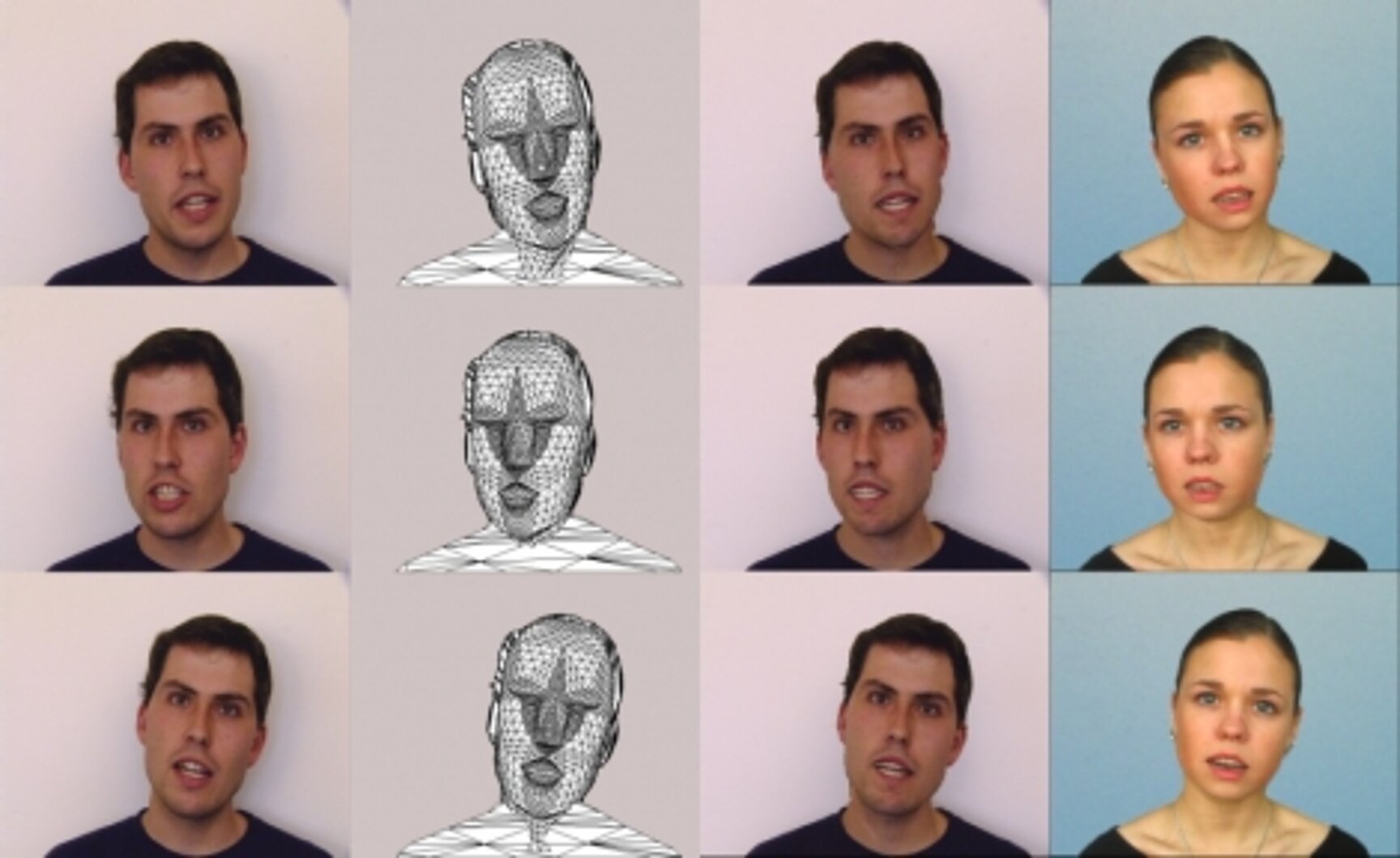

Once 3D descriptions of the scene and the changes of the facial animation parameters over time are available, new sequences can be rendered. It is, e.g., possible to replace the animated person with real or synthetic characters, which is especially interesting for character animation in film productions or for the design of head-and-shoulder animations in web applications or intelligent user interfaces. Below, several examples are given where the original person on the left side is replaced by another one. The right-hand sides of the movies are created by rendering a 3D head model with MPEG-4 facial animation parameters estimated from the original sequence.
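Cloning works because MPEG-4 FAPs are not absolute vertex offsets: they are expressed in facial animation parameter units (FAPUs), which are fractions of distances measured on each model's own neutral face (mouth width, eye separation, and so on). The same FAP stream therefore produces proportionally scaled motion on a differently shaped head. The Python sketch below assumes a simple linear model in which each FAP moves vertices along a fixed per-vertex displacement field, scaled by the FAP value times the target model's FAPU. The `HeadModel` class, the displacement fields, and the two-vertex "mouth" built by `make_mouth_model` are hypothetical; real head models typically use more elaborate deformation rules.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HeadModel:
    neutral: List[Vec3]                # vertex positions of the neutral face
    fap_fields: Dict[str, List[Vec3]]  # hypothetical per-vertex displacement direction per FAP
    fapu: Dict[str, float]             # FAPU length per FAP, measured on this model

    def animate(self, faps: Dict[str, float]) -> List[Vec3]:
        """Deform the neutral face by the given FAP values (linear sketch)."""
        out = [list(v) for v in self.neutral]
        for name, value in faps.items():
            field = self.fap_fields.get(name)
            if field is None:
                continue  # FAP not supported by this model: ignore it
            scale = value * self.fapu[name]  # FAP value converted to model units
            for i, d in enumerate(field):
                for c in range(3):
                    out[i][c] += scale * d[c]
        return [tuple(v) for v in out]


def make_mouth_model(width: float) -> HeadModel:
    """Toy model: two mouth corners; the FAP moves vertex 0 outward along -x."""
    return HeadModel(
        neutral=[(-width / 2, 0.0, 0.0), (width / 2, 0.0, 0.0)],
        fap_fields={"stretch_l_cornerlip": [(-1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]},
        fapu={"stretch_l_cornerlip": width / 1024},  # mouth-width FAPU: MW0 / 1024
    )


if __name__ == "__main__":
    faps = {"stretch_l_cornerlip": 100.0}  # one frame of an estimated FAP stream
    for w in (4.0, 6.0):
        # corner moves 0.390625 for width 4 and 0.5859375 for width 6
        print(w, make_mouth_model(w).animate(faps)[0])
```

Because the displacement is measured in each model's own FAPU, the wider mouth moves proportionally further for the same transmitted FAP value, which is what lets parameters estimated from one person drive another character.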

3D Face Morphing

3D face morphing can be regarded as an extension of expression cloning. Since the generic head model has the same topology for all individual 3D representations, morphing between them is easily accomplished by linearly displacing the vertices of the neutral-expression model from the reference to the target description. Facial expression deformations can be applied throughout the morph, so the approach is not restricted to static images.
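The morphing step can be sketched as per-vertex linear interpolation between the reference and target neutral models, which is only well defined because both share the generic model's topology (same vertex count and order). In the Python sketch below, an expression deformation is then applied to each interpolated frame, so the face can keep moving while it morphs. The function names and the `deform` callback, which stands in for FAP-driven animation of the morphed model, are assumptions for illustration.

```python
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


def lerp_vertices(src: List[Vec3], dst: List[Vec3], alpha: float) -> List[Vec3]:
    """Linearly move every vertex from src (alpha=0) to dst (alpha=1)."""
    if len(src) != len(dst):
        raise ValueError("models must share the same topology (vertex count/order)")
    return [tuple((1 - alpha) * a + alpha * b for a, b in zip(p, q))
            for p, q in zip(src, dst)]


def morph_sequence(src: List[Vec3], dst: List[Vec3],
                   deform: Callable[[List[Vec3], int], List[Vec3]],
                   n_frames: int) -> List[List[Vec3]]:
    """Blend the neutral shapes over n_frames and animate each blended shape.

    deform(vertices, t) is a hypothetical callback that applies the facial
    expression of frame t (e.g. from tracked FAPs) to the interpolated model.
    """
    frames = []
    for t in range(n_frames):
        alpha = t / (n_frames - 1) if n_frames > 1 else 1.0
        frames.append(deform(lerp_vertices(src, dst, alpha), t))
    return frames


if __name__ == "__main__":
    src = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    dst = [(2.0, 0.0, 0.0), (3.0, 2.0, 0.0)]
    lift = lambda verts, t: [(x, y + 0.5, z) for x, y, z in verts]  # toy "expression"
    for frame in morph_sequence(src, dst, lift, 3):
        print(frame)
```

One design choice in this sketch is to evaluate the expression on the interpolated model rather than blending two already-animated faces, so a single set of expression parameters drives the whole morph.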

Selected Publications

P. Eisert

MPEG-4 Facial Animation in Video Analysis and Synthesis, International Journal of Imaging Systems and Technology, vol. 13, no. 5, pp. 245-256, Mar. 2003.

P. Eisert, B. Girod

Analyzing Facial Expressions for Virtual Conferencing, IEEE Computer Graphics and Applications, vol. 18, no. 5, pp. 70-78, Sep. 1998.