Photorealistic modeling of human characters is of special interest in computer graphics because it is required in many areas, for example in modern movie and computer game productions. Creating a realistic generic human model is relatively simple with current modeling software, but modeling an existing real person in detail is still a very cumbersome task.

Our research focuses on realistic, automatic modeling and on tracking of human body motion. A skinning-based approach is chosen to support efficient, realistic animation. For increased realism, an artifact-free skinning function is enhanced to support blending the influence of multiple kinematic joints. As a result, a natural appearance is maintained for a wide range of complex motions. To set up a subject-specific model, an automatic, data-driven optimization framework is introduced. Registered, watertight example meshes in different poses are used as input. In an efficient loop, all components of the animatable model are optimized to closely resemble the training data: vertices, kinematic joints and skinning weights.
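
To make the idea of blending the influence of multiple joints concrete, the following is a minimal sketch of plain linear blend skinning in 2D. It is not the artifact-free skinning function described above; it only illustrates the basic principle that each vertex is moved by a weighted mix of per-joint rigid transforms. All names (`rotate_about`, `linear_blend_skinning`, `pivots`, `angles`) are hypothetical, and for simplicity the joints are treated as independent rather than as a kinematic chain.

```python
import math


def rotate_about(point, pivot, angle):
    """Rotate a 2D point about a pivot by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    dx, dy = point[0] - pivot[0], point[1] - pivot[1]
    return (pivot[0] + c * dx - s * dy, pivot[1] + s * dx + c * dy)


def linear_blend_skinning(vertices, weights, pivots, angles):
    """Blend per-joint rigid transforms of each rest-pose vertex.

    vertices: list of (x, y) rest-pose positions
    weights:  weights[i][j] is the influence of joint j on vertex i
              (each row is assumed to sum to 1)
    pivots:   list of (x, y) joint centres (illustrative assumption)
    angles:   list of joint rotation angles in radians
    """
    posed = []
    for v, w_row in zip(vertices, weights):
        x = y = 0.0
        for w, pivot, angle in zip(w_row, pivots, angles):
            px, py = rotate_about(v, pivot, angle)
            x += w * px
            y += w * py
        posed.append((x, y))
    return posed


# Toy "arm": joint 0 stays fixed, joint 1 (the "elbow") bends by 90 degrees.
vertices = [(0.5, 0.0), (2.0, 0.0), (1.5, 0.0)]
weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
pivots = [(0.0, 0.0), (1.0, 0.0)]
angles = [0.0, math.pi / 2]

posed = linear_blend_skinning(vertices, weights, pivots, angles)
```

The third vertex is influenced equally by both joints, so it ends up halfway between its two rigidly transformed positions. This straight-line averaging is the source of the well-known shrinkage artifacts of linear blending, which is one motivation for more elaborate skinning functions.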

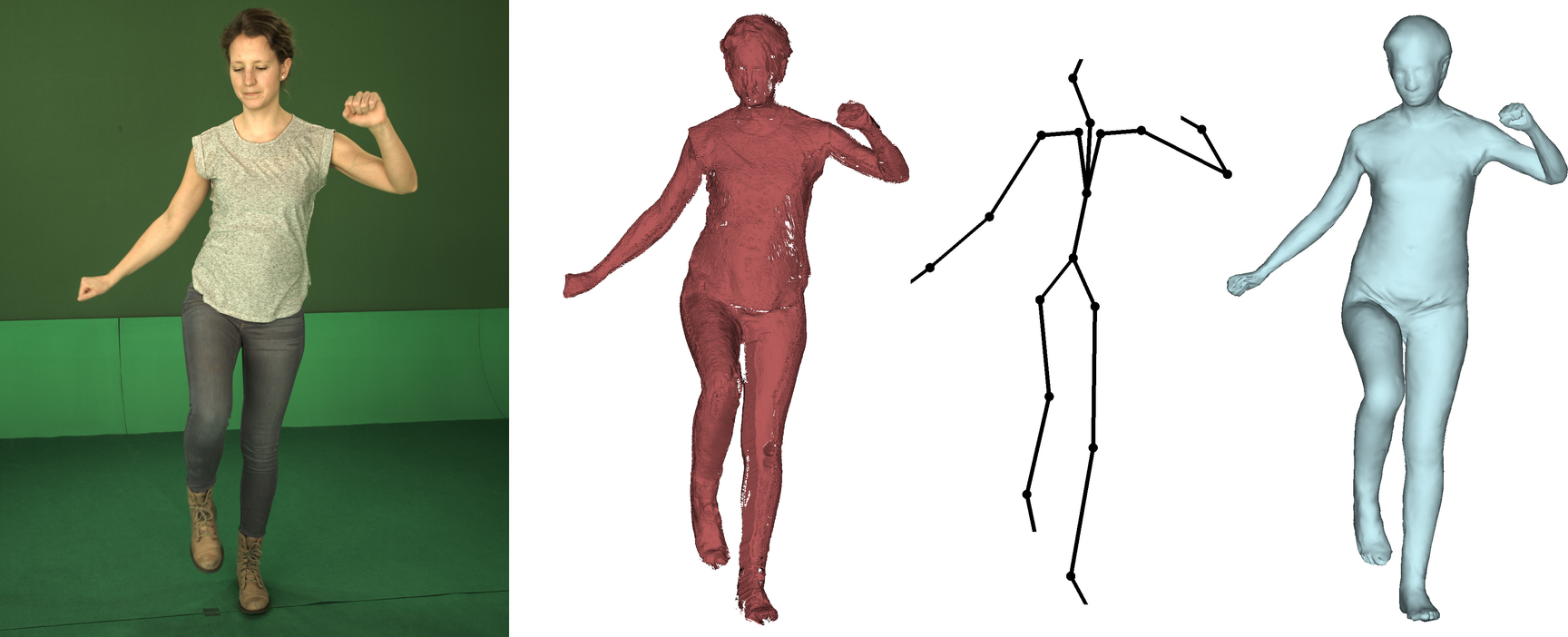

For tracking sequences of noisy, partial 3D observations, a markerless motion capture method with simultaneous detailed model adaptation is proposed. The non-parametric formulation supports free-form deformation of the model’s shape as well as unconstrained adaptation of the kinematic joints, which allows individual peculiarities of the captured subject to be extracted. Integrated a priori knowledge of human shape and pose, extracted from training data, ensures that the adapted models maintain a natural and realistic appearance. The result is an animatable model adapted to the captured subject together with a sequence of animation parameters that faithfully reproduce the input data.
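
As a rough illustration of what fitting a model to partial observations involves, the sketch below performs a single rigid alignment step in 2D: nearest-neighbour correspondences followed by a closed-form least-squares rotation and translation. This is a swapped-in, much simpler technique (one iteration of rigid ICP), not the non-parametric method with shape and joint adaptation described above. All function names are hypothetical.

```python
import math


def nearest_neighbours(model, observed):
    """Pair each observed point with its closest model point (brute force)."""
    pairs = []
    for q in observed:
        p = min(model, key=lambda m: (m[0] - q[0]) ** 2 + (m[1] - q[1]) ** 2)
        pairs.append((p, q))
    return pairs


def fit_rigid_2d(pairs):
    """Least-squares angle and translation mapping model points p onto observations q."""
    n = len(pairs)
    pcx = sum(p[0] for p, _ in pairs) / n
    pcy = sum(p[1] for p, _ in pairs) / n
    qcx = sum(q[0] for _, q in pairs) / n
    qcy = sum(q[1] for _, q in pairs) / n
    s_dot = s_cross = 0.0
    for p, q in pairs:
        ax, ay = p[0] - pcx, p[1] - pcy
        bx, by = q[0] - qcx, q[1] - qcy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    angle = math.atan2(s_cross, s_dot)
    c, s = math.cos(angle), math.sin(angle)
    # Translation moves the rotated model centroid onto the observed centroid.
    t = (qcx - (c * pcx - s * pcy), qcy - (s * pcx + c * pcy))
    return angle, t


def apply_rigid_2d(points, angle, t):
    """Rotate points about the origin by `angle`, then translate by `t`."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]


def icp_step(model, observed):
    """One alignment step: match observations to the model, then move the whole model."""
    angle, t = fit_rigid_2d(nearest_neighbours(model, observed))
    return apply_rigid_2d(model, angle, t)


# The observation covers only two of the three model points (partial data).
model = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
observed = apply_rigid_2d(model[:2], 0.05, (0.02, 0.01))
aligned = icp_step(model, observed)
```

Even though the third model point is never observed, it is moved consistently with the rest of the model. A real tracking pipeline would iterate this step, handle noise and outliers, and replace the single rigid transform with an articulated, deformable model.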

Altogether, these approaches provide realistic and automatic modeling of human characters accurately resembling sequences of 3D input data. Going one step further, these techniques can be used to directly re-animate recorded 3D sequences by propagating vertex motion changes back to the captured data.

Publications

A. Zimmer, A. Hilsmann, W. Morgenstern, P. Eisert:

Imposing Temporal Consistency on Deep Monocular Body Shape and Pose Estimation, Computational Visual Media, February 2022. Open Access, arXiv.

P. Fechteler:

Multi-View Motion Capture based on Model Adaptation, Dissertation, Humboldt-Universität zu Berlin, Mathematisch-Naturwissenschaftliche Fakultät, November 2019.

P. Fechteler, A. Hilsmann, P. Eisert:

Markerless Multiview Motion Capture with 3D Shape Model Adaptation, Computer Graphics Forum 38(6), pp. 91-109, March 2019. doi: 10.1111/cgf.13608

P. Fechteler, L. Kausch, A. Hilsmann, P. Eisert:

Animatable 3D Model Generation from 2D Monocular Visual Data, Proceedings of the 25th IEEE International Conference on Image Processing, Athens, Greece, 7th - 10th October 2018.

P. Fechteler, W. Paier, A. Hilsmann, P. Eisert:

Real-time Avatar Animation with Dynamic Face Texturing, Proceedings of the 23rd International Conference on Image Processing, Phoenix, Arizona, USA, 25th - 28th September 2016.

P. Fechteler, A. Hilsmann, P. Eisert:

Example-based Body Model Optimization and Skinning, Eurographics 2016 Short Paper, Lisbon, Portugal, May 2016.

P. Fechteler, W. Paier, P. Eisert:

Articulated 3D Model Tracking with on-the-fly Texturing, Proceedings of the 21st International Conference on Image Processing (ICIP), Paris, France, 27th - 30th October 2014.

P. Fechteler, A. Hilsmann, P. Eisert:

Kinematic ICP for Articulated Template Fitting, Proceedings of the 17th International Workshop on Vision, Modeling and Visualization (VMV), Magdeburg, Germany, 12th - 14th November 2012.

P. Fechteler and P. Eisert:

Recovering Articulated Pose of 3D Point Clouds, Proceedings of the 8th European Conference on Visual Media Production (CVMP), London, UK, 16th - 17th November 2011.