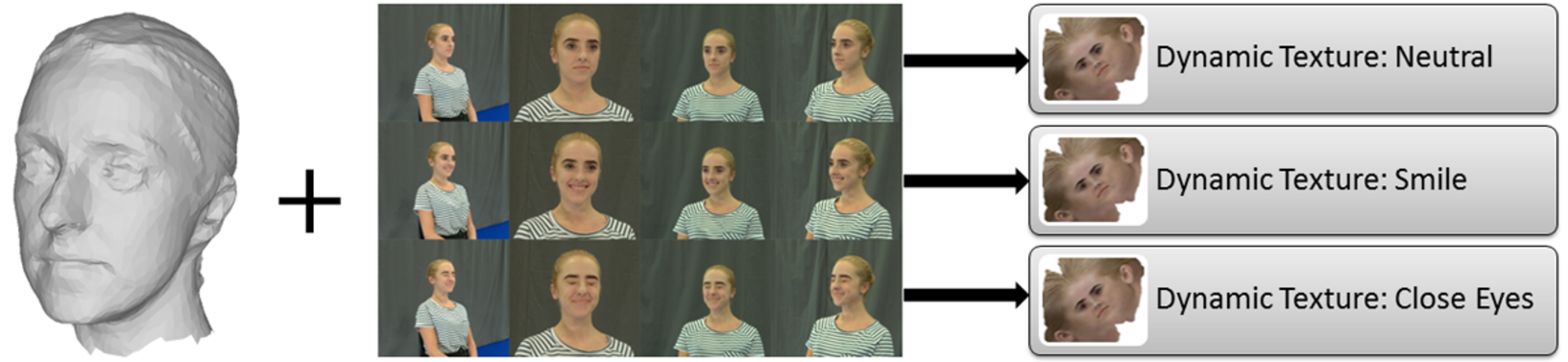

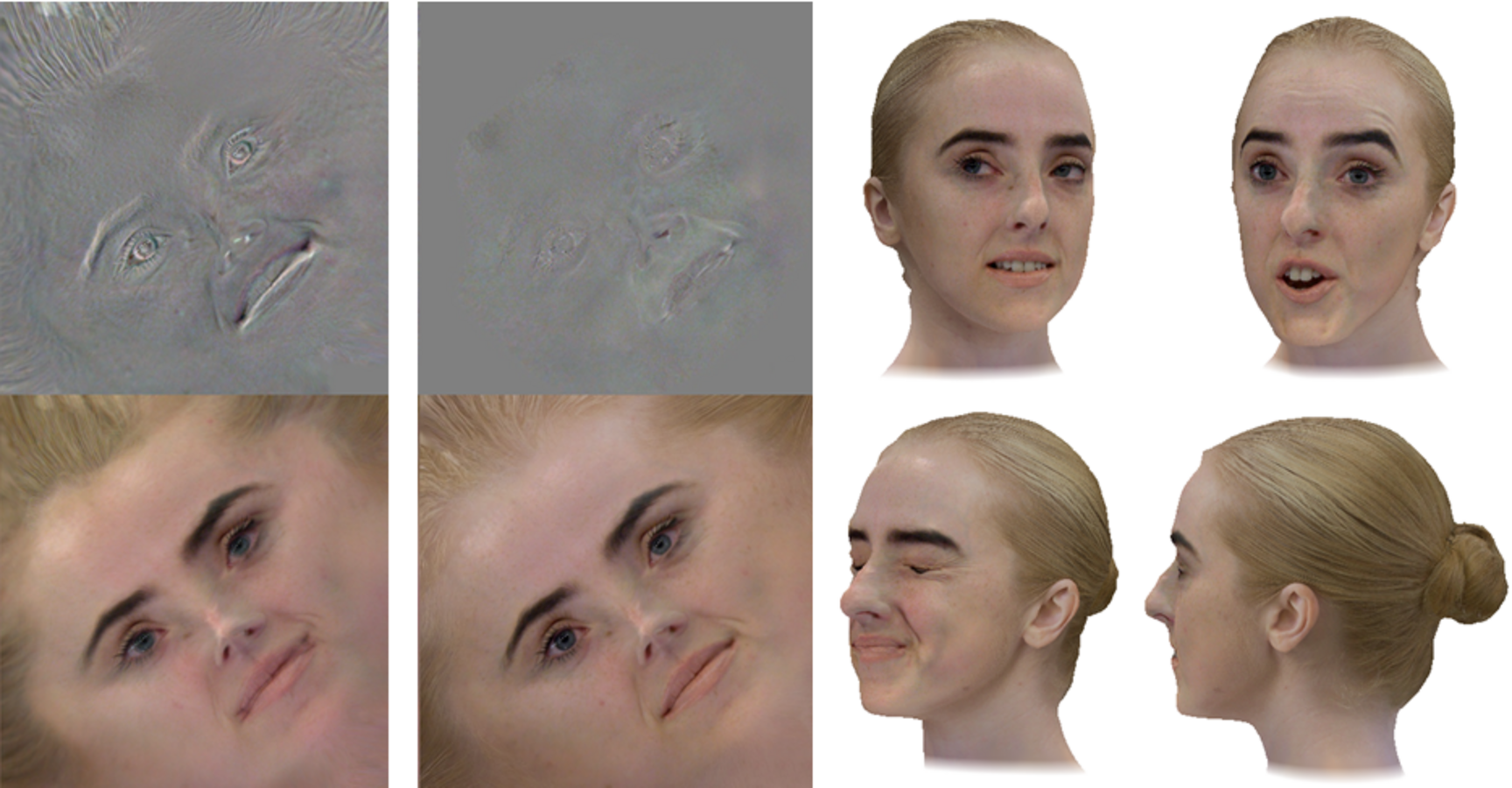

We developed a framework to aid the creation of animatable face/head models of human characters from calibrated (multiview) video streams. Our technique relies mainly on texture-based animation to capture the fine-scale details of facial expressions (e.g. small motions, wrinkles ...). In addition, a low-dimensional geometric proxy handles basic lighting, global transformations such as rotation and translation, and large-scale deformations of the face (e.g. moving the jaw).

First we create a geometric proxy of the actor. Then, labels (e.g. smile, talk, blink ...) are assigned to interesting sections in the video stream. For each labelled sequence we track the position and orientation of the proxy in 3D space in order to generate a dynamic texture.

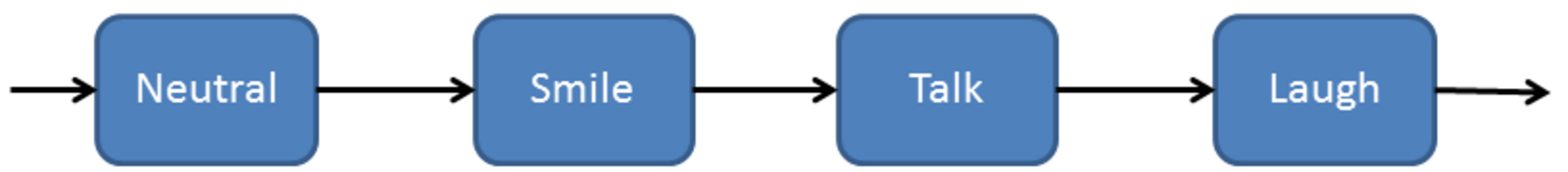

Playing these dynamic textures on the geometric proxy like a video creates photorealistic facial performances without the need to model every fine deformation in geometry. Furthermore, by concatenating and/or looping these basic actions we can synthesize novel facial performances. The only user input required is a sequence of facial-expression labels, so even untrained users can perform facial animation or video editing tasks with photorealistic results.
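The idea of turning a list of expression labels into a playable texture stream can be illustrated with a minimal sketch. It is not the framework's actual interface: the function name `build_playlist` and the `clip_lengths` dictionary are hypothetical, and each labelled dynamic texture is represented only by its frame count. Repeating a label loops the corresponding action.

```python
def build_playlist(labels, clip_lengths):
    """Expand a sequence of expression labels into (label, frame_index) pairs.

    labels       -- user-supplied sequence such as ["smile", "blink", "blink"]
    clip_lengths -- hypothetical mapping from label to the number of frames
                    in the dynamic texture recorded for that label
    """
    playlist = []
    for label in labels:
        if label not in clip_lengths:
            raise KeyError(f"no dynamic texture recorded for label {label!r}")
        # Play every frame of the labelled clip in order; listing the same
        # label twice in a row loops the action.
        for frame_index in range(clip_lengths[label]):
            playlist.append((label, frame_index))
    return playlist


if __name__ == "__main__":
    clips = {"smile": 3, "blink": 2}
    for entry in build_playlist(["smile", "blink", "blink"], clips):
        print(entry)
```

In such a scheme the boundaries between consecutive clips are the places where a transition blend would be needed.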

To ensure that no artefacts appear at the transition between two sequences (e.g. due to changes in facial expression, small tracking inaccuracies or changes in lighting), we employ a combination of geometric blending and an anisotropic cross dissolve. Both blending techniques are applied in texture space. The geometric blending is implemented as a 2D mesh-based warp that roughly aligns the facial expression in the last frame of the current texture sequence with that in the first frame of the next texture sequence. The remaining colour differences are stored in a difference image. Applying the geometric warp and adding the difference image to the last n=60 frames of the current sequence, with successively increasing weights, creates a smooth transition without noticeable artefacts even when the facial expression or lighting conditions change. Because the blend is spread over a large number of frames, the additional motion introduced in each frame is not noticeable.
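The transition described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the published implementation: the 2D mesh-based warp is replaced by a dense per-pixel displacement field with nearest-neighbour sampling, the weights follow a plain linear ramp (the anisotropic weighting is omitted), and the names `warp_nearest` and `blend_transition` are hypothetical.

```python
import numpy as np


def warp_nearest(image, flow):
    """Backward-warp `image` (H, W, C) by a displacement field `flow` (H, W, 2).

    flow[..., 0] is the row offset and flow[..., 1] the column offset of the
    source pixel; sampling is nearest-neighbour with clamping at the border.
    """
    h, w = image.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    src_y = np.clip(np.rint(ys + flow[..., 0]).astype(int), 0, h - 1)
    src_x = np.clip(np.rint(xs + flow[..., 1]).astype(int), 0, w - 1)
    return image[src_y, src_x]


def blend_transition(frames, flow, next_first, n=60):
    """Blend the last n textures of `frames` towards `next_first`.

    frames     -- list of float texture arrays (H, W, C), current sequence
    flow       -- displacement field that roughly aligns the last frame of
                  the current sequence with `next_first` (assumed given)
    next_first -- first texture of the following sequence
    """
    n = max(1, min(n, len(frames)))
    start = len(frames) - n
    # Colour residual left over after the full geometric warp.
    diff = next_first - warp_nearest(frames[-1], flow)
    out = list(frames[:start])
    for k in range(n):
        weight = (k + 1) / n  # increases linearly up to 1 on the last frame
        warped = warp_nearest(frames[start + k], weight * flow)
        out.append(warped + weight * diff)
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    seq = [rng.random((8, 8, 3)) for _ in range(5)]
    target = rng.random((8, 8, 3))
    shift = np.ones((8, 8, 2))
    blended = blend_transition(seq, shift, target, n=3)
    print(np.allclose(blended[-1], target))
```

With a weight of 1 on the final frame, the warped texture plus the difference image reproduces the first frame of the next sequence, so playback can switch sequences without a visible jump.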

Videos

Video Based Facial Re-Animation

Realistic Retargeting of Facial Video

Publications

Wolfgang Paier, Markus Kettern, Anna Hilsmann, Peter Eisert

A Hybrid Approach for Facial Performance Analysis and Editing,

IEEE Transactions on Circuits and Systems for Video Technology, vol. 27, no. 4, pp. 784-797, April 2017, doi: 10.1109/tcsvt.2016.2610078

Philipp Fechteler, Wolfgang Paier, Anna Hilsmann, Peter Eisert

Real-time Avatar Animation with Dynamic Face Texturing,

Proceedings of the 23rd International Conference on Image Processing (ICIP 2016), Phoenix, Arizona, USA, Sept. 2016

Wolfgang Paier, Markus Kettern, Anna Hilsmann, Peter Eisert

Video-based Facial Re-Animation, European Conference on Visual Media Production (CVMP), London, UK, 2015.

Wolfgang Paier, Markus Kettern, Peter Eisert

Realistic Retargeting of Facial Video, European Conference on Visual Media Production (CVMP), London, UK, 2014.