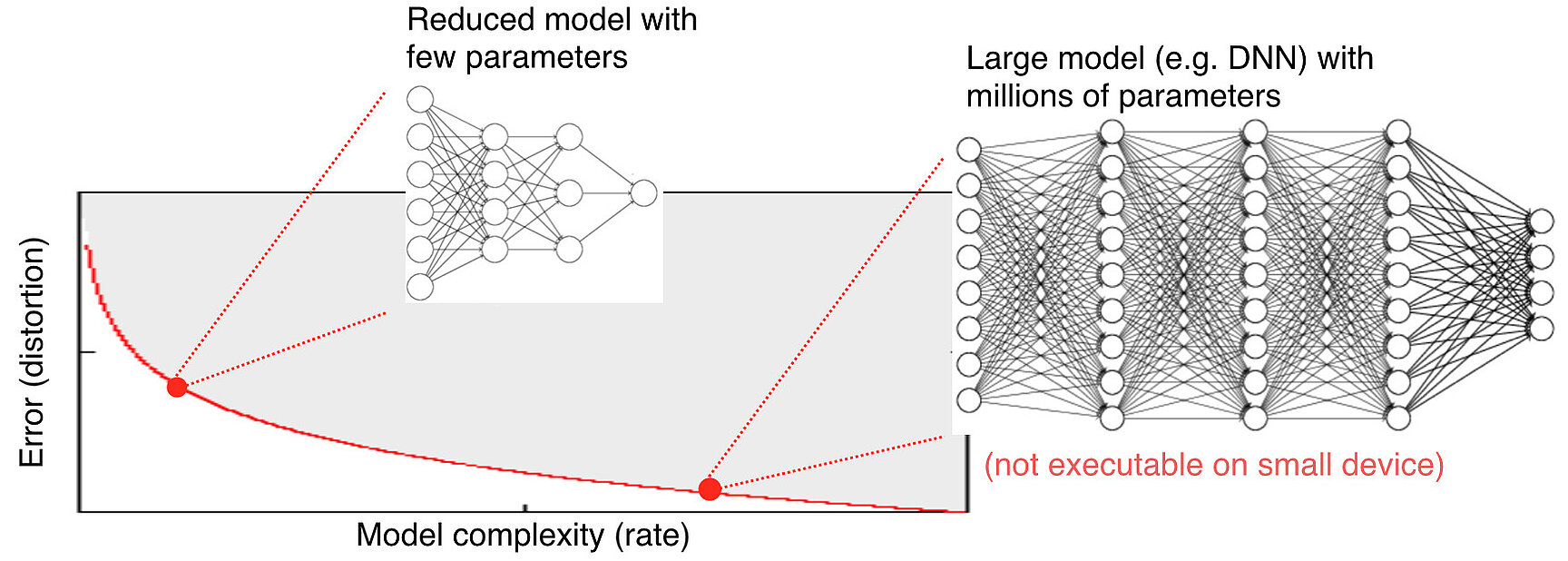

Large machine learning models have become today's most popular state-of-the-art prediction tools. This applies to both major learning technologies in use: deep neural networks (DNNs) with millions of weight parameters and support vector machines (SVMs) with thousands of support vectors. In practice, such heavy parameter usage is a serious disadvantage, because it requires special-purpose hardware or larger storage. A large number of applications on mobile devices and embedded systems would become possible if state-of-the-art machine learning models could be reduced easily with little or no loss in performance.

Our research focuses on developing the theoretical underpinnings and the algorithmic and practical basis for reducing learning machines, in order to open up new application areas for reduced ML methods. This includes techniques for reducing the complexity and increasing the execution efficiency of deep neural networks, as well as an information-theoretic description of the problem.
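As a rough illustration of what "reducing" a learning machine can mean in practice, the sketch below applies unstructured magnitude pruning. This is one common, simple reduction technique, chosen here only for illustration, and it is not presented as the method developed in this research. The function names `magnitude_prune` and `sparse_storage_bytes`, the 90 % sparsity level, the float32 weight size and the 4-byte coordinate-list index layout are all assumptions made for the example.

```python
import random


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Return a copy of `weights` with the smallest-magnitude entries set to zero.

    `sparsity` is the fraction of entries to remove (0.0 keeps everything,
    0.9 zeroes out 90 %). Illustrative unstructured magnitude pruning only.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = round(sparsity * len(weights))
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def sparse_storage_bytes(weights: list[float], value_bytes: int = 4, index_bytes: int = 4) -> int:
    """Bytes needed to store only the nonzero weights plus one index each.

    Assumes a simple coordinate-list layout; real sparse formats differ.
    """
    nonzeros = sum(1 for w in weights if w != 0.0)
    return nonzeros * (value_bytes + index_bytes)


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical layer with one million Gaussian-distributed weights.
    layer = [random.gauss(0.0, 1.0) for _ in range(1_000_000)]
    dense_bytes = 4 * len(layer)  # dense float32 storage
    pruned = magnitude_prune(layer, sparsity=0.9)
    print(f"dense:  {dense_bytes / 1e6:.1f} MB")
    print(f"sparse: {sparse_storage_bytes(pruned) / 1e6:.1f} MB")
```

Under these assumptions, zeroing 90 % of a one-million-weight layer reduces storage from 4.0 MB (dense float32) to 0.8 MB (100,000 values plus their indices). The index overhead is why 90 % sparsity gives a 5× rather than a 10× saving. In practice, pruning is commonly followed by retraining to recover accuracy, and the actual savings depend on the sparse format and hardware used.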

Publication

- W. Samek, S. Stanczak, and T. Wiegand, "The Convergence of Machine Learning and Communications", ITU Journal: ICT Discoveries - Special Issue 1 - The Impact of Artificial Intelligence (AI) on Communication Networks and Services, September 2017.