Intra region-based template matching

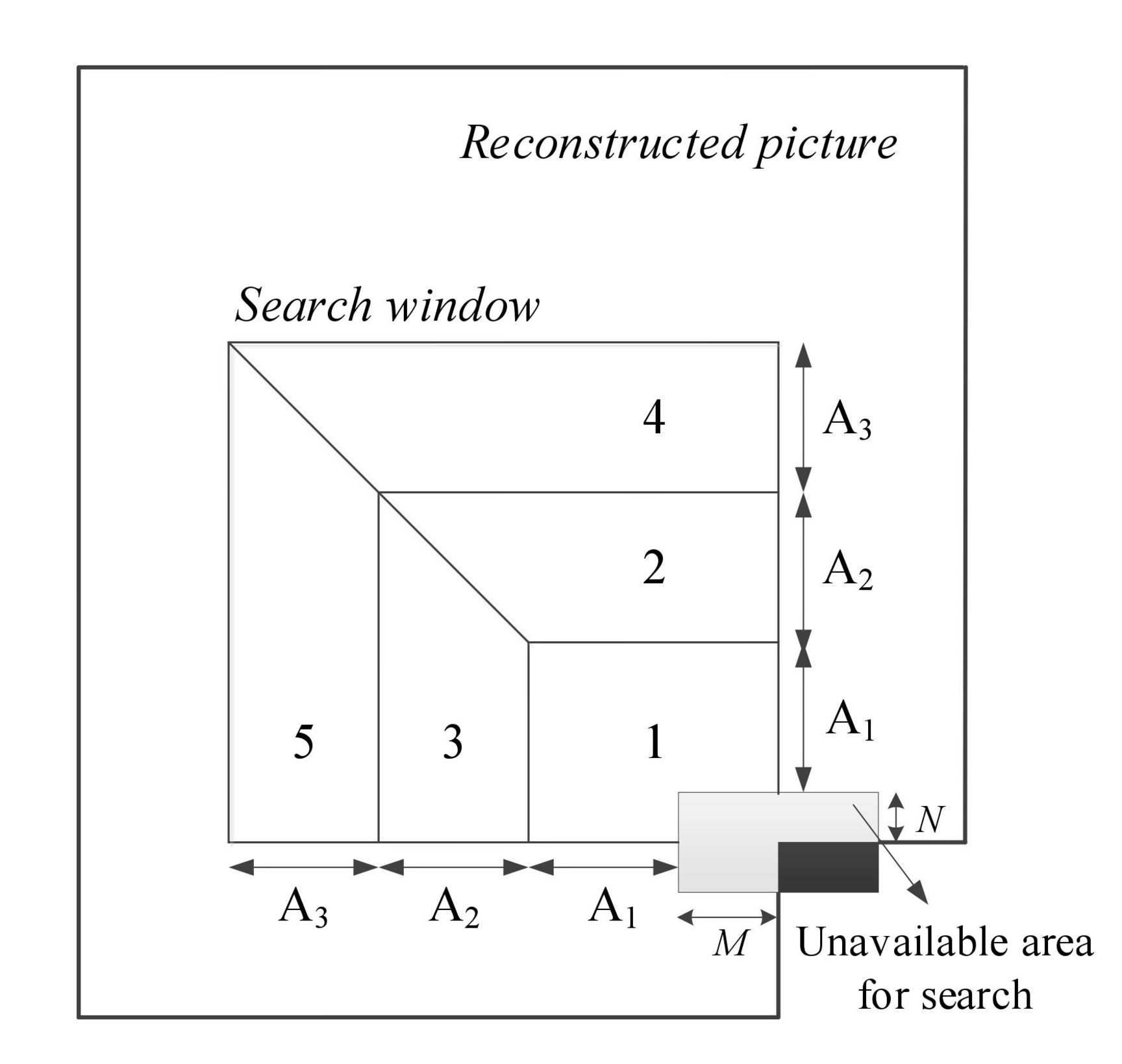

Intra region-based template matching (IRTM) generates a prediction signal for a current block by copying already reconstructed blocks inside the same picture. The location of these blocks is described by integer displacement vectors, which are not explicitly signaled in the bitstream. Instead, they are derived by finding the best match between a template T, consisting of reconstructed samples adjacent to the current block, and the displaced template. To limit the complexity of the template matching search, the search is typically restricted to a window. The figure above shows an example with a template (gray) around a current block (white) and different search windows.

We developed an algorithm that, unlike conventional template matching algorithms, avoids searching a large picture area by partitioning the search window into regions. The region to be searched is indicated by an index that is coded in the bitstream.
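The search described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the algorithm from the cited papers: the function names `template_offsets` and `irtm_predict`, the L-shaped template of thickness `t`, the sum of absolute differences as matching cost, and the rectangular `region` of candidate positions are all hypothetical choices. How the search window is actually partitioned into regions is not specified here, so the region is simply passed in as if it had been selected by the decoded index.

```python
def template_offsets(w, h, t):
    """Offsets (relative to the block's top-left sample) of an L-shaped
    template of thickness t above and to the left of a w x h block."""
    return [(ox, oy)
            for oy in range(-t, h)
            for ox in range(-t, w)
            if ox < 0 or oy < 0]


def irtm_predict(rec, x, y, w, h, t, region):
    """Template-matching prediction for the w x h block at (x, y).

    rec    : 2D list of reconstructed samples, indexed rec[row][col]
    region : (x0, y0, x1, y1), inclusive rectangle of candidate block
             positions; assumed to stand for the region chosen by the
             index decoded from the bitstream (hypothetical layout)

    The caller must ensure that every candidate block and its template
    lie inside the already reconstructed picture area.
    Returns the derived displacement vector and the copied block.
    """
    offsets = template_offsets(w, h, t)
    x0, y0, x1, y1 = region
    best = None
    for cy in range(y0, y1 + 1):
        for cx in range(x0, x1 + 1):
            # Sum of absolute differences between the current template
            # and the template displaced to the candidate position.
            cost = sum(abs(rec[y + oy][x + ox] - rec[cy + oy][cx + ox])
                       for ox, oy in offsets)
            if best is None or cost < best[0]:
                best = (cost, cx - x, cy - y)
    _, dx, dy = best
    pred = [[rec[y + dy + j][x + dx + i] for i in range(w)]
            for j in range(h)]
    return (dx, dy), pred
```

Because encoder and decoder run the same search on the same reconstructed samples, only the region index has to be transmitted. The cost of the search grows with the number of candidate positions in the selected region rather than with the size of the whole window.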

References

- G. Venugopal, P. Merkle, D. Marpe, and T. Wiegand, “Fast template matching for intra prediction,” in Proc. IEEE Int. Conf. Image Process. (ICIP), Beijing, Sep. 2017, pp. 1692–1696.

- G. Venugopal, D. Marpe, and T. Wiegand, “Region-based template matching for decoder-side motion vector derivation,” in IEEE International Conference on Visual Communications and Image Processing (VCIP), Taichung, Taiwan, 2018.

- G. Venugopal, P. Helle, K. Müller, D. Marpe, and T. Wiegand, “Hardware-friendly intra region-based template matching for VVC,” in Proc. Data Compress. Conf. (DCC), Snowbird, UT, USA, Mar. 2019, p. 606.

Multi-hypothesis inter prediction

Multi-hypothesis inter prediction generates an inter-picture prediction signal by linearly superimposing more than one motion-compensated signal; these signals are called hypotheses. This approach can reduce the impact of the noise present in each individual hypothesis on the overall prediction and can thus lead to a higher prediction quality. In previous standards, the maximum number of hypotheses is restricted to two. We generalized this concept so that a linear combination of an arbitrary number $n$ of hypotheses is supported. Starting from a single motion-compensated signal $p_1$, we inductively add a hypothesis $h_{i+1}$ and define

$$p_{i+1} = (1 - \alpha_{i+1})\, p_i + \alpha_{i+1}\, h_{i+1},$$

until $i = n - 1$, which results in the prediction signal $p_n$. Here, $\alpha_{i+1}$ is chosen from a set of predefined, experimentally determined weighting factors that also contains negative weights. The additional motion information required is either explicitly transmitted or inferred in merge mode.
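The inductive definition translates directly into a loop. The following is a minimal sketch, assuming that blocks are 2D lists of sample values and that rounding and clipping to the sample range are left out; the function name `multi_hypothesis` is hypothetical, and the weights 0.25 and -0.125 in the usage note are illustrative values only, not the predefined set mentioned above.

```python
def multi_hypothesis(hypotheses, alphas):
    """Combine n motion-compensated blocks into one prediction.

    hypotheses : [p_1, h_2, ..., h_n], each a 2D list of samples
    alphas     : [alpha_2, ..., alpha_n], one weight per added hypothesis

    Implements p_{i+1} = (1 - alpha_{i+1}) * p_i + alpha_{i+1} * h_{i+1}.
    """
    if len(alphas) != len(hypotheses) - 1:
        raise ValueError("need exactly one weight per additional hypothesis")
    # Start from the single motion-compensated signal p_1.
    p = [[float(v) for v in row] for row in hypotheses[0]]
    for h, a in zip(hypotheses[1:], alphas):
        p = [[(1.0 - a) * pv + a * hv for pv, hv in zip(p_row, h_row)]
             for p_row, h_row in zip(p, h)]
    return p
```

For example, `multi_hypothesis([[[100, 100]], [[120, 80]], [[95, 105]]], [0.25, -0.125])` first forms $p_2 = (105, 95)$ and then $p_3 = (106.25, 93.75)$. A negative weight pushes the prediction away from the added hypothesis, so the result can lie outside the range spanned by the individual signals.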

References

- M. Winken, C. Bartnik, H. Schwarz, D. Marpe, and T. Wiegand, “Multi-Hypothesis Inter Prediction,” doc. JVET-J0041, San Diego, Apr. 2018.

Signal Adaptive Diffusion Filter

We applied signal adaptive diffusion filters to inter- or intra-prediction signals. The idea of these filters is to increase the prediction quality by smoothing the prediction signals in such a way that noise is removed but edges are kept. One way to realize such a filtering process is to define $F_t(\mathrm{pred})$, $t \ge 0$, as a solution of

$$\frac{\partial}{\partial t} F_t(\mathrm{pred})(x, y) = \operatorname{div}\bigl(c(x, y)\, \nabla F_t(\mathrm{pred})(x, y)\bigr),$$

with $F_0(\mathrm{pred}) = \mathrm{pred}$, where div denotes the divergence operator. The function c is chosen such that smoothing acts uniformly within image regions of similar sample values but is not carried out across edges. To detect such edges, smoothed versions of the gradient of pred are used. In particular, the function c depends on the prediction signal pred itself.
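One way to make the diffusion equation concrete is an explicit finite-difference scheme. The sketch below rests on several assumptions that the text does not specify: a Perona-Malik-type conductivity $c = 1 / (1 + |\nabla \mathrm{pred}|^2 / k^2)$, central differences without the pre-smoothing of the gradient, a 4-neighbor stencil with zero flux across the block boundary, and the hypothetical parameters `k`, `dt` and `steps`. It illustrates the principle only and is not the filter from the cited publications.

```python
def conductivity(pred, k):
    """Edge-stopping function c(x, y), computed once from pred itself.
    Assumption: Perona-Malik-type c = 1 / (1 + |grad pred|^2 / k^2)."""
    h, w = len(pred), len(pred[0])
    c = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Central differences with replicated border samples.
            gx = (pred[y][min(x + 1, w - 1)] - pred[y][max(x - 1, 0)]) / 2.0
            gy = (pred[min(y + 1, h - 1)][x] - pred[max(y - 1, 0)][x]) / 2.0
            c[y][x] = 1.0 / (1.0 + (gx * gx + gy * gy) / (k * k))
    return c


def diffuse(pred, k=10.0, dt=0.2, steps=5):
    """Explicit time stepping of dF/dt = div(c * grad F) with F_0 = pred.
    Since c <= 1, dt <= 0.25 keeps the 4-neighbor scheme stable."""
    h, w = len(pred), len(pred[0])
    c = conductivity(pred, k)
    f = [[float(v) for v in row] for row in pred]
    for _ in range(steps):
        g = [row[:] for row in f]
        for y in range(h):
            for x in range(w):
                flux = 0.0
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w:
                        # Conductivity between two samples: mean of both.
                        weight = 0.5 * (c[y][x] + c[ny][nx])
                        flux += weight * (f[ny][nx] - f[y][x])
                g[y][x] = f[y][x] + dt * flux
        f = g
    return f
```

Where the gradient of pred is small, c is close to 1 and the update behaves like ordinary smoothing. Across a strong edge, c becomes small and almost no flux is exchanged, so the edge is kept. The scheme also preserves the mean of the block, because every flux leaving one sample enters its neighbor.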

The figure above compares the two filter types for a particular example. The bitstream signals whether diffusion is applied to a given block and which diffusion filter type is chosen.

References

- J. Rasch, J. Pfaff, M. Schäfer, A. Henkel, H. Schwarz, D. Marpe, and T. Wiegand, “A Signal Adaptive Diffusion Filter for Video Coding: Improved Parameter Selection,” APSIPA Transactions on Signal and Information Processing, 2019.

- J. Rasch, J. Pfaff, M. Schäfer, H. Schwarz, M. Winken, M. Siekmann, D. Marpe, and T. Wiegand, “A Signal Adaptive Diffusion Filter for Video Coding,” in Proc. IEEE Picture Coding Symp. (PCS), San Francisco, June 2018, pp. 131–133.

- J. Pfaff, J. Rasch, M. Schäfer, H. Schwarz, A. Henkel, M. Winken, M. Siekmann, D. Marpe, and T. Wiegand, “Signal Adaptive Diffusion Filters for Video Coding,” doc. JVET-J0038, San Diego, Apr. 2018.

Prediction Refinement using DCT-Thresholding

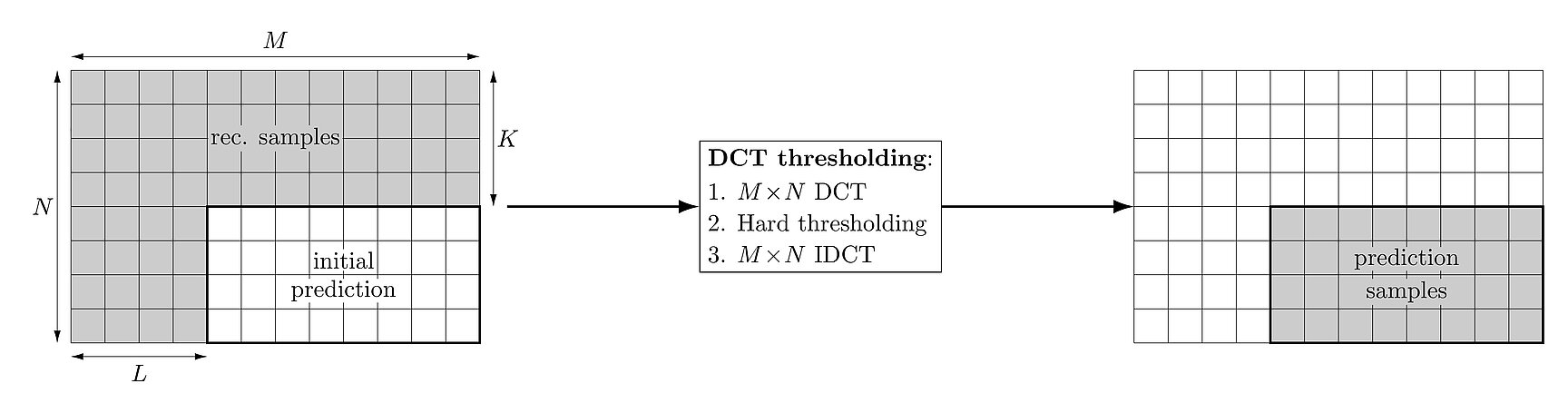

We designed a thresholding method that aims to improve the prediction quality by aligning a given prediction signal with reconstructed samples in a neighborhood of the block. It exploits the sparsity in the DCT domain that is typical of natural images, and thus works as a texture synthesis tool that improves the prediction.

As illustrated in the figure above, we start with a prediction signal p that can arise from either motion-compensated or spatial intra prediction. Then, we extend p to an enlarged prediction y using the reconstructed samples to the left and above. We transform y with the orthogonal discrete cosine transform and obtain Y. For a threshold value τ > 0, we set to zero all components of Y whose absolute value is smaller than τ. Transforming the result back and restricting it to the given block yields the modified prediction signal. The threshold as well as the extension sizes are signaled in the bitstream.
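The steps above can be sketched in plain Python with an orthonormal DCT-II built from its matrix definition. Several simplifications are assumptions of this sketch: a single extension size `ext` is used for both the rows above and the columns to the left, the function names are hypothetical, and rounding and clipping to the sample range are omitted.

```python
import math


def dct_matrix(n):
    """Orthonormal DCT-II matrix of size n x n."""
    return [[(math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n))
             * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
             for i in range(n)]
            for k in range(n)]


def matmul(a, b):
    return [[sum(u * v for u, v in zip(row, col)) for col in zip(*b)]
            for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def refine_prediction(pred, rec, x, y, ext, tau):
    """Refine the prediction pred of the block whose top-left sample is (x, y).

    rec : 2D list of reconstructed samples, indexed rec[row][col]
    ext : number of reconstructed rows above / columns to the left that
          are used for the extension (assumed equal in both directions)
    tau : threshold; coefficients with |Y| < tau are set to zero
    """
    h, w = len(pred), len(pred[0])
    # Enlarged signal y: reconstructed samples above and to the left,
    # prediction samples inside the block.
    big = [[rec[y - ext + j][x - ext + i] for i in range(w + ext)]
           for j in range(h + ext)]
    for j in range(h):
        for i in range(w):
            big[ext + j][ext + i] = pred[j][i]
    dv, dh = dct_matrix(h + ext), dct_matrix(w + ext)
    # Forward 2D transform: Y = Dv * y * Dh^T.
    coeff = matmul(matmul(dv, big), transpose(dh))
    coeff = [[0.0 if abs(v) < tau else v for v in row] for row in coeff]
    # Inverse transform (the matrices are orthogonal): y' = Dv^T * Y * Dh.
    out = matmul(matmul(transpose(dv), coeff), dh)
    # Restrict the result to the original block.
    return [row[ext:] for row in out[ext:]]
```

Because the transform is applied to the enlarged signal, the coefficients that survive the threshold describe structure shared by the prediction and its reconstructed neighborhood. With a very small threshold the function returns the prediction essentially unchanged, and larger thresholds remove progressively more of the weak components.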

References

- M. Schäfer, J. Pfaff, J. Rasch, T. Hinz, H. Schwarz, T. Nguyen, G. Tech, D. Marpe, and T. Wiegand, “Improved Prediction via Thresholding Transform Coefficients,” in Proc. IEEE Int. Conf. Image Process. (ICIP), Athens, Oct. 2018, pp. 2546–2549.