Powerful machine learning algorithms such as deep neural networks (DNNs) can now exploit extremely large amounts of training data and thereby achieve record performance in many research fields. At the same time, DNNs are generally regarded as black-box methods, because it is difficult to understand, intuitively or quantitatively, how they arrive at a result, i.e., for an individual novel input data point, what made the trained DNN model produce a particular response. Since this lack of transparency can be a major drawback, e.g., in medical applications, research towards explainable artificial intelligence (XAI) has recently attracted increasing attention.

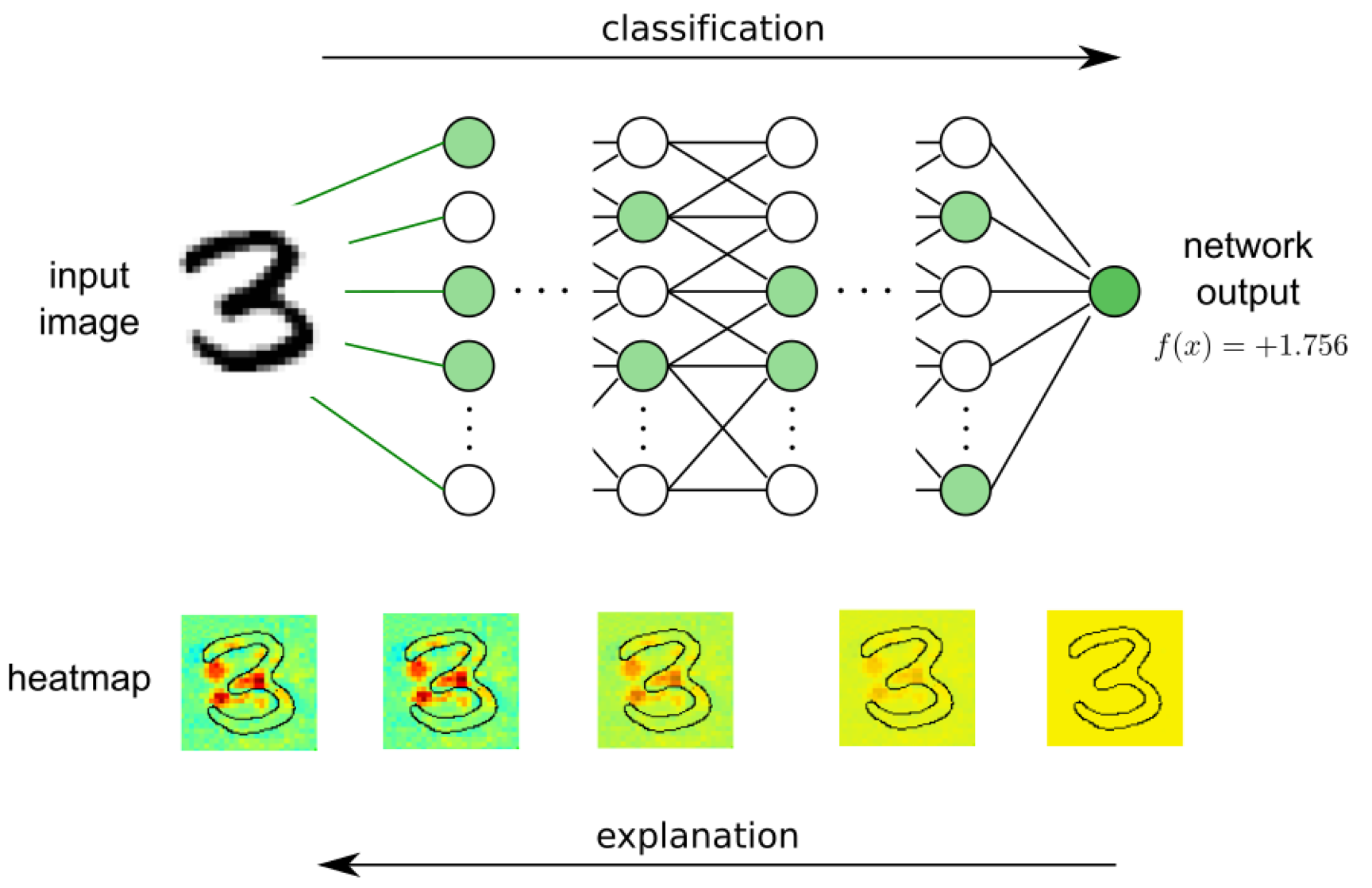

Our work focuses on the development of methods for visualizing, explaining and interpreting deep neural networks and other black-box machine learning models. We developed a principled approach that decomposes a classification decision of a DNN into pixel-wise relevance scores, each indicating the contribution of one pixel to the overall classification score. The approach is derived from layer-wise conservation principles, meaning that the total relevance is preserved as it is redistributed from one layer to the next, and it leverages the structure of the neural network. Due to its generality, our approach can be applied to a variety of tasks and network architectures.
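
The decomposition described above can be illustrated with a minimal sketch. It assumes a small fully connected ReLU network and uses one commonly used propagation rule, often called the epsilon rule; the function name `lrp_epsilon`, its parameters, and the toy network are hypothetical and chosen for illustration only, not taken from our released code. With zero biases, the input relevances sum (up to the small stabiliser `eps`) to the explained output score, which is the layer-wise conservation property.

```python
import numpy as np


def lrp_epsilon(weights, biases, x, target, eps=1e-9):
    """Illustrative relevance propagation for a ReLU MLP (hypothetical helper).

    weights[i] has shape (n_in, n_out); biases[i] has shape (n_out,).
    Returns the output scores and one relevance value per input feature.
    """
    # Forward pass, keeping the input activation of every layer.
    activations = [np.asarray(x, dtype=float)]
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = activations[-1] @ W + b
        is_last = i == len(weights) - 1
        # ReLU on hidden layers, linear output layer.
        activations.append(z if is_last else np.maximum(z, 0.0))

    # Initialise: all relevance sits on the score being explained.
    relevance = np.zeros_like(activations[-1])
    relevance[target] = activations[-1][target]

    # Backward pass: redistribute relevance from each layer to the one below.
    layers = zip(reversed(weights), reversed(biases), reversed(activations[:-1]))
    for W, b, a in layers:
        z = a @ W + b
        z = z + eps * np.where(z >= 0, 1.0, -1.0)  # avoid division by zero
        s = relevance / z            # relevance per unit of pre-activation
        relevance = a * (W @ s)      # share attributed to each lower-layer unit
    return activations[-1], relevance


# Toy example: random two-layer network with zero biases (assumed values).
rng = np.random.default_rng(0)
weights = [rng.normal(size=(6, 4)), rng.normal(size=(4, 3))]
biases = [np.zeros(4), np.zeros(3)]
x = rng.normal(size=6)

scores, relevance = lrp_epsilon(weights, biases, x, target=int(np.argmax(x @ weights[0] @ weights[1] * 0 + 1)))
print("explained score:", scores[0])
print("sum of input relevances:", relevance.sum())
```

For an image classifier, `x` would be the flattened pixel values and `relevance` could be reshaped to the image size and shown as a heatmap. Non-zero biases absorb part of the relevance, so the sum over inputs then no longer matches the output score exactly.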

Publications

- W. Samek, T. Wiegand, and K.-R. Müller, "Explainable Artificial Intelligence: Understanding, Visualizing and Interpreting Deep Learning Models", ITU Journal: ICT Discoveries - Special Issue 1 - The Impact of Artificial Intelligence (AI) on Communication Networks and Services, September 2017.

- G. Montavon, W. Samek, and K.-R. Müller, "Methods for Interpreting and Understanding Deep Neural Networks", arXiv:1706.07979, June 2017.

- G. Montavon, S. Lapuschkin, A. Binder, W. Samek, and K.-R. Müller, "Explaining Nonlinear Classification Decisions with Deep Taylor Decomposition", Pattern Recognition, vol. 65, pp. 211–222, May 2017.

- L. Arras, F. Horn, G. Montavon, K.-R. Müller, and W. Samek, "'What is Relevant in a Text Document?': An Interpretable Machine Learning Approach", PLOS ONE, vol. 12, no. 8, pp. e0181142, August 2017.

- S. Lapuschkin, A. Binder, K.-R. Müller, and W. Samek, "Understanding and Comparing Deep Neural Networks for Age and Gender Classification", Proceedings of the ICCV'17 Workshop on Analysis and Modeling of Faces and Gestures (AMFG), Venice, Italy, October 2017.

- L. Arras, G. Montavon, K.-R. Müller, and W. Samek, "Explaining Recurrent Neural Network Predictions in Sentiment Analysis", Proceedings of the EMNLP'17 Workshop on Computational Approaches to Subjectivity, Sentiment & Social Media Analysis (WASSA), Copenhagen, Denmark, pp. 159–168, September 2017.

- V. Srinivasan, S. Lapuschkin, C. Hellge, K.-R. Müller, and W. Samek, "Interpretable human action recognition in compressed domain", Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, pp. 1692–1696, March 2017.

- W. Samek, A. Binder, G. Montavon, S. Lapuschkin, and K.-R. Müller, "Evaluating the visualization of what a Deep Neural Network has learned", IEEE Transactions on Neural Networks and Learning Systems, August 2016.

- S. Lapuschkin, A. Binder, G. Montavon, K.-R. Müller, and W. Samek, "Analyzing Classifiers: Fisher Vectors and Deep Neural Networks", Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, pp. 2912–2920, June 2016.

- I. Sturm, S. Lapuschkin, W. Samek, and K.-R. Müller, "Interpretable Deep Neural Networks for Single-Trial EEG Classification", Journal of Neuroscience Methods, vol. 274, pp. 141–145, December 2016.

- F. Arbabzadeh, G. Montavon, K.-R. Müller, and W. Samek, "Identifying Individual Facial Expressions by Deconstructing a Neural Network", Pattern Recognition - 38th German Conference, GCPR 2016, Lecture Notes in Computer Science, vol. 9796, pp. 344–354, Springer International Publishing, August 2016.

- S. Bach, A. Binder, G. Montavon, F. Klauschen, K.-R. Müller, and W. Samek, "On Pixel-wise Explanations for Non-Linear Classifier Decisions by Layer-wise Relevance Propagation", PLOS ONE, vol. 10, no. 7, pp. e0130140, July 2015.