DOMEconnect

Streaming of interactive, real-time generated media between immersive venues

September 2022 - February 2025

AI AR Microscope

Assistive Artificial Intelligence and Augmented Reality Tools for Microscopic Diagnostics in Dermatology and Microscopic Surgery

Duration: July 2023 - January 2025

NalamKI

Sustainable agriculture using artificial intelligence

January 2021 – December 2024

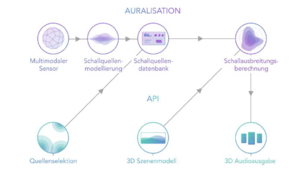

AMAVI

Acoustical Modeling for Auralizations in Virtual Infrastructure

November 2022 - October 2024

COMPAIR

Community Observation Measurement & Participation in AIR Science

November 2021 - October 2024

SmartSite

BIM-compliant, cooperative and automated planning and control processes on the digital construction site

September 2022 – August 2024

Mouse2Dat

Development of Automated Tools for the Analysis of Imaging Studies in the Context of Tracer Development

Duration: August 2022 – July 2024

Mouse2Dat is co-funded by the German Federal Ministry for Economic Affairs and Climate Action (Bundesministerium für Wirtschaft und Klimaschutz, BMWK) as a ZIM project.


VoluProf

Volumetric professor for omnipresent and user-optimized teaching in mixed reality

September 2021 – August 2024

BIMKIT

BIMKIT: As-built modeling of buildings and infrastructure using AI to generate digital twins

January 2021 – June 2024

FakeID

FakeID: Video analysis using artificial intelligence to detect false and manipulated identities

April 2021 - March 2024

TeMoRett

TeMoRett: Technology-assisted motor rehabilitation for people with Rett syndrome

February 2021 – January 2024

KIPos

KIPos: AI-guided postoperative care of cardiac surgery patients.

February 2021 - January 2024

Gemimeg II

Gemimeg II: Secure and robust calibrated measurement systems for the digital transformation

August 2020 – December 2023


VoViREx

Volumetric Virtual Reality Experience with a survivor of the Holocaust

December 2021 - December 2023


SAGA

System for Algorithmic Generation of Synthesis Methods for Procedural Audio Simulations

January 2021 - December 2023

INVICTUS

INVICTUS: Innovative Volumetric Capture and Editing Tools for Ubiquitous Storytelling

October 2020 – December 2022


KIVI

KIVI: AI-based Generation of interactive Volumetric Assets

October 2020 – September 2022


D4Fly

D4Fly: Detecting Document frauD and iDentity on the fly

September 2019 – August 2022


Research Alliance Cultural Heritage

Phase 1: January 2016 - December 2018

Phase 2: December 2019 – November 2022

Funded by Fraunhofer Directorate, Prof. Raimund Neugebauer.


Virtual LiVe

Virtualization of Live Events via Audiovisual Immersion

January 2021 – December 2021


COMPASS

Comprehensive Surgical Landscape Guidance System for Immersive Assistance in Minimally-invasive and Microscopic Interventions

September 2018 - September 2021


digitalTWIN

Digital Tools and Workflow Integration for Building Lifecycles

March 2018 – February 2021


3D-Finder

Robust generic 3D face capture for authentication and identity verification

July 2017 - January 2021


TeleSTAR

Telepresence for Surgical Assistance and Training using Augmented Reality

January 2020 - December 2020


SS3D++

SS3D++: Single Sensor 3D ++

January 2020 – December 2020

Content4All

Personalised Content Creation for the Deaf Community in a Connected Digital Single Market

September 2017 - November 2020


LZDV

The Berlin Center for Digital Transformation

Phase I: July 2016 – June 2018

Phase II: July 2018 – June 2020


EASY-COHMO

Ergonomics Assistance Systems for contactless Human-Machine-Operation

January 2017 – December 2019


VReha

Virtual worlds for digital diagnostics and cognitive rehabilitation

December 2017 – November 2019


PersonVR

Embedding of real persons in Virtual Reality productions using 3D video processing

July 2017 – June 2019


ANANAS

Anomaly detection to prevent attacks on facial recognition systems

June 2016 – May 2019


3D-LivingLab

Demonstrator 3D-LivingLab for the 3Dsensation research alliance

March 2017 – February 2019


MEF AuWiS

Audio-Visual Capturing and Reproduction of Traffic Noise for the Simulation of Noise Mitigation Methods

January 2017 – December 2018


REPLICATE

cReative-asset harvEsting PipeLine to Inspire Collective-AuThoring and Experimentation

January 2016 – December 2018


M3D

Mobile 3D Capturing and 3D Printing for Industrial Applications

April 2016 – September 2018


MEF ESMobS

Self-research project for medium-sized companies: Even layered Structures for Micro optics of broad imaging Systems

January 2016 – December 2017


VENTURI

October 2011 – September 2014

To date, convincing AR has only been demonstrated on small mock-ups in controlled spaces; we haven’t yet seen the key conditions met that would make AR a booming technology: seamless persistence and pervasiveness. VENTURI addresses these issues by creating a user-appropriate, contextually aware AR system through seamless integration of core technologies and applications on a state-of-the-art mobile platform. VENTURI will exploit, optimize and extend current and next-generation mobile platforms, verifying platform and QoE performance through life-enriching use cases and applications to ensure device-to-user continuity.

SCENE

October 2011 – September 2014

SCENE will develop novel scene representations for digital media that go beyond the ability of either sample-based (video) or model-based (CGI) methods to create and deliver richer media experiences. The SCENE representation and its associated tools will make it possible to capture 3D video, combine video seamlessly with CGI, and manipulate and deliver it to either 2D or 3D platforms in either linear or interactive form.

EMC²

October 2011 – February 2014

The 3DLife consortium partners committed to building upon the project’s collaborative activities and establishing a sustainable European Competence Centre, namely Excellence in Media Computing and Communication (EMC²). Within the scope of EMC², 3DLife will promote additional collaborative activities such as an Open Call for Fellowships, a yearly Grand Challenge, and a series of Distinguished Lectures.

REVERIE

September 2011 - May 2015

Reverie is a Large Scale Integrating Project funded by the European Union. The main objective of Reverie is to develop an advanced framework for immersive media capturing, representation, encoding and semi-automated collaborative content production, as well as transmission and adaptation to heterogeneous displays as a key instrument to push social networking towards the next logical step in its evolution: to immersive collaborative environments that support realistic inter-personal communication.

FreeFace

June 2011 – December 2013

FreeFace will develop a system for assisting the visual authentication of persons employing novel security documents which can store 3D representations of the human head. A person passing a security gate will be recorded by multiple cameras and a 3D representation of the person’s head will be created. Based on this representation, different types of queries such as pose and lighting adaption of either the generated or the stored 3D data will ease manual as well as automatic authentication.

Camera deshaking for endoscopic video

June 2011 – February 2011

Endoscopic videokymography is a method for visualizing the motion of the plica vocalis (vocal folds) for medical diagnosis with time slice images from endoscopic video. The diagnostic interpretability of a kymogram deteriorates if camera motion interferes with vocal fold motion, which is hard to avoid in practice. For XION GmbH, a manufacturer of endoscopic systems, we developed an algorithm for compensating strong camera-to-scene motion in endoscopic video. Our approach is robust to low image quality, optimized to work with highly nonrigid scenes, and significantly improves the quality of vocal fold kymograms.

FascinatE

February 2010 – July 2013

The FascinatE (Format-Agnostic SCript-based INterAcTive Experience) project will develop a system to allow end-users to interactively view and navigate around an ultra-high resolution video panorama showing a live event, with the accompanying audio automatically changing to match the selected view. The output will be adapted to their particular kind of device, covering anything from a mobile handset to an immersive panoramic display. At the production side, this requires the development of new audio and video capture systems, and scripting systems to control the shot framing options presented to the viewer. Intelligent networks with processing components will be needed to repurpose the content to suit different device types and framing selections, and user terminals supporting innovative interaction methods will be needed to allow viewers to control and display the content.

Co-funded by the European Commission's Seventh Framework Programme

3DLife

January 2010 – June 2013

3DLife is a research project funded by the European Union, a Network of Excellence (NoE) that aims to integrate research currently conducted by leading European research groups in the field of Media Internet. 3DLife's ultimate target is to lay the foundations of a European Competence Centre under the name "Excellence in Media Computing & Communication", or simply EMC². Collaboration is at the core of the 3DLife Network of Excellence.

- Computer Vision & Graphics Group

- www.3dlife-noe.eu/3DLife

MUSCADE

January 2010 – December 2012

MUSCADE (Multimedia Scalable 3D for Europe) will create major innovations in production equipment and tools; in production, transmission and coding formats that allow technology-independent adaptation to any 3D display and transmission of multiview signals without exceeding double the data rate of monoscopic TV; and in robust transmission schemes for 3DTV over all existing and future broadcast channels.

Co-funded by the European Commission's Seventh Framework Programme.

Cloud Rendering

December 2009 – August 2010

In the CloudRendering project, we investigated methods to efficiently encode synthetically produced image sequences in cloud computing environments, enabling interactive 3D graphics applications on computationally weak end devices. One goal was to investigate ways to speed up the encoding process by exploiting different levels of parallelism: SIMD, multi-core CPUs/GPUs and multiple connected computers. Additional speedup was achieved by exploiting knowledge of the synthetic nature of the images, paired with access to the 3D image generation machinery. The study was performed for Alcatel-Lucent.

AVATecH

June 2009 – May 2012

The goal of AVATecH (Advancing Video Audio Technology in Humanities Research) is to investigate and develop technology for semi-automatic annotation of audio and video recordings used in humanities research. Detectors, available both through interactive annotation tools and through batch processing, can help with tasks such as chunking, tagging, annotation and search.

Joint Max-Planck/Fraunhofer project

3D@Sat

June 2009 – July 2011

3D@SAT will review and investigate the potential of future 3D Multiview (MVV) / Free viewpoint Video (FVV) technologies, build up a simulation system for 3D services over satellite and disseminate its findings to relevant fora (e.g. DVB 3D-SM, DVB TM–AVC, ITU-T/VCEG, ISO/MPEG, SMPTE, 3D@Home etc.).

Funded by the European Space Agency (ESA).

Fraunhofer Secure Identity Innovation Cluster

January 2009 – December 2011

The Fraunhofer Secure Identity Innovation Cluster is an alliance of five Fraunhofer Institutes, five universities and 12 private sector companies, supported by the federal states of Berlin and Brandenburg. The aim of this joint research & development project is to deliver technologies, processes and products that enable clear and unambiguous identification of persons, objects and intellectual property both in the real and the virtual world, thus enabling owners and users of identity to have individual control over clearly defined, recognizable identities. HHI is working on the passive 3D capture of faces for security documents of the future.

2020 3D Media

March 2008 – February 2012

2020 3D Media will research, develop and demonstrate novel forms of compelling entertainment experiences based on new technologies for the capture, production, networked distribution and display of three-dimensional sound and images.

Co-funded by the European Commission's Seventh Framework Programme.

PRIME

March 2008 – February 2011

Eight leading firms and research institutes have formed a consortium to develop trend-setting techniques and business models for bringing 3D media into cinema, TV and video games. Our project, entitled "PRIME: PRoduction- and Projection-Techniques for Immersive MEdia", is funded by the German Federal Ministry for Economy and Technology (BMWi).

Co-funded by the German Federal Ministry for Economy and Technology (BMWi).

3D4YOU

February 2008 – January 2011

The 3D4YOU project will develop the key elements of a practical 3D television system, in particular the definition of a 3D delivery format and guidelines for a 3D content creation process. The project will develop 3D capture techniques, convert captured content for broadcasting, and develop 3D coding for delivery via broadcast, that is, in a form suitable for transmission and public release.

Co-funded by the European Commission's Seventh Framework Programme.

3DPresence

January 2008 – June 2010

The 3DPresence project will implement a multi-party, high-end 3D videoconferencing concept that tackles the problem of transmitting the feeling of physical presence in real time to multiple remote locations in a transparent and natural way. In short, 3DPresence researches and implements one of the very first true 3D telepresence systems. To realize this objective, 3DPresence will go beyond the current state of the art by emphasizing the transmission, efficient coding and accurate representation of physical presence cues such as multiple-user (auto)stereopsis, multi-party eye contact and multi-party gesture-based interaction.

Co-funded by the European Commission's Seventh Framework Programme.

RUSHES

February 2007 – July 2009

The overall aim of the RUSHES (Retrieval of multimedia Semantic units for enhanced reusability) project is to design, implement, and validate a system for indexing, accessing and delivering raw, unedited audio-visual footage, known in the broadcasting industry as "rushes". The viability of this system will be tested in trials. The goal is to promote the reuse of such material, and especially its content, in the production of new multimedia assets by offering semantic media search capabilities.

Co-funded by the European Commission's Sixth Framework Programme